Edge in the News

Who said scientists are catastrophists? At most, that label fits those at the IPCC, the United Nations body charged with studying climate change. Or some virologist at the World Health Organization who is too quick to announce pandemics. But these are exceptions. By and large, scientists are incurable optimists, their gaze turned to the future, certain in their hearts that they have the right ideas to fix the two or three little things that aren't working or that we don't yet understand.

This week Il Saggiatore brings to bookstores 153 ragioni per essere ottimisti ("153 reasons to be optimistic"; 424 pp., 21 euros), edited by John Brockman. A group of scientists answers the same question: "What makes you optimistic?" Among them are many very well-known figures, for example Jared Diamond, Richard Dawkins, Lisa Randall, Ray Kurzweil, Gino Segrè, Brian Eno, Daniel C. Dennett and Lawrence M. Krauss. The result is an à la carte menu of recipes for solving energy problems, democratizing the global economy, increasing government transparency, defusing religious disputes, reducing world hunger, boosting our intelligence, defeating disease, making moral progress, improving the concept of friendship, transcending our Darwinian roots, understanding the fundamental law of the universe, unifying all knowledge, defeating terrorism and colonizing Mars.

Of course, there are some discrepancies. Richard Dawkins, for example, is optimistic because the law of everything, the final theory capable of explaining every physical phenomenon, will turn up in some laboratory. Frank Wilczek, on the other hand, is optimistic for the opposite reason: thank God, nobody seems able to reach the result Dawkins hopes for, so the world will go on surprising us for a long time yet. Some are optimistic because religion will finally be branded as pure and simple superstition. And some turn the tables and say they are optimistic because science will finally recognize that religion cannot be branded as pure and simple superstition. In short, a bipartisan variety of opinion is guaranteed.

Among the most debated problems is peace, approached from every point of view. For anthropologists, for example, it is a statistical fact: we are heading toward the end of war. The 20th century is weighed down by the blood of one hundred million victims (Lawrence Keeley's calculation in War Before Civilization). A frightening figure. Yet there would have been two billion if our rates of violence matched those of an average primitive society, in which the mortality rate from violence reached fifty percent. For neuroscientists, it is also a matter of brains and sex hormones. Roger Bingham, for example, is optimistic because more women than in the past now sit at the arms-control negotiating table. This has nothing to do with equal opportunity. The point is that global security would be less assured in a testosterone-charged atmosphere. For biologists, although humans are "programmed" to distinguish between Us and Them (that is, between good guys and bad guys), these categories are destined to blur thanks to evolution.

When you look at young people like the ones who grew up to blow up trains in Madrid in 2004, carried out the slaughter on the London underground in 2005, hoped to blast airliners out of the sky en route to the United States in 2006 and 2009, and journeyed far to die killing infidels in Iraq, Afghanistan, Pakistan, Yemen or Somalia; when you look at whom they idolize, how they organize, what bonds them and what drives them; then you see that what inspires the most lethal terrorists in the world today is not so much the Koran or religious teachings as a thrilling cause and call to action that promises glory and esteem in the eyes of friends, and through friends, eternal respect and remembrance in the wider world that they will never live to enjoy.

Our data show that most young people who join the jihad had a moderate and mostly secular education to begin with, rather than a radical religious one. And where in modern society do you find young people who hang on the words of older educators and "moderates"? Youth generally favors actions, not words, and challenge, not calm. That's a big reason so many who are bored, underemployed, overqualified, and underwhelmed by hopes for the future turn on to jihad with their friends. Jihad is an egalitarian, equal-opportunity employer (at least for boys, but girls are web-surfing into the act): fraternal, fast-breaking, thrilling, glorious, and cool. Anyone is welcome to try his hand at slicing off the head of Goliath with a paper cutter.

If we can discredit their vicious idols (show how these bring murder and mayhem to their own people) and give these youth new heroes who speak to their hopes rather than just to ours, then we've got a much better shot at slowing the spread of jihad to the next generation than we do just with bullets and bombs. And if we can de-sensationalize terrorist actions, like suicide bombings, and reduce their fame (don't help advertise them or broadcast our hysterical response, for publicity is the oxygen of terrorism), the thrill will die down. As Saudi Arabia's General Khaled Alhumaidan said to me in Riyadh: "The front is in our neighborhoods but the battle is the silver screen. If it doesn't make it to the 6 o'clock news, then Al Qaeda is not interested." Thus, the terrorist agenda could well extinguish itself altogether, doused by its own cold raw truth: it has no life to offer. This path to glory leads only to ashes and rot.

In the long run, perhaps the most important anti-terrorism measure of all is to provide alternative heroes and hopes that are more enticing and empowering than any moral lessons or material offerings. Jobs that relieve the terrible boredom and inactivity of immigrant youth in Europe, and of the underemployed throughout much of the Muslim world, cannot alone offset the alluring stimulation of playing at war in contexts of continued cultural and political alienation and little sense of shared aspirations and destiny. It is also important to provide alternate local networks and chat rooms that speak to the inherent idealism, sense of risk and adventure, and need for peer approval that young people everywhere tend towards. It could even be a 21st-century version of what the Boy Scouts and high school football teams did for immigrants and potentially troublesome youth as America urbanized a century ago. Ask any cop on the beat: those things work. But it has to be done with the input and insight of local communities or it won't work: de-radicalization, like radicalization itself, best engages from the bottom up, not from the top down.

In sum, there are many millions of people who express sympathy with Al Qaeda or other forms of violent political expression that support terrorism. They are stimulated by a massive, media-driven global political awakening which, for the first time in human history, can "instantly" connect anyone, anywhere to a common cause -- provided the message that drives that cause is simple enough not to require much cultural context to understand it: for example, the West is everywhere assaulting Muslims, and Jihad is the only way to permanently resolve the glaring problems caused by this global injustice.

Consider the parable told by the substitute Imam at the Al Quds Mosque in Hamburg, where the 9/11 bomber pilots hung out, when Marc Sageman and I asked him "Why did they do it?"

"There were two rams, one with horns and one without. The one with horns butted his head against the defenseless one. In the next world, Allah switched the horns from one ram to the other, so justice could prevail."

"Justice" ('adl in Arabic) is the watchword of Jihad. Thunderously simple. When justice and Jihad are joined to "change" -- the elemental soundbite of our age -- and oxygenated by the publicity given to spectacular acts of violence, then the mix becomes heady and potent.

Young people constantly see and discuss among themselves images of war and injustice against "our people," become morally outraged (especially if injustice resonates personally, which is more of a problem abroad than at home), and dream of a war for justice that gives their friendship cause. But of the millions who sympathize with the jihadi cause, only some thousands show willingness to actually commit violence. They almost invariably go on to violence in small groups of volunteers consisting mostly of friends and some kin within specific "scenes": neighborhoods, schools (classes, dorms), workplaces, common leisure activities (soccer, study group, barbershop, café) and, increasingly, online chat-rooms.

Does Europe especially need to reconsider its approach to the Internet? EDGE would say yes:

Edge: TIME TO START TAKING THE INTERNET SERIOUSLY By David Gelernter: "Introduction: Our Algorithmic Culture" by John Brockman:

"Edge was in Munich in January for DLD 2010 and an Edge/DLD event entitled 'Informavore' — a discussion featuring Frank Schirrmacher, Editor of the Feuilleton and Co-Publisher of Frankfurter Allgemeine Zeitung, Andrian Kreye, Feuilleton Editor of Sueddeutsche Zeitung, Munich; and Yale computer science visionary David Gelernter, who, in his 1991 book Mirror Worlds presented what's now called 'cloud computing.'

The intent of the panel was to discuss — for the benefit of a German audience — the import of the recent Frank Schirrmacher interview on Edge entitled 'The Age of the Informavore.' David Gelernter, who predicted the Web, and who first presented the idea of 'the cloud', was the scientist on the panel along with Schirrmacher and Kreye, Feuilleton editors of the two leading German national newspapers, both distinguished intellectuals....

Take a look at the photos from the recent Edge annual dinner and you will find the people who are re-writing global culture, and also changing your business, and, your head. What do Evan Williams (Twitter), Larry Page (Google), Tim Berners-Lee (World Wide Web Consortium), Sergey Brin (Google), Bill Joy (Sun), Salar Kamangar (Google), Keith Coleman (Google Gmail), Marissa Mayer (Google), Lori Park (Google), W. Daniel Hillis (Applied Minds), Nathan Myhrvold (Intellectual Ventures), Dave Morin (formerly Facebook), Michael Tchao (Apple iPad), Tony Fadell (Apple/iPod), Jeff Skoll (formerly eBay), Chad Hurley (YouTube), Bill Gates (Microsoft), Jeff Bezos (Amazon) have in common? All are software engineers or scientists.

So what's the point? It's a culture. Call it the algorithmic culture. To get it, you need to be part of it, you need to come out of it. Otherwise, you spend the rest of your life dancing to the tune of other people's code. Just look at Europe where the idea of competition in the Internet space appears to focus on litigation, legislation, regulation, and criminalization. [emphasis added]"

Those of us involved in communicating ideas need to re-think the Internet. Here at Edge, we are not immune to such considerations. We have to ask if we’re kidding ourselves by publishing 10,000+ word pieces to be read by people who are limiting themselves to 3″ ideas, i.e. the width of the screen of their iPhones and Blackberries. (((And if they’re kidding THEMSELVES, what do you suppose they’re doing to all those guys with the handsets?)))

Many of the people that desperately need to know, don’t even know that they don’t know. Book publishers, confronted by the innovation of technology companies, are in a state of panic. Instead of embracing the new digital reading devices as an exciting opportunity, the default response is to disadvantage authors. Television and cable networks are dumbfounded by the move of younger people to watch TV on their computers or cell-phones. Newspapers and magazine publishers continue to see their advertising model crumble and have no response other than buyouts.

Take a look at the photos from the recent Edge annual dinner and you will find the people who are re-writing global culture, and also changing your business, and, your head. What do Evan Williams (Twitter), Larry Page (Google), Tim Berners-Lee (World Wide Web Consortium), Sergey Brin (Google), Bill Joy (Sun), Salar Kamangar (Google), Keith Coleman (Google Gmail), Marissa Mayer (Google), Lori Park (Google), W. Daniel Hillis (Applied Minds), Nathan Myhrvold (Intellectual Ventures), Dave Morin (formerly Facebook), Michael Tchao (Apple iPad), Tony Fadell (Apple/iPod), Jeff Skoll (formerly eBay), Chad Hurley (YouTube), Bill Gates (Microsoft), Jeff Bezos (Amazon) have in common? All are software engineers or scientists.

(((So… if we can just round up and liquidate these EDGE conspirators, then us authors are out of the woods, right? I mean, that would seem to be a clear implication.)))

So what’s the point? It’s a culture. Call it the algorithmic culture.

(((Even if we rounded ‘em up, I guess we’d still have to fret about those ALGORITHMS they built. Did you ever meet an algorithm with a single spark of common sense or humane mercy? I for one welcome our algorithmic overlords.)))

To get it, you need to be part of it, you need to come out of it. Otherwise, you spend the rest of your life dancing to the tune of other people’s code. (((Not to mention all that existent code written by dead guys. Or ultrarich code-monkey guys who knocked it off and went to cure malaria.)))

Just look at Europe where the idea of competition in the Internet space appears to focus on litigation, legislation, regulation, and criminalization. (((I indeed DO look at Europe, and I gotta say that their broadband rocks. The Italians even have the nerve to round up the occasional Google engineer.)))

Gelernter writes:

The Internet is no topic like cellphones or videogame platforms or artificial intelligence; it’s a topic like education. It’s that big. Therefore beware: to become a teacher, master some topic you can teach; don’t go to Education School and master nothing. To work on the Internet, master some part of the Internet: engineering, software, computer science, communication theory; economics or business; literature or design. Don’t go to Internet School and master nothing….

Some of you surely read and remember Alvin Toffler’s 1970 bestseller, “Future Shock.” Although he remains in the background, Toffler is often cited as one of the world’s greatest futurologists and most influential men. His main thesis in “Future Shock” was encapsulated in his saying that we face “too much change in too short a period of time,” and that we are unprepared for it, individually or as a society.

Today we still are, and we call it “information overload.” I often see it as a form of trivial pursuit; mindless talk on cell phones, mindless games on computers, and mindless drives for trivial things, while important things are unsaid or ignored.

Toffler anticipated the computer revolution, cloning, family fragmentation, cable TV, VCRs, satellites and other things we now take for granted or that create controversy today. He had some interesting recommendations, only one of which I’ll mention here: he believed the needed reformation of the education system could be achieved not by tinkering, but only by doing away with what existed – and exists still – and starting from scratch so as to teach preparedness for change.

He said, “The illiterates of the 21st century will not be those who cannot read and write, but those who cannot learn, unlearn, and relearn.”

So what have we done? We tinkered and tinkered and tinkered, and it’s still broke.

I’m currently reading a 2009 book that reminded me of Toffler’s work; if he foresaw change 40 years ago, this new book, with the future it anticipates, should jolt us with both excitement and worry. The new book is “This Will Change Everything: Ideas That Will Shape the Future,” edited by John Brockman.

It is a compilation of short essays, usually about one-and-a-half to three pages long, by 125 of today’s leading thinkers. They were responding to the question, “What game-changing scientific ideas and developments do you expect to live to see?”

Their answers are more speculative than predictive, but they certainly continue Toffler’s work by bringing us up to date. As I mention just some of their ideas, consider this both a book review and an urging to read the book, because even more change is coming.

Robots are popular in science fiction, and the essayists don’t ignore them. They don’t just foresee simple robots that will clean the house, make dinner and take out the garbage. Some see “relational” robots, humanoid in shape and feature, but with an artificial intelligence that allows them to act human, to learn, grow, develop and enter into relations, all at a rate of change much greater than humans are capable of.

Some foresee that humans will want to marry robots.

With the current controversy about same-sex marriages, imagine the ethical and legal questions of human-robot marriages! Of these and other changes in robotics, one essayist says, “If we are lucky, our new mind children (the robots) will treat us as pets. If we are very unlucky, they will treat us as food.”

Some foresee quantum computers with power far beyond our current computers. One author suggests computers will be so powerful, and brain scanning so advanced, that brain scans will be taken of humans, mapping the billions of neurons and trillions of synapses and saving them, so that the essence of you will be kept in a computer, even suggesting that “you” will be able to watch yourself die if you choose to, since the saved “you” will be able to continue full mental activity.

Others foresee life spans of 200-1,000 years and even immortality. More ethical and legal questions. Are we ready for them?

Work on the human genome and the genomes of other animals will make it possible, as one essayist describes, to break the species barrier. If we could, should we?

Personalized medicine, based on our individual genomes and physiology, will be possible. No more Prozac for everyone with depression, but individual treatments and medicines, specific just for you, and you, and you. How will we deal with the question of who can afford such treatments? Talk about our current health care controversies!

We have searched for extra-terrestrial life for decades (and again, science fiction has been written about that, too. Toffler recommends reading science fiction as a way of learning of change). Essayists believe that we will eventually find extra-terrestrial life, and its form and chemistry basis will have the impact of totally changing our view of who we are and where we fit in the universe.

We know that if extra-terrestrial visitors came to Earth, they would be much more advanced than us. How would you react on waking up one day to the morning news that such aliens had landed on Earth? Would the people of the Earth finally come together as one humanity? Or would they seek to curry favor with the aliens as separate human nations? Would such an event truly be “The Day the Earth Stood Still”?

Geo-engineering, nuclear applications, cryo-technology, bioengineering, neuro-cosmetics, and many other topics are covered. A philosopher whose name is now forgotten once suggested that whatever humans can conceive of and invent, they will use.

Think A-bomb. Does this have to be so?

Another essayist said, “We keep rounding an endless vicious circle. Will an idea or technology emerge anytime soon that will let us exit this lethal cyclotron before we meet our fate head-on and scatter into a million pieces? Will we outsmart our own brilliance before this planet is painted over with yet another layer of people? Maybe, but I doubt it.”

As for the book, try it; I don’t know that you’ll like it, but it’s important and real.

Change is coming!

The big science and tech thinkers in the orbit of Edge.org recently held a grand dinner in California on the theme of "A New Age of Wonder." The title was taken from a Freeman Dyson essay reflecting on how the 19th-century Romantics encountered science, in which the following passage appeared:

"...a new generation of artists, writing genomes as fluently as Blake and Byron wrote verses, might create an abundance of new flowers and fruit and trees and birds to enrich the ecology of our planet. Most of these artists would be amateurs, but they would be in close touch with science, like the poets of the earlier Age of Wonder. The new Age of Wonder might bring together wealthy entrepreneurs like Venter and Kamen ... and a worldwide community of gardeners and farmers and breeders, working together to make the planet beautiful as well as fertile, hospitable to hummingbirds as well as to humans."

Dyson goes on:

Is it possible that we are now entering a new Romantic Age, extending over the first half of the twenty-first century, with the technological billionaires of today playing roles similar to the enlightened aristocrats of the eighteenth century? It is too soon now to answer this question, but it is not too soon to begin examining the evidence. The evidence for a new Age of Wonder would be a shift backward in the culture of science, from organizations to individuals, from professionals to amateurs, from programs of research to works of art.

If the new Romantic Age is real, it will be centered on biology and computers, as the old one was centered on chemistry and poetry.

We do live in an age of technological miracles and scientific wonder. Who can deny it? And yet, and yet! Dyson again, from the same essay:

If the dominant science in the new Age of Wonder is biology, then the dominant art form should be the design of genomes to create new varieties of animals and plants. This art form, using the new biotechnology creatively to enhance the ancient skills of plant and animal breeders, is still struggling to be born. It must struggle against cultural barriers as well as technical difficulties, against the myth of Frankenstein as well as the reality of genetic defects and deformities.

Here's where these techno-utopians lose me, and lose me big time. The myth of Frankenstein is important precisely because it is a warning against the hubris of scientists who wish to extend their formidable powers over the essence of human life, and in so doing eliminate what it means to be human. And here is a prominent physicist waxing dreamily about the way biotech can be used to create works of art out of living creatures, aestheticizing the very basis of life on earth. If that doesn't cause you to shudder, you aren't taking it seriously enough. I think of this Jody Bottum essay from 10 years back, which begins thus:

On Thursday, October 5, it was revealed that biotechnology researchers had successfully created a hybrid of a human being and a pig. A man-pig. A pig-man. The reality is so unspeakable, the words themselves don't want to go together.

Extracting the nuclei of cells from a human fetus and inserting them into a pig's egg cells, scientists from an Australian company called Stem Cell Sciences and an American company called Biotransplant grew two of the pig-men to 32-cell embryos before destroying them. The embryos would have grown further, the scientists admitted, if they had been implanted in the womb of either a sow or a woman. Either a sow or a woman. A woman or a sow.

There has been some suggestion from the creators that their purpose in designing this human pig is to build a new race of subhuman creatures for scientific and medical use. The only intended use is to make animals, the head of Stem Cell Sciences, Peter Mountford, claimed last week, backpedaling furiously once news of the pig-man leaked out of the European Union's patent office. Since the creatures are 3 percent pig, laws against the use of people as research would not apply. But since they are 97 percent human, experiments could be profitably undertaken upon them and they could be used as living meat-lockers for transplantable organs and tissue.

But then, too, there has been some suggestion that the creators' purpose is not so much to corrupt humanity as to elevate it. The creation of the pig-man is proof that we can overcome the genetic barriers that once prevented cross-breeding between humans and other species. At last, then, we may begin to design a new race of beings with perfections that the mere human species lacks: increased strength, enhanced beauty, extended range of life, immunity from disease. "In the extreme theoretical sense," Mountford admitted, the embryos could have been implanted into a woman to become a new kind of human, though, of course, he reassured the Australian media, something like that would be "ethically immoral, and it's not something that our company or any respectable scientist would pursue."

But what difference does it make whether the researchers' intention is to create subhumans or superhumans? Either they want to make a race of slaves, or they want to make a race of masters. And either way, it means the end of our humanity.

The thing I don't get about the starry-eyed techno-utopians is that they don't seem to have taken sufficient notice of World War I, the Holocaust, and Hiroshima. That is, they don't seem to have absorbed the lessons of what the 20th century taught us about human nature, science and technology. Science is a tool that extends human powers over the natural world. It does not change human nature. The two wars and the Holocaust should have once and forever demolished naive optimism about human nature, and what humankind is capable of with its scientific knowledge. Obviously humankind is also capable of putting that knowledge to work to accomplish great good. That is undeniable -- but one is not required to deny it to acknowledge the shadow side of the age of wonder.

As I see it, the only real counterweight to techno-utopianism is religion. Religion is concerned with ultimate things, and demands that we weigh our human desires and actions against them. Scientists, the Promethean heroes, tend to chafe against any restriction on their curiosity -- which is why some of them (Dawkins, et alia) rage against religion. The best of humankind's religious traditions have been thinking about human nature for centuries, even millennia, and know something deep about who we are, and what we are capable of. How arrogant we are to think the Christian, the Jewish, the Islamic, the Taoist, and other sages have nothing important to say to us moderns! What religion speaks of is how to live responsibly in the world. Here is Wendell Berry, from his great book "Life Is a Miracle: An Essay Against Modern Superstition":

It should be fairly clear that a culture has taken a downward step when it forsakes the always difficult artistry that renews what is neither new nor old and replaces it with an artistry that merely exploits what is fashionably or adventitiously "new," or merely displays the "originality" of the artist.

Scientists who believe that "original discovery is everything" justify their work by the "freedom of scientific inquiry," just as would-be originators and innovators in the literary culture justify their work by the "freedom of speech" or "academic freedom." Ambition in the arts and the sciences, for several generations now, has conventionally surrounded itself by talk of freedom. But surely it is no dispraise of freedom to point out that it does not exist spontaneously or alone. The hard and binding requirement that freedom must answer, if it is to last, or if in any meaningful sense it is to exist, is that of responsibility. For a long time the originators and innovators of the two cultures have made extravagant use of freedom, and in the process have built up a large debt to responsibility, little of which has been paid, and for most of which there is not even a promissory note.

Berry goes on:

On the day after Hitler's troops marched into Prague, the Scottish poet Edwin Muir, then living in that city, wrote in his journal ... : "Think of all the native tribes and peoples, all the simple indigenous forms of life which Britain trampled upon, corrupted, destroyed ... in the name of commercial progress. All these things, once valuable, once human, are now dead and rotten. The nineteenth century thought that machinery was a moral force and would make men better. How could the steam-engine make men better? Hitler marching into Prague is connected to all this. If I look back over the last hundred years it seems to me that we have lost more than we have gained, that what we have lost was valuable, and that what we have gained is trifling, for what we have lost was old and what we have gained is merely new."

What Berry identifies as "superstition" is the belief that science can explain all things, and tells us all we need to know about life and how to live it. In other words, the superstitious belief in science as religion. He is not against science; he only wishes for science to know its place, to accept boundaries. He writes:

It is not easily dismissable that virtually from the beginning of the progress of science-technology-and-industry that we call the Industrial Revolution, while some have been confidently predicting that science, going ahead as it has gone, would solve all problems and answer all questions, others have been in mourning. Among these mourners have been people of the highest intelligence and education, who were speaking, not from nostalgia or reaction or superstitious dread, but from knowledge, hard thought, and the promptings of culture.

What were they afraid of? What were their "deep-set repugnances"? What did they mourn? Without exception, I think, what they feared, what they found repugnant, was the violation of life by an oversimplifying, feelingless utilitarianism; they feared the destruction of the living integrity of creatures, places, communities, cultures, and human souls; they feared the loss of the old prescriptive definition of humankind, according to which we are neither gods nor beasts, though partaking of the nature of both. What they mourned was the progressive death of the earth.

This, in the end, is why science and religion have to engage each other seriously. Without each other, both live in darkness, and the destruction each is capable of is terrifying to contemplate -- although I daresay you will not find a monk or a rabbi prescribing altering the genetic code of living organisms for the sake of mankind's artistic amusement. What troubles me, and troubles me greatly, about the techno-utopians who hail a New Age of Wonder is their optimism uncut by any sense of reality, which is to say, of human history. In the end, what you think of the idea of a New Age of Wonder depends on what you think of human nature. I give better than even odds that this era of biology and computers identified by Dyson and celebrated by the Edge folks will in the end turn out to have been at least as much a Dark Age as an era of Enlightenment. I hope I'm wrong. I don't think I will be wrong.

Read more: http://blog.beliefnet.com/roddreher/2010/03/a-new-age-of-wonder-really.html#ixzz16vIdDlHX

Michael Shermer, the libertarian-leaning skeptic and critical thinker who is as formidable and illustrious as he is implacable and indefatigable, lets his hair down in a paean to Bill Gates that is so fulsome I suspect it's a joke.

In an account of a TED-related dinner organized last week in Long Beach by John Brockman, Shermer describes how the multibillionaire Microsoft founder wowed everybody at his table. (Imagine a man so brilliant he makes John Cusack seem like a minor league penseur by comparison.)

I understand -- believe me I really understand -- how wealth or success or hotness can make even the most trivial expulsions seem like nuggets of pangalactic genius. But while Gates' pronouncements on the economy offer something to please enemies of the ARRA stimulus package and plenty to infuriate enemies of the bank bailout, what unites his comments is their sheer heard-it-a-million-times-before banality:

I asked Gates, “Isn’t it a myth that some companies are ‘too big to fail’? What would have happened if the government just let AIG and the others collapse?” Gates’ answer: “Apocalypse.” He then expanded on that, explaining that after talking to his “good friend Warren” (Buffett), he came to the conclusion that the consequences down the line of not bailing out these giant banks would have left the entire world economy in tatters.

Arianna Huffington asked Gates about Obama’s various jobs programs to stimulate the economy. Gates answered: “Let me tell you about what leads companies to create more jobs: demand for their products. My friend Warren owns the world’s largest carpet manufacturing company. Their business has dried up because the demand for carpets has declined dramatically due to the drop off in the construction of new homes and office buildings. If you want to create more jobs you need to create more demand for products that the jobs are created to fulfill. You can’t just make up jobs without a real demand for them.” I believe that was the last thing Arianna said for the evening.

This led me to ask Gates this: “If the market is so good at determining jobs and wages and prices, why not let the market determine the price of money? Why do we need the Fed?” Gates responded: “You sound like Ron Paul! We need the Fed to steer the economy away from extremes of inflation and deflation.” He then schooled us with a mini-lecture on the history of economics (again, probably gleaned from Timothy Taylor’s marvelous course for the Teaching Company on the economic history of the United States) to demonstrate what happens when fluctuations in the price of money (interest rates, etc.) swing too wildly. I believe that was the last question I asked Gates for the evening!

It's like that acid trip where you were just centimeters away from figuring out the whole universe! As noted above, I suspect this is all just a wheeze by Shermer, and if so I apologize for stepping on his joke. But in the interest of everybody who thinks Planet Earth would have survived if Goldman Sachs had been allowed to go out of business (and for reasons I'm happy to go into at greater length, Goldman Sachs would not have gone out of business even under the worst-case scenario), I'd like to point out something to Bill Gates:

It's 2010 now. If you're still trying to justify Hank Paulson's miserable experiment in lemon socialism, you're gonna need more synonyms for "apocalypse," "chaos," "meltdown" and "armageddon." They're definitely out there: I looked them up using Microsoft Word's thesaurus function.

Ladies and gentlemen, Chauncey Gardiner:

Some people whose names you may know or computers you may have used all had dinner together last week.

Photo above: Apocalyptic shit-disturber John Cusack eats the final grape at the namedrop alpha table, drawing heated commentary from Microsoft Chairman Bill Gates, who sources say did not get a single grape.

(L-R, for reals, EDGE 2010 dinner: Jared Cohen, US State Department; Dave Morin, Facebook; John Cusack, actor/writer/director/thinker; Dean Kamen, inventor, Deka Research; Bill Gates, Microsoft, Gates Foundation; Arianna Huffington, The Huffington Post; Michael Shermer, Skeptic Magazine. Not shown in this photo, but huddled around the same table, were Peter Diamandis, George Church, and me.)

Here's the photo gallery for this dinner, hosted by John Brockman and EDGE to herald "the Dawning of the Age of Biology." Let the jpeg record show that I managed to get up close and personal with Marissa Mayer and Nathan Wolfe, then later with Danny Hillis.

More about the big ideas discussed, after the jump.

John Brockman, in presenting the theme for this 2010 edition of the annual EDGE dinner, wrote:

In the summer of 2009, in a talk at the Bristol (UK) Festival of Ideas, physicist Freeman Dyson articulated a vision for the future. He referenced The Age of Wonder by Richard Holmes, pointing out that whereas the first Romantic Age described by Holmes was centered on chemistry and poetry, this new age is dominated by computational biology. Its leaders, he noted, include "biology wizards" Kary Mullis, Craig Venter and medical engineer Dean Kamen; and "computer wizards" Larry Page and Sergey Brin, and Charles Simonyi. He pointed out that the nexus for this intellectual activity, the Lunar Society for the 21st century, is centered around the activities of Edge.

All the scientists mentioned above by Dyson (with the exception of Simonyi) were present at the dinner. Other guests who are playing "a significant role in this new age of wonder through their scientific research, enlightened philanthropy, and entrepreneurial initiative" included Larry Brilliant, George Church, Bill Gates, Danny Hillis, Nathan Myhrvold, Jeff Skoll, and Nathan Wolfe.

by ALDO FORBICE

Chance governs our lives, even if the great majority of human beings are not convinced of it. Scientists have always said so. Leonard Mlodinow devotes an essay to this far-from-marginal theme: La passeggiata dell'ubriaco (The Drunkard's Walk, Rizzoli). The author earned his doctorate at Berkeley and now teaches at Caltech. He argues that chance pursues us throughout our lives: from courtrooms to roulette tables, from television quiz shows to the fate of the Jews in the Warsaw ghetto. Every event in history and in our lives is marked by chance. Yet most people believe that destiny is "marked out" by God or by some other supernatural authority. To demonstrate the role of chance in every event, the author tells numerous stories. Here is one among many. An aspiring actor worked in a Manhattan bar to make a living. For several years he was forced to sleep in a dump, managing to land only a few walk-on parts in television commercials. Then, one summer day in 1984, he went to Los Angeles to watch the Olympics and by chance met an agent who offered him an audition for a TV series. He auditioned and got the part. That actor is Bruce Willis; that is how his career began.

With his book, the physicist Mlodinow takes us on a fascinating journey through the world of chance and probability, giving us back certainties and making us reflect on our choices, whether they turned out right or wrong. Those were nonetheless our own decisions, shaped by chance events, and certainly not preordained or decided by ghosts or supernatural beings. In other words, "the scientific laws of chance" exist. Perhaps they are the very laws that most people, out of ignorance, mental laziness, or simply to absolve themselves of their mistakes, go on calling "luck." But does the future have a direction for each of us? Let us not ask magicians, witches or "horoscope-mongers," but scientists. That is what Max Brockman did, a literary agent who specializes in popular science. He invited 18 young scientists to write an essay each, and the essays have now been collected in a book, Scienza. Next Generation (il Saggiatore). These young researchers try to answer questions such as: What direction do we want to give the future? What is the universe trying to tell us? How can we improve human beings? How important is imagination? Is Homo sapiens destined for extinction? The answers draw on scientific data and knowledge, trying to trace the broad outlines of the science to come.

"The starry heavens above me and the moral law within me," wrote the great philosopher Immanuel Kant. This maxim is the starting point for physicist Andrea Frova (who teaches general physics at "La Sapienza" University of Rome), author of Il Cosmo e il Buondio. Dialogo su astronomia, evoluzione e mito (Bur-Rizzoli). The author imagines that the Earth is about to be struck by a natural cataclysm and that the Almighty decides to take an interest in humanity, which for him "represents an insignificant crumb of an infinite and multiform cosmos." He therefore consults the great thinkers of all time and invites them to help him: from Pythagoras to Newton, from Democritus to Galileo and Laplace, from Aristarchus to Einstein and Hubble, from Aristotle to Darwin, as well as some living scientists. Four hundred years after the discoveries of Galileo and Kepler, the author thus recounts the fascinating, millennia-long adventure of the great questions about the universe, never ceasing to question reason about our origins and our destiny. Science, as is well known, also concerns philosophers. Ernesto Paolozzi (who teaches contemporary philosophy at the University of Naples) takes it up in his essay La bioetica, per decidere della nostra vita (Christian Marinotti Edizioni).

Modern bioethics offers answers about life, death and the quality of life, encroaching on the fields of philosophy, science, law and religion. The author tries to bring clarity to a complex subject that sits at the center of debates and heated controversies. He seeks to return bioethics to the original spirit of its founder, V. R. Potter, who, like Edgar Morin today, saw it as "a bridge to the future": the discipline that places at the center of its concern the destiny of the Earth and of the entire biosphere. It is certainly a fascinating subject, one that will be discussed (and fought over) for a long time yet, and it is an integral part of humanity's future.

Having spent many a column espousing the wonders of the internet, I will use my final column to sound a warning about its dangers.

The first is anonymity. This can be a curse and a blessing online. Sites such as Wikileaks – which desperately needs funding to stay open – provide a valuable place where information can be put into the public domain anonymously.

But there is a flip side. Glance at the comments below any newspaper opinion article and you will be given a whirlwind tour of the most unpleasant aspects of the public psyche.

You don’t overhear conversations such as this in the pub or at work. It’s the anonymity that provides people with the cover to get away with such language without being hit in the face.

And it’s the anonymity the internet provides that allows authoritarian regimes to spy on businesses without the state being held responsible.

It allows criminals to perpetrate scams online that cost the public billions of pounds a year, and illegal downloaders to ply their craft without fear of apprehension.

One of the original, long-abandoned arguments for ID cards was that they could provide a person with a secure online identity, so when you logged on to your bank’s web site, for example, you could prove your identity. If people were more accountable for their online interactions, they might be more civil – and more law-abiding.

But the internet carries a danger arguably more pervasive and long-term than anonymity: the way it changes and shapes thinking, and the way people interact with information.

The online forum edge.org recently tackled this problem. It asked leading scientists, technologists and thinkers: How is the internet changing the way you think? A number of people, including American writer Nicholas Carr and science historian George Dyson, outlined fears that the web is at risk of reducing serious thought rather than promoting it.

One argument posits that a more democratic approach – with everything posted online attributed an equal weight, whether right or wrong – encourages a cavalier attitude to the truth.

Another is that collecting information online reduces our attention span. We will scan a Wikipedia article on a subject, rather than read a book about it.

Furthermore, it is harder to judge the relative value of sources online. Whereas in real life we would trust a professor more than an eight-year-old, online those boundaries are blurred by a lack of clear distinction between sources – both people would be able to type a comment on a site, and we have no way of knowing who they are, other than their words.

The “trust distinguishers” we use in the physical world are easier to fake online. Whereas a professor offline could be examined for reliability by his age, manner of speaking and so on, these things are easier to disguise on the web.

In many ways the internet poses the same dilemmas as a democracy. By giving an equal voice to all, it empowers many of those who are disenfranchised economically or socially and who would not otherwise be heard.

But at the same time, it gives an equal voice to the extremes of society – making it easier for those who run extremist jihadi sites, or sites that deny the Holocaust, to spread information.

Just as in real life, nobody should be denied freedom of speech online. The internet can and must be accessible to everyone. But we need to develop and teach new ways in which to distinguish between, and prioritise, information that we find online if we are to retain our notions of what is correct and incorrect.

Do you remember the proverbial stereotype of the crackpot scientist, head in the clouds, holed up in his laboratory wrestling with mysterious formulas? Well, he has gone the way of phone booths and VCRs, lost for good in the stormy ocean of change. Until yesterday science was an austere, cold and distant discipline. Now, in a sweeping metamorphosis, it has unexpectedly gone pop: it has become warm and communicative, more human.

Do you remember the proverbial stereotype of the strong, handsome, dim-witted athlete and the brilliant but unattractive nerd snubbed by girls? Well, if you remember it, now is the time to forget it. The man hailed in many quarters as the new Einstein is a strapping young Californian named Garrett Lisi. He wrote his "exceptionally simple theory of everything," said to be destined to change the paradigms of physics forever, not in a library or in academia but on the beaches where he lives and surfs. There is a new prototype of scientist who not only does not shy away from the lures of the world (at least not the most exciting ones) but actually uses them to nourish and broaden his research.

If you venture into the video talks of TED, a true brain trust of innovation, or dive into that inexhaustible source of ideas, the many-voiced collective books edited by John Brockman (in particular Science, next generation), you will have no more doubts. Science has not only taken over the philosophical debate; it is redrawing the maps of our imagination. There is a communicative, energetic force in science that refuses to stay within the old boundaries and the old categories.

In our era, biology, genetics, chemistry and physics are expanding in every direction into a lush variety of new fields of knowledge. This is the unmistakable sign that science is going through a thoroughly expansive, evolving phase. With no more self-referential specialisms and no more intermediaries, scientific research is placing the question of extending human existence at the center of itself and of our sensibility. It is highlighting not only the limits of our life, as all conventional systems of thought expressly do, but above all its resources, its power. We are going to have a lot of fun, believe me.

The title of the book 153 ragioni per essere ottimisti ("153 Reasons to Be Optimistic," il Saggiatore) is appealing at a time like ours, studded with worrying news of every kind. It is even more intriguing if one also reads the subtitle: "The great challenges of research." What does it promise? The question arises. The reasons are the answers John Brockman has gathered from many scientists working at the most extreme frontiers of science, each explaining why, from the standpoint of their own work, it is right to look at the future positively. A scientist must by definition be an optimist, driven by the enthusiasm to conquer something new, and the work to which he dedicates his life is meant to bring innovations that improve all our lives. The answers are short, but their content is impressive. They range from genome mapper Craig Venter (pictured) to Marvin Minsky on immortality, from physicist Lee Smolin and Martin Rees on the energy challenge to Freeman Dyson and Nobel laureate George Smoot. Many of the topics concern general culture and society. All agree on one point: the case for optimism is fully justified. (G. Ch.)

Giovanni Caprara

"How is the internet changing the way you think?" That was the big annual question put by the magazine The Edge to some 170 experts, scientists, artists and thinkers. It is hard to summarize the responses, so numerous, varied and often fascinating are the contributions. Whether the respondents are fans or critics of the information technology revolution, one thing is clear: the internet leaves no one indifferent.

THE INTERNET IS CHANGING THE WAY WE EXPERIENCE THINGS

For the artists Eric Fischl and April Gornik, the internet has changed the way they look at the world. "For artists, sight is essential to thought. It organizes information and allows thoughts and feelings to develop. Sight is how we connect." For them, the change lies mainly in images and visual information. More precisely, it lies in the loss of any distinction between materials and process: all visual information, whatever its kind, now looks alike. Visual information is now based on isolated images that create a false illusion of knowledge and experience.

"As John Berger showed, the nature of the photograph is that of a memory object that allows us to forget. Perhaps something similar can be said about the internet. Where art is concerned, the internet extends the network of reproduction that replaces the way we experience something. It replaces experience with facsimile."

The verdict of Brian Eno, the producer, is quite similar. "I notice that the idea of the expert has changed. An expert used to be someone who had access to certain information. Now, since so much information is available to everyone, the expert has become someone with a better sense of interpretation. Judgment has replaced access." For him too, the internet has transformed our relationship to authentic experience (the singular experience enjoyed without mediation). "I notice that creators are paying more attention to the aspects of their work that cannot be duplicated. The authentic has replaced the reproducible."

For Linda Stone: "The more I appreciated and knew it, the more evident the contrast became, and the more intense the tension between physical life and virtual life. The internet stole my body, which became an inert form hunched in front of a glowing screen. My senses grew numb as my hungry mind merged with the global brain." This contrast led Linda Stone back to a greater appreciation of the pleasures of the physical world. "I now move with more determination between each of these worlds, choosing one, then the other, surrendering to neither."

THE POWER OF CONVERSATION

For the philosopher Gloria Origgi, a researcher at the Institut Nicod in Paris, the internet reveals the power of conversation, as the countless email exchanges that have invaded our lives attest. It gives her an opportunity to recall how much dialogue helps us think and build knowledge. "What is the difference between the contemplative state we are in before a blank page and the excited exchanges we have via Gmail or Skype with a colleague who lives in another part of the world?" Very little, she answers. The articles and books we publish are slow-motion conversations. "The internet allows us to think and write in a much more natural way than the one imposed by the tradition of written culture: the dialogical dimension of our thinking is now reinforced by continuous, liquid exchanges." Still, we often have the guilty feeling of wasting our time in these exchanges, unless we "engage in interesting and well-articulated conversations." It is up to us to make responsible use of our conversational skills. "I see this as an improvement in the way we externalize our thinking: a much more natural way of being intelligent in a social world."

For Yochai Benkler, a Harvard professor and author of The Wealth of Networks, the role of conversation is essential. On the face of it, he reflects, the internet has not changed the way our brain performs certain operations. But are we so sure? Perhaps we make less use of the processes involved in long-term memory, or of those used in daily routines, which for so long allowed us to memorize knowledge…

But since he is not a brain specialist, Benkler much prefers to look at "how the internet changes the way we think about the world." And there, one has to acknowledge that the internet, by connecting us more easily to more people, opens up new degrees of proximity or distance along geographic, social, organizational or institutional lines. To this social transformation the internet adds a context "that captures the transcript of a very large number of our conversations," making them more legible than they were in the past. If we understand thinking as a process that is more dialogical and dialectical than Descartes' cogito, the internet lets us step outside the social, geographic and organizational circles that used to constrain us and plug into entirely different conversations from those we could access until now.

"Thinking with these new capabilities requires both a new kind of open-mindedness and a new form of skepticism," he concludes. The internet therefore demands that we adopt the stance of the scholar, of the investigative journalist and of the media critic.

INTELLECTUAL GLOBALIZATION

For the French neuroscientist Stanislas Dehaene, author of Les Neurones de la lecture (Reading in the Brain), the internet is revolutionizing our access to knowledge and, even more, our notion of time. With the internet, the questions a researcher asks colleagues on the other side of the world are answered overnight, whereas before one would have had to wait several weeks. Projects that never sleep are not rare; they already exist, and they are called Linux, Wikipedia, OLPC… But this new time cycle has its downside. It is Amazon's Mechanical Turk, those "human intelligence tasks," a form of outsourcing that offers those who sign up for it no benefits, no contract and no guarantees. This is the dark side of the intellectual globalization made possible by the internet.

For Barry C. Smith, director of the Institute of Philosophy at the University of London, the internet is ambivalent. "The private is now public, the local global; information has become entertainment, consumers have become producers, everyone has become an expert"… But what have all these changes brought? The internet did not develop outside the real world: it consumes its resources and inherits its vices. In it we find the good and the bland, the important and the trivial, the fascinating and the repellent. Faced with the acceleration and explosion of information, our desire for knowledge and our craving not to miss anything push us to nibble at "a little bit of everything and to look for predigested, concise, formatted content from reliable sources. My reading habits have changed, making me attentive to the form of information. It has become necessary to consume thousands of abstracts of scientific papers, to do one's own quick search to pick out what should be read in detail. We start debating at the level of abstracts. (…) The real work gets done elsewhere." There is a danger in thinking that whatever an internet search fails to turn up does not exist, the philosopher concludes.

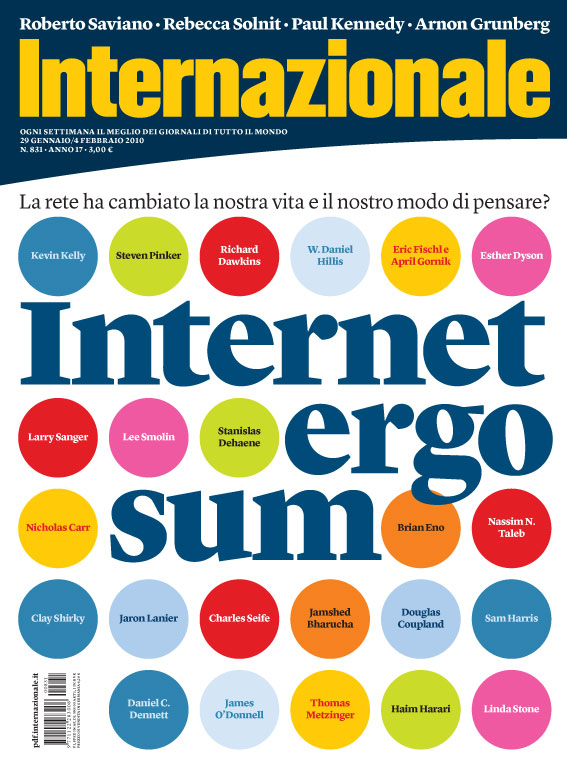

ON THE COVER

INTERNET ERGO SUM

Has the network changed our way of thinking? Meet artists, intellectuals and scientists from around the world, from Kevin Kelly to Brian Eno, from Richard Dawkins to Clay Shirky to Nicholas Carr.

The new Apple iPad isn’t the half of it. The torrent of internet information is forcing us to change the way we think

In 1953, when the internet was not even a technological twinkle in the eye, the philosopher Isaiah Berlin famously divided thinkers into two categories, the hedgehog and the fox: "The fox knows many things, but the hedgehog knows one big thing."

Hedgehog writers, argued Berlin, see the world through the prism of a single overriding idea, whereas foxes dart hither and thither, gathering inspiration from the widest variety of experiences and sources. Marx, Nietzsche and Plato were hedgehogs; Aristotle, Shakespeare and Berlin himself were foxes.

Today, feasting on the anarchic, ubiquitous, limitless and uncontrolled information cornucopia that is the web, we are all foxes. We browse and scavenge thoughts and influences, picking up what we want, discarding the rest, collecting, linking, hunting and gathering our information, social life and entertainment. The new Apple iPad is merely the latest step in the fusion of the human mind and the internet. This way of thinking is a direct threat to ideology. Indeed, perhaps the ultimate expressions of hedgehog thinking are totalitarianism and fundamentalism, which explains why the regimes in China and Iran are so terrified of the internet. The hedgehogs rightly fear the foxes.

Edge (www.edge.org), a website dedicated to ideas and technology, recently asked scores of philosophers, scientists and scholars a simple but fundamental question: "How is the internet changing the way you think?" The responses were astonishingly varied, yet most agreed that the web had profoundly affected the way we gather our thoughts, if not the way we deploy that information.

If there's something that fascinates me about the digital age, it's the evolution of the human psyche as it adjusts to the rapid expansion of informational reach and acceleration of informational flow.

The Edge Foundation, Inc. poses one question a year to be batted around by deep thinkers. This year's: How Is The Internet Changing The Way You Think? One contribution that's bound to infuriate those of us of an older persuasion (I'll raise my hand) came from Marissa Mayer, the V.P. for Search Products & User Experience at Google.

The Internet, she posits, has vanquished the once seemingly interminable search for knowledge.

"The Internet has put at the forefront resourcefulness and critical-thinking," writes Mayer, "and relegated memorization of rote facts to mental exercise or enjoyment." She says that we can now grasp in an instant concepts that, before the Web, would have taken us months to figure out.

So, basically, you don't have to memorize the Gettysburg Address anymore; you just have to Google it or link to it. But here's my question: If you can Google it, does that mean you really understand it?

A favorite writer of mine, Nicholas Carr, who deals with all matters digital, cultural and technological, has a good answer to that question. In his reply to Marissa Mayer, Carr offers a potent analysis of the difference between knowing and meaning.

He uses (brace yourself) a critical exploration of Robert Frost's poetry by Richard Poirier, Robert Frost: The Work of Knowing, to challenge what I might call the Google mentality.

"It's not what you can find out," he wrote. "Frost and (William) James and Poirier told us, it's what you know."

Gathering facts is not the same as gathering knowledge.
