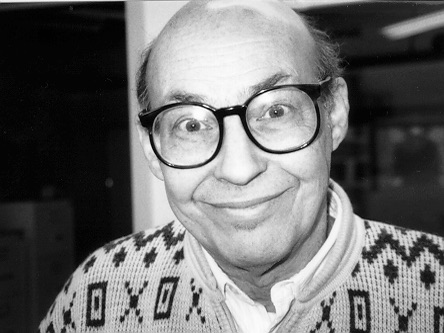

Remembering Minsky

MARVIN MINSKY 1927-2016

Minsky's First Law

Words should be your servants, not your masters.

Minsky's Second Law

Don't just do something. Stand there.

(From “What’s Your Law?” 2004)

"To say that the universe exists is silly, because it says that the universe is one of the things in the universe. So there's something wrong with questions like, 'What caused the Universe to exist?'"

Marvin Minsky, Stephen Jay Gould, Nicholas Humphrey, John Brockman,

Daniel C. Dennett @ Eastover Farm, August 1998: The birth of The Third Culture

THE REALITY CLUB: George Dyson, Ray Kurzweil, Rodney Brooks, Neil Gershenfeld, Daniel C. Dennett, Kevin Kelly, Jaron Lanier, Lee Smolin, Michael Hawley, Roger Schank, Brian Greene, Nicholas Negroponte, Pattie Maes, Gary Marcus, Sherry Turkle, Tod Machover, W. Daniel Hillis, Ed Boyden, Ken Forbus

From INTRODUCTION TO PART II: "A COLLECTION OF KLUDGES"

in THE THIRD CULTURE: BEYOND THE SCIENTIFIC REVOLUTION

By John Brockman [5.1.96]

Introduction

One of the central metaphors of the third culture is computation. The computer does computation and the mind does computation. To understand what makes birds fly, you may look at airplanes, because there are principles of flight and aerodynamics that apply to anything that flies. That is how the idea of computation figures into the new ways in which scientists are thinking about complicated systems.

At first, people who wanted to be scientific about the mind tried to treat it by looking for fundamentals, as in physics. We had waves of so-called mathematical psychology, and before that psychologists were trying to find a simple building block — an "atom" — with which to reconstruct the mind. That approach did not work. It turns out that minds, which are brains, are extremely complicated artifacts of natural selection, and as such they have many emergent properties that can best be understood from an engineering point of view.

We are also discovering that the world itself is very "kludgey"; it is made up of curious Rube Goldberg mechanisms that do cute tricks. This does not sit well with those who want science to be crystalline and precise, like Newton's pure mathematics. The idea that nature might be composed of Rube Goldberg machines is deeply offensive to people who have a strong esthetic drive — those who say that science must be beautiful, that it must be pure, that everything should be symmetrical and deducible from first principles. That esthetic has been a great motivating force in science, since Plato.

Counteracting it is the esthetic that emerges from this book — the esthetic that says the beauties of nature come from the interaction of mind-boggling complexities, and that it is complexity essentially most of the way down. The computational perspective — machines made out of machines made out of machines — is on the ascendant. There is a lot of talk about machines in this book.

Marvin Minsky is the leading light of AI — that is, artificial intelligence. He sees the brain as a myriad of structures. Scientists who, like Minsky, take the strong AI view believe that a computer model of the brain will be able to explain what we know of the brain's cognitive abilities. Minsky identifies consciousness with high-level, abstract thought, and believes that in principle machines can do everything a conscious human being can do.

"SMART MACHINES" by Marvin Minsky

Chapter 8 in THE THIRD CULTURE

By John Brockman [5.1.96]

Roger Schank: Marvin Minsky is the smartest person I've ever known. He's absolutely full of ideas, and he hasn't gotten one step slower or one step dumber. One of the things about Marvin that's really fantastic is that he never got too old. He's wonderfully childlike. I think that's a major factor explaining why he's such a good thinker. There are aspects of him I'd like to pattern myself after. Because what happens to some scientists is that they get full of their power and importance, and they lose track of how to think brilliant thoughts. That's never happened to Marvin.

Like everyone else, I think most of the time. But mostly I think about thinking. How do people recognize things? How do we make our decisions? How do we get our new ideas? How do we learn from experience? Of course, I don't think only about psychology. I like solving problems in other fields — engineering, mathematics, physics, and biology. But whenever a problem seems too hard, I start wondering why that problem seems so hard, and we're back again to psychology! Of course, we all use familiar self-help techniques, such as asking, "Am I representing the problem in an unsuitable way?" or "Am I trying to use an unsuitable method?" However, another way is to ask, "How would I make a machine to solve that kind of problem?"

A century ago, there would have been no way even to start thinking about making smart machines. Today, though, there are lots of good ideas about this. The trouble is, almost no one has thought enough about how to put all those ideas together. That's what I think about most of the time.

The technical field of research toward machine intelligence really started with the emergence in the 1940s of what was first called cybernetics. Soon this became a main concern of several different scientific fields, including computer science, neuropsychology, computational linguistics, control theory, cognitive psychology, artificial intelligence — and, more recently, the new fields called connectionism, virtual reality, intelligent agents, and artificial life.

Why are so many people now concerned with making machines that think and learn? It's clear that this is useful to do, because we already have so many machines that solve so many important and interesting problems. But I think we're motivated also by a negative reason: the sense that our traditional concepts about psychology are no longer serving us well enough. Psychology developed rapidly in the early years of this century, and produced many good theories about the periphery of psychology — notably, about certain aspects of perception, learning, and language. But experimental psychology never told us enough about issues of more central concern — about thinking, meaning, consciousness, or feeling. ... [Continue]

CONSCIOUSNESS IS A BIG SUITCASE

A Talk with Marvin Minsky [9.26.98]

Marvin Minsky, Alan Guth, Daniel C. Dennett, Rodney Brooks, Nicholas Humphrey, Lee Smolin

My goal is making machines that can think—by understanding how people think. One reason why we find this hard to do is because our old ideas about psychology are mostly wrong. Most words we use to describe our minds (like "consciousness", "learning", or "memory") are suitcase-like jumbles of different ideas. Those old ideas were formed long ago, before 'computer science' appeared. It was not until the 1950s that we began to develop better ways to help think about complex processes.

Computer science is not really about computers at all, but about ways to describe processes. As soon as those computers appeared, this became an urgent need. Soon after that we recognized that this was also what we'd need to describe the processes that might be involved in human thinking, reasoning, memory, and pattern recognition, etc.

JB: You say 1950, but wouldn't this be preceded by the ideas floating around the Macy Conferences in the '40s?

MM: Yes, indeed. Those new ideas were already starting to grow before computers created a more urgent need. Before programming languages, mathematicians such as Emil Post, Kurt Gödel, Alonzo Church, and Alan Turing already had many related ideas. In the 1940s these ideas began to spread, and the Macy Conference publications were the first to reach more of the technical public. In the same period, there were similar movements in psychology, as Sigmund Freud, Konrad Lorenz, Nikolaas Tinbergen, and Jean Piaget also tried to imagine advanced architectures for 'mental computation.' In the same period, in neurology, there were my own early mentors (Nicholas Rashevsky, Warren McCulloch and Walter Pitts, Norbert Wiener, and their followers), and all those new ideas began to coalesce under the name 'cybernetics.' Unfortunately, that new domain was mainly dominated by continuous mathematics and feedback theory. This made cybernetics slow to evolve more symbolic computational viewpoints, and the new field of Artificial Intelligence headed off to develop distinctly different kinds of psychological models.

JB: Gregory Bateson once said to me that the cybernetic idea was the most important idea since Jesus Christ.

MM: Well, surely it was extremely important in an evolutionary way. Cybernetics developed many ideas that were powerful enough to challenge the religious and vitalistic traditions that had for so long protected us from changing how we viewed ourselves. These changes were so radical as to undermine cybernetics itself. So much so that the next generation of computational pioneers (the ones who aimed more purposefully toward Artificial Intelligence) set much of cybernetics aside.

Let's get back to those suitcase-words (like intuition or consciousness) that all of us use to encapsulate our jumbled ideas about our minds. We use those words as suitcases in which to contain all sorts of mysteries that we can't yet explain. This in turn leads us to regard these as though they were "things" with no structures to analyze. I think this is what leads so many of us to the dogma of dualism: the idea that 'subjective' matters lie in a realm that experimental science can never reach. Many philosophers, even today, hold the strange idea that there could be a machine that works and behaves just like a brain, yet does not experience consciousness. If that were the case, then this would imply that subjective feelings do not result from the processes that occur inside brains. Therefore (so the argument goes) a feeling must be a nonphysical thing that has no causes or consequences. Surely, no such thing could ever be explained! ...[Continue]

THE EMOTION UNIVERSE

A Talk with Marvin Minsky [9.16.02]

I was listening to this group talking about universes, and it seems to me there's one possibility that's so simple that people don't discuss it. Certainly a question that occurs in all religions is, "Who created the universe, and why? And what's it for?" But something is wrong with such questions because they make extra hypotheses that don't make sense. When you say that X exists, you're saying that X is in the Universe. It's all right to say, "this glass of water exists" because that's the same as "This glass is in the Universe." But to say that the universe exists is silly, because it says that the universe is one of the things in the universe. So there's something wrong with questions like, "What caused the Universe to exist?"

The only way I can see to make sense of this is to adopt the famous "many-worlds theory" which says that there are many "possible universes" and that there is nothing distinguished or unique about the one that we are in - except that it is the one we are in. In other words, there's no need to think that our world 'exists'; instead, think of it as like a computer game, and consider the following sequence of "Theories of It":

(1) Imagine that somewhere there is a computer that simulates a certain World, in which some simulated people evolve. Eventually, when these become smart, one of those persons asks the others, "What caused this particular World to exist, and why are we in it?" But of course that World doesn't 'really exist' because it is only a simulation.

(2) Then it might occur to one of those people that, perhaps, they are part of a simulation. Then that person might go on to ask, "Who wrote the Program that simulates us, and who made the Computer that runs that Program?"

(3) But then someone else could argue that, "Perhaps there is no Computer at all. Only the Program needs to exist - because once that Program is written, then this will determine everything that will happen in that simulation. After all, once the computer and program have been described (along with some set of initial conditions) this will explain the entire World, including all its inhabitants, and everything that will happen to them. So the only real question is what is that program and who wrote it, and why."

(4) Finally another one of those 'people' observes, "No one needs to write it at all! It is just one of 'all possible computations!' No one has to write it down. No one even has to think of it! So long as it is 'possible in principle,' then people in that Universe will think and believe that they exist!"

So we have to conclude that it doesn't make sense to ask about why this world exists. However, there still remain other good questions to ask, about how this particular Universe works. For example, we know a lot about ourselves - in particular, about how we evolved - and we can see that, for this to occur, the 'program' that produced us must have certain kinds of properties. For example, there cannot be structures that evolve (that is, in the Darwinian way) unless there can be some structures that can make mutated copies of themselves; this means that some things must be stable enough to have some persistent properties. Something like molecules that last long enough, etc. ...[Continue]

A Conversation: THE NEW HUMANISTS:

Daniel C. Dennett, Marvin Minsky, and John Brockman

[9.18.03]

Part 1

Part 2

NEW PROSPECTS OF IMMORTALITY

by Marvin Minsky

From: "What Are You Optimistic About?", 2007

Benjamin Franklin: I wish it were possible... to invent a method of embalming drowned persons, in such a manner that they might be recalled to life at any period, however distant; for having a very ardent desire to see and observe the state of America a hundred years hence, I should prefer to an ordinary death, being immersed with a few friends in a cask of Madeira, until that time, then to be recalled to life by the solar warmth of my dear country! But... in all probability, we live in a century too little advanced, and too near the infancy of science, to see such an art brought in our time to its perfection.

—Letter to Jacques Dubourg, April 1773

Eternal life may come within our reach once we understand enough about how our knowledge and mental processes are embodied in our brains. For then we should be able to duplicate that information and then transfer it into more robust machinery. This might be possible late in this century, in view of how much we are learning about how human brains work and of the growth of computer capacities.

However, this could have been possible long ago if the progress of science had not succumbed to the spread of monotheistic religions. For as early as 250 BCE, Archimedes was well on the way toward modern physics and calculus. So in an alternate version of history (in which the pursuit of science did not decline) just a few more centuries could have allowed the likes of Newton, Maxwell, Gauss, and Pasteur to anticipate our present state of knowledge about physics, mathematics, and biology. Then perhaps by 300 AD we could have learned so much about the mechanics of minds that citizens could decide on the lengths of their lives.

I'm sure that not all scholars would agree that religion retarded the progress of science. However, the above scenario seems to suggest that Pascal was wrong when he concluded that only faith could offer salvation. For if science had not lost those millennia, we might already be able to transfer our minds into our machines. If so, then you could rightly complain that religions have deprived you of the option of having an afterlife!

Do we really want to lengthen our lives?

Woody Allen: I don't want to achieve immortality through my work. I want to achieve it through not dying.

What can one conclude from this? Perhaps some of those persons lived with a sense that they did not deserve to live so long. Perhaps others did not regard themselves as having worthy long term goals. In any case, I find it worrisome that so many of our citizens are resigned to die. A planetful of people who feel that they do not have much to lose: surely this could be dangerous. (I neglected to ask the religious ones why perpetual heaven would be less boring.)

However, my scientist friends showed few such concerns: "There are countless things that I want to find out, and so many problems I want to solve, that I could use many centuries." I'll grant that religious beliefs can bring mental relief and emotional peace—but I question whether these, alone, should be seen as commendable long-term goals.

The quality of extended lives

Anatole France: The average man, who does not know what to do with his life, wants another one which will last forever.

Certainly, immortality would seem unattractive if it meant endless infirmity, debility, and dependency upon others, but here we'll assume a state of perfect health. A somewhat sounder concern might be that the old ones should die to make room for young ones with newer ideas. However, this leaves out the likelihood that there are many important ideas that no human person could reach in, say, less than a few hundred well-focused years. If so, then a limited lifespan might deprive us of great oceans of wisdom that no one can grasp.

In any case, such objections are shortsighted because, once we embody our minds in machines, we'll find ways to expand their capacities. You'll be able to edit your former mind, or merge it with parts of other minds, or develop completely new ways to think. Furthermore, our future technologies will no longer constrain us to think at the crawling pace of "real time." The events in our computers already proceed a million times faster than those in our brains. To such beings, a minute might seem as long as a human year.

How could we download a human mind?

Today we are only beginning to understand the machinery of our human brains, but we already have many different theories about how those organs embody the processes that we call our minds. We often hear arguments about which of those different theories are right, but those often are the wrong questions to ask, because we know that every brain has hundreds of different specialized regions that work in different ways. I have suggested a dozen different ways in which our brains might represent our skills and memories. It could be many years before we know which structures and functions we'll need to reproduce.

(No such copies can yet be made today, so if you want immortality, your only present option is to have your brain preserved by a Cryonics company. However, improving this field still needs further research — but there is not enough funding for this today — although the same research is also needed for advancing the field of transplanting organs.)

Some writers have even suggested that, to make a working copy of a mind, one might have to include many small details about the connections among all the cells of a brain; if so, it would require an immense amount of machinery to simulate all those cells' chemistry. However, I suspect we'll need far less than that, because our nervous systems must have evolved to be insensitive to lower-level details; otherwise, our brains would rarely work.

Fortunately, we won't need to solve all those problems at once. For long before we are able to make complete "backups" of our personalities, this field of research will produce a great flood of ideas for adding new features and accessories to our existing brains. Then this may lead, through smaller steps, to replacing all parts of our bodies and brains, and thus repairing all the defects and flaws that presently make our lives so brief. And the more we learn about how our brains work, the more ways we will find to provide them with new abilities that never evolved in biology.

Marvin & me, at his daughter Margaret's wedding.
