ON "ITERATED PRISONER’S DILEMMA CONTAINS STRATEGIES THAT DOMINATE ANY EVOLUTIONARY OPPONENT" by William H. Press and Freeman J. Dyson

1. CAPSULE SUMMARY

Executive summary: Robert Axelrod's 1980 tournaments of iterated prisoner's dilemma strategies have been condensed into the slogan, Don't be too clever, don't be unfair. Press and Dyson have shown that cleverness and unfairness triumph after all.

Longer version: As Press and Dyson themselves say, the prisoner's dilemma has been so intensively studied that you wouldn't think there's anything new and important to be discovered about it. That's just what they've done, and it's the most fundamental finding you could ask for, namely a competitively "better" strategy. It allows one player to score more points than the other.

How can there be a "better" strategy for a game where the two players' positions are interchangeable? Press and Dyson plausibly envision a contest between an "evolutionary" player (whom we might define as a pragmatist/empiricist/crowd-sourcer who favors the strategies that work best in practice) and a super-clever player using Press-Dyson's strategy. Press and Dyson describe an "extortionate" strategy that can, in effect, demand an unequal cut of the game's points and get it. An evolutionary-pragmatic opponent would find that his own interests are best served by adopting counterstrategies that enforce the unequal division.

Should the exploited player realize what is happening, and should he be willing to hurt himself in order to punish the extortionate player (something ruled out in game theory but very common with human beings), then the iterated prisoner's dilemma becomes an ultimatum game. That is, the exploited player is left to choose between gritting his teeth and accepting the unequal split—or punishing the exploiter and himself in the bargain.The prisoner's dilemma is both math and mythos. From its invention at the RAND Corporation in 1950, people have been drawing parallels to all sorts of social, political, and biological conflicts. The Press-Dyson finding directly challenges the two notions at the heart of the meta-PD mythos: (1) that you can't fool evolution, and (2) that the most successful strategies are fair strategies.

Both ideas go back to Robert Axelrod's tournaments, which found that the strategy known as Tit for Tat (TFT) performed best of all those submitted. TFT is basically the Golden Rule written by a corporate attorney: "I'll cooperate if you will, but if you defect I'll defect right back." Practically everyone who's ever written about the prisoner's dilemma has taken this as an affirmation of ethical values! The most successful strategy is cooperative and fair—or so we thought.

Point (1) is also loosely connected to such trending ideas as genetic algorithms and crowd-sourcing. The idea is that you don't try to learn what's going on (which may be impractical in a complex system); instead you try a lot of things and look at what works best. This is imagined to produce a good and robust result because you can't fool evolution. But the Press-Dyson extortionate strategy does fool evolution. That's its modus operandi.

This is a fascinating result mathematically and has many implications for those who care to draw them. I was reminded of the rhetoric over income inequality, and how it's apparently enforced by perceptions of self-interest even among the not-so-wealthy (I must plead guilty to finding topical parallels in the IPD, a sin that should perhaps be classed with drawing cartoons of dinosaurs contemporary with cave men.)

2. THE EVOLUTION OF EXTORTION—Questions equally addressed to both authors.

• As you say in the paper, the Iterated Prisoner's Dilemma (IPD) has been so extensively studied that a reasonable person might think there is nothing new and fundamental to learn. What first led you to conclude otherwise?

• You're telling us there's no Santa Claus—that the IPD is a tough game and sometimes tough strategies win. That's a different message than we got from Robert Axelrod's 1984 book, "The Evolution of Cooperation." Now you're bringing in a new concept: "extortionate" strategies. Can you explain how an IPD player can "extort" a higher score from his opponent?

• The hero of Axelrod's book is the strategy Tit for Tat (TFT). I find it interesting that TFT is closely related to your extortionate strategies (the white sheep of the family). Can you explain a little about that relationship and how extortionate strategies differ from TFT?

• A strong subtext in the popular coverage of the evolution of cooperation research was that it provided a mathematical justification for human values like cooperation and fair division. Were we wrong to draw sweeping meta-mathematical conclusions? Should be drawing different (darker?) conclusions from your result?

• Another popular credo that you're upsetting is "you can't fool evolution." We've gained a lot of respect for how powerful and subtle evolution is—both biological evolution and the many other kinds of viral propagation plus selection pressures we see in the human and digital realms. You're showing how to fake out evolution! Should we dial down our faith in evolution as an all-powerful optimizer?

• You would think that a player with a long memory would have an advantage—like a coach who has videos of all the opposing team's games and can pore over them to formulate his counterstrategy. But you show that it's the player with the shortest memory who sets the ground rules: he can only react to what he can remember. How did you come to this insight?

• One final comment, not a question. Your connection of prisoner's dilemma to the ultimatum game has almost has a yin-yang quality to it. Historically, the PD was supposed to be a case in which game theory applies to real life. The ultimatum game, on the other hand, is a classic counterexample, showing that people are not always rational maximizers and game theory doesn't apply when emotions are involved. I suspect that the mix of iterated cooperate/don't cooperate decisions with ultimatum game features is something common in the world. There are many cases where an exploited party is in a prison of self-interest (a spoofed fitness landscape) and must choose between the frying pan or the fire. I can see that in abusive marriages, terrorism, the U.S. Congressional deadlock, and the income inequality debate.

I interviewed several of the people who worked at the RAND Corporation at the time they devised the PD (1950), including PD co-inventor Melvin Dresher and game theorist Martin Shubik. Shubik told me that they had a running project of trying to capture "pathological phenomena" in simple games. I think the RAND crowd would have been amused to learn how extortion can be an element of the IPD.

—William Poundstone

WILLIAM PRESS RESPONDS TO WILLIAM POUNDSTONE

• As you say in the paper, the Iterated Prisoner's Dilemma (IPD) has been so extensively studied that a reasonable person might think there is nothing new and fundamental to learn. What first led you to conclude otherwise?

It’s an odd journey. I was originally wondering about a much more modest question that, annoyingly, I couldn’t find already answered in the Prisoner’s Dilemma literature. That question was: Are there any evolutionarily stable points in one-move-deep IPD? That is, are there any strategies for the players X and Y, depending only on the previous move, from which each would be hurt by defecting? As a computational scientist, my approach was to do a computer search – something a bit harder than it sounds, because the search space is big, and the desired property is subtle. I wrote what I thought was an elegant computer program, and set it about the search.

Now the odd thing kept happening: My program kept crashing, but at a different point in the strategy space each time. It took a while to recognize that all these points lay exactly on a plane, and that the reason that the program was crashing was because it was assuming the obvious “fact” that each player’s strategy affects his own score. Well, that isn’t a fact at all! The computer had found the first of what we call “zero-determinant (ZD) strategies”, and, indeed, I was able to prove that these all lie on a plane.

The story now becomes one of symbiosis between computer and human intelligence: The computer could find instances, but not generalize them. I knew that the exact complement of computer intelligence, as yin to yang, is Freeman-Dyson-intelligence. So I showed what I had to Freeman. A day later, he sent me an email with the general result, equations 1-7 in our paper, all worked out. These equations immediately expose all the ZD strategies, including the successful extortionate ones.

By the way, I still don’t know the answer to the original, modest question.

• You're telling us there's no Santa Claus—that the IPD is a tough game and sometimes tough strategies win. That's a different message than we got from Robert Axelrod's 1984 book, The Evolution of Cooperation. Now you're bringing in a new concept: "extortionate" strategies. Can you explain how an IPD player can "extort" a higher score from his opponent?

Only obliquely Clintonesque, I have to respond, “What does explain mean?” The successful extortionate strategies have been mathematically present in IPD from the moment that Axelrod defined the game they just went, seemingly, unnoticed. On a planet in another galaxy, seven million years ago, Axelrod-Prime independently invented the same IPD game. He (it?) was of a species several times more intelligent than Homo sapiens and so recognized immediately that, between sentient players, the IPD game is dominated by an obvious extortionate strategy. Hence, for Axelrod-Prime, IPD was just another instantiation of the well-studied Ultimatum Game. He (it?) thus never bothered to publish it.

•The hero of Axelrod's book is the strategy Tit for Tat (TFT). I find it interesting that TFT is closely related to your extortionate strategies (the white sheep of the family). Can you explain a little about that relationship and how extortionate strategies differ from TFT?

TFT is mathemagical! It is by no means instantly obvious (at least not to me) that simply aping your opponent’s previous play guarantees that in repeated play you’ll get the same score that he does; but the math works out this way. TFT is one limiting case of our extortionate ZD strategies. The extortionate ZD strategies have two adjustable parameters. One, which we call c (chi), is how much you want to extort. Chi greater than one means that you grab a disproportionately large score; chi less than one is generous and cedes an advantage to your opponent. There is another parameter that we call f (phi), whose interpretation is not so clear, but it can be set only within a finite range. TFT is the ZD strategy with chi equal to one, and phi at one extreme end of its range. If you keep chi equal 1 and move phi around, you get a whole set of strategies that are subtly different from TFT, but equally support cooperation. Stewart and Plotkin’s commentary on our paper shows that there are also ZD strategies that generalize the much studied GTFT (“generous tit for tat”) strategy.

• A strong subtext in the popular coverage of the evolution of cooperation research was that it provided a mathematical justification for human values like cooperation and fair division. Were we wrong to draw sweeping meta-mathematical conclusions? Should be drawing different (darker?) conclusions from your result?

It would be a shame if our paper became just another meme in service of Gordon Gekko’s “greed is good”. In fact, it embodies something much more hopeful, rather closer to (if the requirement is to match math to cliché) “trust but verify”. Once both players understand ZD, then each has the power to set the other’s score, independent of the other’s actions. This allows them to make an enforceable treaty, not possible using simpler strategies. If one player secretly defects from the treaty, his score (set by the other player) won’t improve. The treaty goes like this: We agree to set each other’s score to the maximum cooperative value. We further agree that, if my score goes down (which can only mean that you are mischievously violating the treaty), then it shall be lawful for me to lower your score by the same amount and for the same period of time, after which I will return it to the maximum cooperative value. And, of course, symmetrically for you.

This sounds a bit like TFT, but it is actually quite different. First, while TFT guarantees both players the same score, it does not guarantee them both the maximum score. In fact, both players playing TFT is an unstable situation allowing any possible (mutual) score, while the above “treaty” stably awards both players the maximum. Second, TFT plays out on a rapid, move-by-move timescale. There is no way to pause for reflection or negotiation without losing points irretrievably.The ZD treaty regime instead allows for a whole range of careful, deliberative negotiations. You can never change your own score by unilateral action, and you always retain the future ability to extract any specified penalty from your opponent. That is a world in which diplomacy trumps conflict.

• Another popular credo that you're upsetting is "you can't fool evolution." We've gained a lot of respect for how powerful and subtle evolution is—both biological evolution and the many other kinds of viral propagation plus selection pressures we see in the human and digital realms. You're showing how to fake out evolution! Should we dial down our faith in evolution as an all-powerful optimizer?

Yes, Virginia, you can fool evolution. People do it all the time, nowadays, with directed evolution experiments that fool microbes into doing unnatural things. The trick is to keep adjusting the environment so that the “more fit” organism is the one that bends most to our (unnatural) goal. So, it’s not a surprise that these tricks exist in principle. What is a surprise is that they are so easily exemplified, mathematically, in a game as simple as Iterated Prisoner’s Dilemma – and that this was mathematically obscure enough to escape notice. Do these tricks exist in all mathematical games? Do they exist in reallife competitive scenarios? When both players have a theory of mind (that is, are not just evolving to maximize their own score), are all games, in some deep way, actually Ultimatum Games? These now seem to be interesting questions.

• You would think that a player with a long memory would have an advantage—like a coach who has videos of all the opposing team's games and can pore over them to formulate his counterstrategy. But you show that it's the player with the shortest memory who sets the ground rules: he can only react to what he can remember. How did you come to this insight?

I got to this by a rather formal calculation (equations 18-19 in our paper, involving the statistical idea of “marginalization”), but economist and game theorist Drew Fudenberg has pointed out to us that it’s not really so surprising: In a "pure" repeated game there is no physical or informational link across plays of the game. So a player’s only reason to condition his play on the past is if his opponent does, too. It is trivial that if opponent uses no memory you don't need to either. This is just a generalization of that.

FREEMAN DYSON RESPONDS TO ALEXANDER STEWART AND JOSHUA PLOTKIN

I was quite ignorant about Prisoners' Dilemma until Bill Press started to educate me. I am still very skeptical about its use as a model for the evolution of cooperation. Everything has become much clearer since I saw the Commentary by Stewart and Plotkin. Their Figure 1, tabulating the results of various Prisoners' Dilemma strategies competing in an Axelrod tournament, is particularly illuminating. The main result of their Figure 1 is dramatic.

That is the extreme reversal of roles between Press's strategies ZDGTFT-2 and EXTORT-2 when we go from score to wins. The generous strategy ZDGFTF-2 scores high and wins low. The punitive strategy EXTORT-2 scores low and wins high. So the strategy that is best for outcome is worst for evolution, and vice versa.

Cooperation loses and defection wins. The ZD strategies confirm this conclusion and make it sharper.

My view of the evolution of cooperation is colored by my memories of childhood in England. In the English calendar there were two important days, Christmas and Guy Fawkes. Christmas was the festival of love and forgiveness. Guy Fawkes was the festival of hate and punishment. Both festivals were ritualized and commercialized, but their evolutionary roots were still alive. Guy Fawkes was the guy who tried to blow up the King and the Parliament in 1605 and was gruesomely punished by torture and burning. For the children, Christmas was boring and Guy Fawkes was fun. We were born with an innate reward system that finds joy in punishing cheaters. The system evolved to give cooperative tribes an advantage over non-cooperative tribes, using punishment to give cooperation an evolutionary advantage within the tribe. This double selection of tribes and individuals goes way beyond the Prisoners' Dilemma model.

___

Thanks, Bill Press, for your thoughtful response to Poundstone, and for including me in the story.

Yours sincerely,

Freeman

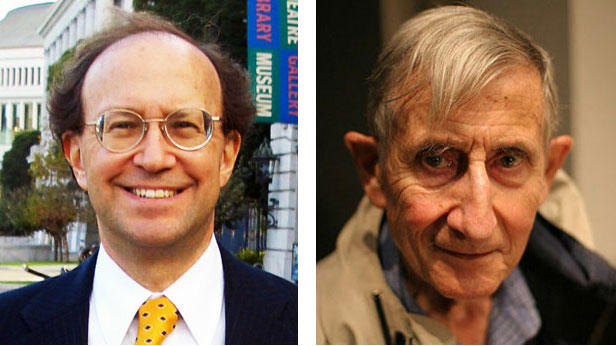

WILLIAM H. PRESS is a professor in Computer Sciences and Integrative Biology at the University of Texas at Austin. He is the current President of the American Academy of the Advancement of Science (AAAS) and a member of President's Council of Advisors on Science and Technology (PCAST).

William H. Press's Edge Bio Page

FREEMAN DYSON is professor of physics at the Institute for Advanced Study, in Princeton. His professional interests are in mathematics and astronomy. Among his many books are Disturbing the Universe, Infinite in All Directions Origins of Life, From Eros to Gaia, Imagined Worlds, The Sun, the Genome, and the Internet.

WILLIAM POUNDSTONE is a journalist and author, and has been nominated twice for the Pulitzer Prize. Among his books are Prisoner's Dilemma; The Recursive Universe; Big Secrets; Fortune's Formula; Gaming the Vote; Priceless; and most recently, Are You Smart Enough to Work at Google?

William Poundstone's Edge Bio Page

THE REALITY CLUB

A comment on Press-Dyson (PNAS)

Being close means not being there. We had known about strategies that allow to nail down the opponent's payoff to an arbitrary level [1,2], but not about the vast and fascinating realm of zero determinant (ZD) strategies that enforce a linear relation between the payoffs for the two players. This opens a new facet in the study of trigger strategies and folk theorems for iterated games, and offers a highly stimulating approach for moral philosophers enquiring about 'egoistic' and 'tuistic' viewpoints.

Our only quibble with the Press-Dyson paper is semantic. The title speaks of 'evolutionary opponents', which suggests evolutionary game theory. But biological or cultural evolution is not a phenomenon on the level of the individual. It requires a population. The 'evolutionary' players of Press and Dyson don't evolve but adapt. With their splendidly 'mischievous' extortionate strategies, Press and Dyson contribute to classical game theory, by considering two players who grapple with each other in a kind of mental jiu-jitsu. The leverage afforded by zero-determinant strategies offers a splendid new arsenal of throws, locks, and holds.

Which of these strategies can flourish in an evolutionary setting is less clear. Being successful, in this context, feeds back at the population level. It means that more and more players will act like you, be they your offspring or your epigones. Thus you are increasingly likely to encounter your own kind. If your 'extortionate' strategy guarantees that you do twice as well as your opponent, and your opponents' strategy guarantees that she does twice as well as you, this only means that both get nothing. The only norm which is not self-defeating through population dynamics requires players to guarantee each other as much as themselves. We are then back to Tit For Tat. Press and Dyson are perfectly aware of this, of course. In a nutshell, they have uncovered a vast set of strategies linking the scores of two players deterministically (as TFT does), but asymmetrically (unlike TFT). This enriches the canvas of individual interactions, but not necessarily the range of outcomes open to evolving populations.

[1] M.A. Nowak, M.C. Boerlijst, K.Sigmund, Equal pay for all prisoners, AMS Monthly 104 (1997) 303-307.

[2] K. Sigmund, The Calculus of Selfishness, Princeton UP, Princeton, New Jersey (2010).