BROOKS: The thing that puzzles me is that we've got all these biological metaphors that we're playing around with - artificial immunology systems, building robots that appear lifelike - but none of them come close to real biological systems in robustness and in performance. They look a little like it, but they're not really like biological systems. What I'm worrying about is that perhaps in looking at biological systems we're missing something that's always in there. You might be tempted to call it an essence of life, but I'm not talking about anything outside of biology or chemistry.

A good analogy is the idea of computation. Once Turing came up with a formalism for computation we were able to make great progress fairly quickly. Now if you take any late 19th-century mathematicians, you could explain the fundamental ideas of computation to them in two or three days, lead them through the theorems, they could understand it and they wouldn't find it mind boggling in any way. It follows on from 19th-century mathematics. Once you have that notion of computation, you are able to do a lot with it. The question then is whether there is something else, besides computation, in all life processes? We need a conceptual framework such as computation that doesn't involve any new physics or chemistry, a framework that gives us a different way of thinking about the stuff that's there. Maybe this is wishful thinking, but maybe there really is something that we're missing. We see the biological systems, we see how they operate, but we don't have the right explanatory modes to explain what's going on and therefore we can't reproduce all these sorts of biological processes. That to me right now is the deep question. The bad news is that it may not have an answer.

JB: How are you exploring it?

BROOKS: You can take a few different models. You can try to do it by analogy, you can try to see how people made leaps before and see if you can set yourself up in the right framework for that leap to happen. You can look at all the biological systems, look at different pieces that we're not able to replicate very well and look for some commonality. Or you can try to develop a mathematics of distributed systems, which all these biological systems seem to be. There are lots of little processes running with no central control, although they appear to have central control. I'm poking at all three.

JB: I've very little use for the digital technology metaphor as related to the human body.

BROOKS: The fact that we use the technology as a metaphor for ourselves really locks us into the way we think. We think about human intelligence as these neurons with these electrical signals. When I was a kid the brain was a telephone switching network, then it became a digital computer, then it became a massively parallel digital computer. I'm sure there's a book out there now for kids that says the brain is the World Wide Web, and everything's cross linked.

JB: In 1964 we used to talk about the mind we all share, the global utilities network.

BROOKS: We get stuck in that mode. Everyone starts thinking in those terms. I go to interdisciplinary science conferences and neuroscientists get up and say the brain is a computer and thoughts are software, and this leads people astray, because we understand that technical thing very well, but something very different is happening. We're at a point now where we're limited by those metaphors.

JB: How do you get beyond thinking about it that way?

BROOKS: The best I can hope for is to get another metaphor that leads us further, but it won't be the ultimate one either. So no, in no sense are we going to get to an absolute understanding.

JB: Do you have any hints as to what might be the way to go?

BROOKS: Inklings, but it's still a question—I don't have the answer. I don't know the next step. For the last 15 years I've been plodding along and doing things based on these metaphors—getting things distributed, and not having central control, and showing that we can really do stuff with that, we can build real systems. But there's probably something else that we're missing. That's the thing that puzzles me now and that I want to spend time figuring out. But I can't just sit and abstractly think about it. For me the thing I need to do is keep pushing and building on more complex systems, and then try to abstract out from that what is going on that makes those systems work or fail.

JB: Talk about what you're doing.

BROOKS: The central idea that I've been playing with for the last 12-15 years is that what we are, and what biological systems are, is not what's in the head; it's in their interaction with the world. You can't view it as the head, and the body hanging off the head, being directed by the brain, and the world being something else out there. It's a complete system, coupled together. This idea's been around a long time in various forms—Ross Ashby, in Design for a Brain in the '50s—that's what the mathematics he was trying to develop was about. Putting that in the digital age has led to being able to get machines to do things with very little computation, frighteningly little computation, when we have our metaphors of digital computers. That we're claiming and showing that we can do things without lots of computation has gotten a lot of people upset, because it really is a mixture of computation and physics and placement in the world. Herb Simon talked about this back in The Sciences of the Artificial, in 1966, or '68—his ant walking along the beach. The complexity of the path that the ant takes is forced on it by the grains of sand, not by the ant's intelligence. And he says on the same page, the same is probably true of humans—the complexity of their behavior is largely determined by the environment. But then he veers off and does crypto-arithmetic puzzles as the key to intelligence.

What we've been able to do is build robots that operate in the world, and operate in unstructured environments, and do pretty well, because they use whatever structure there is in the world to get the tasks done. And the hypothesis is that that's largely what humans are; that humans are not the centralized controllers. We're trying to implement this, and see what sorts of behavior we can get. It's like Society of Mind, which is coming top-down, and we're coming bottom-up, building with pieces and actually putting them together.

JB: How do you define the word "robot"?

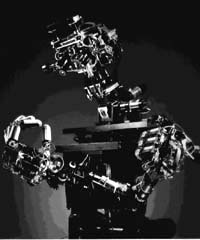

BROOKS: I mean a physical robot made of metal, not a soft-bot. Most recently I've been working on Cog, a humanoid robot which has human size, form, and sits and acts in the world. There are a few curious things that have come out of that which I didn't quite expect. One is that the system, with very little content inside it, seems eerily human to people. We get its vision system running, with its eyes and its head moving, and it hears a sound and it saccades to that, and then the head moves to follow the eyes to get them back into roughly the center of their range of motion. When it does that, people feel it has a human presence. Even to the graduate students who designed the thing, and know that there's nothing in there, it feels eerily human.

The second thing is that people are starting to interact with it in a human-like way. They will lead it on, so it will do something interesting in the world. People come up and play with it. And the robot is now doing things that we haven't programmed it to do; not that it's really doing them. The human is taking advantage of those little pieces of dynamics and leading it through a series of sub-interactions, so that to a naive observer it looks like the robot is doing a lot of stuff that it's not doing. The easiest example of this is turn-taking. One of my graduate students, Cynthia Ferrell, who had been the major designer of the robot, had been doing something with the robot so we could videotape how the arms interacted in physical space. When we looked at the videotape, Cynthia and it were taking turns playing with a whiteboard eraser, picking it up, putting it down, the two of them going back and forth. But we didn't put turn-taking into the robot, it was Cynthia pumping the available dynamics, much like a human mother leads a child through a series of things. The child is never as good as the human mother thinks it is. The mother keeps doing things with the kid that the kid isn't quite capable of. She's using whatever little pieces of dynamics are there, getting them into this more complex behavior, and then the kid learns from that experience, and learns those behaviors. We found that humans can't help themselves; that's what they do with these systems such as kids and robots. The adults unconsciously put pieces of the kid's or robot's dynamics together without thinking. That was a surprise to us - that we didn't have to have a trained teacher for the robot. Humans just do that. So it seems to me that what makes us human, besides our genetic makeup, is this cultural transferral that keeps making us human, again and again, generation to generation.

Of course it's involved with genetics somehow, but it's missing from the great apes. Naturally raised chimpanzees are very different from chimpanzees that have been raised in human households. My hypothesis here is that the humans engage in this activity, and drag the chimpanzee up beyond the fixed point solution in chimpanzee space of chimpanzee to chimpanzee transfer of culture. They transfer a bit of human culture into that chimpanzee and pull him/her along to a slightly different level. The chimpanzees almost have the stuff there, but they don't quite have enough. But they have enough that humans can pull them along a bit further. And humans have enough of this stuff that now a self-satisfying set of equations gets transferred from generation to generation. Perhaps with better nurturing humans could be dragged a little further too.

JB: Let's talk about your background.

BROOKS: I have always been fascinated by building physical things and spent my childhood doing that. When I first came to MIT as a post-doc I got involved in what I call classical robotics, applying artificial intelligence to automating manufacturing. My earlier Ph.D. thesis was on getting computers to see 3-dimensional objects. At MIT I started to worry about how to move a robot arm around without collisions, and you had to have a model, you had to know where everything was. It built up more and more mathematical structures where the computations were just getting way out of hand - tons of symbolic algebra, in real time, to try and make some decision about moving the fingers around. And at some point it got to the point that I decided that it just couldn't be right. We had a very complex mathematical approach, but that couldn't be what was going on with animals moving their limbs about. Look at an insect, it can fly around and navigate with just a hundred thousand neurons. It can't be doing these very complex symbolic mathematical computations. There must be something different going on.

I was married at the time to a woman from Thailand, the mother of my three kids, and I got stuck in a house on stilts in a river in southern Thailand for a month, not allowed out of the house because it was supposedly dangerous outside, and the relatives couldn't speak a word of English. I sat in this house on stilts for a month, with no one talking to me because they were all gossiping in Thai the whole time. I had nothing to do but think and I realized that I had to invert the whole thing. Instead of having top-down decomposition into pieces that had sensing going into a world model, then the planner looks at that world model and builds a plan, then a task execution module takes steps of the plan one by one and almost blindly executes them, etc., what must be happening in biological systems is that sensing is connected to action very quickly. The connectivity diameter of the brain is only five or six neurons, if you view it as a graph, so there must be all these quick connections of sensing to action, and evolution must have built on having those early ones there doing something very simple, then evolution added more stuff. It didn't take it all apart at each step and rewire it, because you wouldn't be able to get from a viable creature to a viable creature at each generation. You can only do it by accretion, and modulation of existing neural circuitry. This idea came out of thinking of having sensors and actuators and having very quick connections between them, but lots of them. As a very approximate hand waving model of evolution, things get built up and accreted over time, and maybe new accretions interfere with the lower levels. That's how I came to this approach of using very small amounts of computation in the systems that I build to operate in the real world.
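[Editor's sketch, not from the interview: the inversion described above can be illustrated with a few lines of Python. This is only a simplified priority scheme under stated assumptions, not Brooks's actual robot code and not the full wiring of his layered architecture. The sensor fields, thresholds, behavior names, and the order in which layers are added are all hypothetical. Each layer maps sensing directly to action with no world model or planner, and a layer added later may override the ones beneath it.]

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Sensors:
    # Hypothetical readings, invented for this illustration.
    front_distance: float            # metres to the nearest obstacle ahead
    light_bearing: Optional[float]   # radians to a light source, None if unseen


@dataclass
class Command:
    forward: float  # forward speed
    turn: float     # turn rate


# A behavior looks at the sensors and either proposes a command or stays silent.
Behavior = Callable[[Sensors], Optional[Command]]


def cruise(s: Sensors) -> Optional[Command]:
    """Oldest, simplest layer: always drive straight ahead."""
    return Command(forward=0.5, turn=0.0)


def turn_toward_light(s: Sensors) -> Optional[Command]:
    """Later layer: steer toward a light when one is visible."""
    if s.light_bearing is not None:
        return Command(forward=0.3, turn=s.light_bearing)
    return None


def avoid(s: Sensors) -> Optional[Command]:
    """Latest layer: stop and turn away when something is close."""
    if s.front_distance < 0.3:
        return Command(forward=0.0, turn=1.0)
    return None


# Layers in the order they were accreted. Nothing below is rewired when a
# new layer is appended; the new layer only interferes when it has output.
LAYERS: List[Behavior] = [cruise, turn_toward_light, avoid]


def control_step(layers: List[Behavior], s: Sensors) -> Command:
    """Return the command of the most recently added layer that speaks up."""
    for behavior in reversed(layers):
        command = behavior(s)
        if command is not None:
            return command
    raise RuntimeError("no layer produced a command")
```

[Calling `control_step(LAYERS, Sensors(front_distance=0.1, light_bearing=0.4))` returns the `avoid` command, because the newest layer interferes with the two below it. With a clear path and no light, the oldest layer still runs untouched.]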

JB: What happened after you had your epiphany?

BROOKS: We built our first robot, and it worked fantastically well. At the time there were just a very few mobile robots in the world; they all assumed a static environment, they would do some sensing, they would compute for 15 minutes, move a couple of feet, do some sensing, compute for 15 minutes, etc., and that on a mainframe computer. Our system ran on a tiny processor on board. The first day we switched it on the robot wandered around without hitting anything, people walked up to it, it avoided them, so immediately it was in a dynamic environment. We got all that for free, and this system was operating fantastically better than the conventional systems. But the reaction was that "this can't be right!" I gave the first talk on this at a robotics seminar held in Chantilly, France, and I've heard since that Georges Giralt, head of robotics in France, and Ruzena Bajcsy, who at the time was head of the computer science department at the University of Pennsylvania, were sitting at the back of the room saying to each other, "what is this young boy doing, throwing away his career?" They have told me since that they thought I was nuts. They thought I'd gone off the deep end because I threw out all the mathematical modeling, all the geometric reconstruction by saying you can do it directly, connecting sensors to actuators. I had videotape showing the thing working better than any other robot at the time. But the reaction was, it can't be right. It's a parlor trick, this won't work anywhere else, this is just a one-shot thing.

JB: What do biologists think?

BROOKS: Largely the reaction from biologists and ethologists is that this is obvious, this is the way to do it, how could anyone have thought to do it differently? I had my strongest support early on from biologists and ethologists. Now it's become mainstream in the following weak sense. The new classical AI approach is, you've got this Brooks-like stuff going on down at the bottom, and then you have the standard old AI system sitting on top modulating its behavior. Before there was the standard AI system controlling everything; now this new stuff has crept in below. Of course, I say that you just have this new stuff, and nothing else. The standard methodology is you have both right now. So everyone uses my approach, or some variation, at the bottom level now. Pathfinder on Mars is using that at the bottom level. I want to push this all the way, and people are sort of holding onto the old approach on top of it, because that's what they know how to deal with.

JB: Where is this going to go?

BROOKS: Certainly these approaches are going to get out there into the real world, and will be in consumer products within a very small number of years. And it's going to come through the toy industry because that's already happening.

JB: What kind of consumer applications?

BROOKS: Things that appear frivolous. Let me give you an analogy on the frivolousness of things. Imagine you've got a time machine and you go back to the Moore School, University of Pennsylvania, around 1950, and they've spent 4 million dollars in four years and they've got ENIAC working. And you say, by the way, in less than fifty years you'll be able to buy a computer with the equivalent power to this for 40 cents. The engineers would look at you like you were crazy, this whole thing with 18,000 vacuum tubes, for 40 cents? Then if they asked you what will people use these computers for? You say "Oh, to tell the time." Totally frivolous. How could you be so crazy as to have such a thing of complexity, such a tool telling the time. It's the same thing in consumer products. What it relies on is getting a variety of components that you can plug together to rapidly build up many different frivolous products, by just adding a little bit of bottom-up intelligence. For instance, one component is being able to track where a person is. With that you can have a thing that knows where you are in the room and dynamically adjusts the balance in your stereo system for you. If you're in the room you always have perfect stereo. Or it's attached to a motor and it's a vanity mirror that follows you around in the bathroom, so it's always at exactly the right angle for you to see your face. For kids they can set up a guard for their bedroom such that when someone comes by it shoots them with a water pistol. Once the components are available there'll be lots of other frivolous applications. But that's what's going to be what's there and what people will buy.
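[Editor's sketch, not from the interview: the stereo example can be made concrete in a few lines of Python. Everything here is an assumption for illustration: the speaker coordinates, the `balance_gains` name, and the simple model in which sound amplitude falls off as one over distance. A person-tracking component is assumed to supply the listener's position; the function only turns that position into left and right gains.]

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def balance_gains(
    listener: Point,
    left_speaker: Point = (-1.0, 0.0),   # hypothetical speaker positions, metres
    right_speaker: Point = (1.0, 0.0),
) -> Tuple[float, float]:
    """Return (left_gain, right_gain), each in the range 0 to 1.

    Assumes amplitude at the listener is proportional to gain / distance,
    so making each gain proportional to its speaker's distance gives both
    channels the same level where the listener stands.
    """
    d_left = math.dist(listener, left_speaker)
    d_right = math.dist(listener, right_speaker)
    farthest = max(d_left, d_right)
    if farthest == 0.0:
        raise ValueError("listener and both speakers are at the same point")
    # The farther speaker plays at full gain; the nearer one is turned down.
    return d_left / farthest, d_right / farthest


# A listener standing nearer the left speaker gets the left channel reduced.
left_gain, right_gain = balance_gains((-0.5, 1.0))
```

[The same tracked position could just as well drive the mirror motor or the water pistol in the other examples; only the last step, from position to actuator command, would change.]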