Toward the end of this morning, we talked about what second generation forecasting tournaments might look like and how the focus might shift. We’re still concerned with accuracy, but we’re also going to be concerned with the probative value of the questions that we’re posing about the world and how we can learn to pose questions that have the potential to tip important policy debates one direction or another. That’s tricky because forecasting tournament questions have to be rigorously resolvable, which means they have to be micro. We want them to address big macro questions, so I proposed clustering as a methodology for doing that. There is some evidence that clustering will indeed work.

There are lots of additional things that should be said about forecasting tournaments. One is the question, what’s the most important takeaway to give someone who wants to become a better forecaster? One answer is, the ten commandments in Superforecasting do itemize what I see as the best practices of the best forecasters in the tournament. If you wanted that in a nutshell, that would be it.

There is a deeper issue here, and the philosopher Michael Polanyi raised it when he talked about the challenge of teaching people how to ride a bicycle. He noted the absurdity of trying to teach people how to ride a bicycle by giving them a tutorial in Newtonian mechanisms—mass force, acceleration, angles—because this would be utterly fruitless. To learn how to ride a bike, you need to get on a bike and start riding it.

There is some truth to that in the forecasting tournament realm. To learn how to make more granular probabilistic judgments of things you care about, you need to get into the tournament and start making probabilistic judgments of things you care about. That is the most direct way of doing it. I’m not saying that abstract tutorials are useless because I’ve already shown you that the training module we used, which took about an hour, did produce about a 10 percent improvement. I don’t think that’s the dominant long-term driver of performance. There are some abstract concepts that can help you extract the right lessons from experience more rapidly, but you have to have the experience.

Another issue that people raise that’s quite interesting has to do with the limits on predictability. Over lunch, Danny Kahneman brought up Persi Diaconis, the statistician and magician at Stanford, who has the seven shuffle rule: How long does it take for a perfectly organized deck of cards to become perfectly disorganized so there’s no information in it? It takes about seven shuffles.

How long does it take before there is no longer any reliable signal about the future? For example, we showed a receiver operating characteristic curve that suggested the superforecasters were able to see about as accurately 400 days out as regulars were about 80 days out. Seeing much further than that, we didn’t ask that in the IARPA tournament. What I know from earlier tournaments in which we asked questions about five years into the future, is that it’s very hard for anyone to do appreciably better than the dart-throwing chimpanzee. When they do better than that, they don’t do better than simple extrapolation algorithms. It’s very difficult.

There are limits on predictability. What we’ve shown in the IARPA tournament is the temporal domain within which it seems possible to cultivate, train, and select people who can make a difference. You should expect that as you go further and further out into time, there is going to be some degradation. That doesn’t mean you shouldn’t still look for early warning indicators of scenarios, but there are going to have to be rolling early warning indicators; they’re going to have to be continually updated from year to year. I still think that’s a good way to go, but we do have to come to existential terms with the limits of our foresight.

There was one other thing that Danny mentioned over lunch that I thought was wonderful. It was one of my favorite studies in psychology. He was remembering a fire hydrant version of it, I was remembering a Dalmatian dog version of it: Bruner and Potter. The former Director of the CIA felt it was a direct parallel to a CIA analyst in East Germany, their judgments. The idea is that you show people an ambiguous stimulus that gradually takes on more and more information. With a Dalmatian dog, you’d see little splotches, and then it would coalesce into a dog.

Some people start from the beginning where there’s hardly anything there and it gradually builds up. Other people start in the middle. It’s already built up halfway, maybe eight steps in a fifteen-step sequence. Which group is going to be faster at recognizing the shape of the dog? Would it be the people who started at zero and saw the patches gradually build up from there? Or would it be people who started at step eight where there is some degree of pattern already there?

The answer to that question is, you’re better off having had less exposure to information because you’ve created a lot of misleading stories about what’s going on early on. It’s just too early to figure out. You get locked into explanatory lock-in, or story lock-in, and your accuracy is degraded. Experience is worse. John McLaughlin made the observation that when he was Deputy Director of the CIA, the analysts who were quickest to recognize that East Germany was disintegrating were the people who were new to the case; the old veterans were the slowest. He said that there were many other examples he could think of inside the agency that took that form. There’s a good deal of psychological truth to that idea.

Stewart Brand: Familiarity breeds blindness?

Daniel Kahneman: Familiarity with inferior information.

Tetlock: We talked a little bit about the National Intelligence Council and the mandate they have, where every fifteen to twenty years they’re supposed to do these projections. The Global Trends 2030 is the most recent one. I’m going to talk a little bit about what is in Global Trends 2030 and the efforts they make to anticipate the unanticipatable, namely, black swans. The term “black swan” is an interesting one. It causes a certain amount of semantic conceptual confusion.

What you have are swans of varying degrees of grayness, and when you look at the black swans that they generate—on slide sixty-six—it’s a usual suspect list. Sitting around this room, we would probably generate something very similar to this. What are the black swans? Pandemics, climate change, European Union collapse, China collapse, reformed Iran, nuclear terrorism, cyberattacks, solar storms. These are pretty familiar black swans. They’re grayish swans.

The black swan game does require you to do something that is almost the opposite of what our superforecasters try to do in the tournaments, which is that you don’t care about your false positive rate anymore. You just throw it away, you take it to town.

What kind of things would a real black swan generator generate?

I have a little list here on slide sixty-eight of things that are more genuinely black swan-ish, but they’re so black swan-ish they’re going to make you laugh: A conclusive demonstration of parallel universes, leaps in gene-splicing technology (eugenics on steroids—average IQ of children in China in 2050 is 180—at least in China because the Chinese don’t have our ethical reservations about this particular type of technology), leaps in anti-aging treatments (upper-bound closer to 200), new game theoretic means of adjudicating disputes, discovery of another advanced civilization in the Milky Way, ICBMs become obsolete, new Ice Age. They’re things that strike us pretty much as preposterous.

When you look at the efforts to generate black swans going back before the term “black swan” had even been coined, I mean, the Rand Corporation has been doing this for many decades. You look at their reports from the 1960s, bringing together the best and the brightest to anticipate what’s going to happen, and you find they predicted things like an accidental U.S.-Soviet nuclear war, a Maoist invasion of Southeast Asia, a single cure for cancer, permanent lunar bases, all by now. But they missed things like the rise of China, the collapse of the USSR, Islamo-Fascism, Hubble, the Large Hadron Collider, the Internet, nanotechnology. We missed a lot of stuff, things that people in the 1960s would probably regard as almost as bizarre in some cases as some of the things I just listed.

Obviously, when you’re talking about events that have probabilities of .000001 or lower, it’s very hard to measure how well-calibrated people are, or how discriminating they are in their probability judgments. What can you do with black swans? Nassim Taleb goes toward the antifragilization solution. Are there things you can do within a probabilistic accuracy framework to cope? There are things that we have both a moral and intellectual obligation at least to explore. We should think about early warning indicators of whether to run scenario trajectories toward particular classes of black swan-ish events. We could also think about coherence indicators. Even though we can’t assess the accuracy of people’s judgments of extreme low probability events, we can at least assess how coherently they’re assessing low probability events. We’ll just stipulate that coherence is a necessary but not sufficient condition for accuracy.

Robert Axelrod: Why is this necessary?

Tetlock: For example, if I were to ask you how likely it is in the next week that you’re going to be paralyzed in a car accident, then I asked you how likely in the next year and the probabilities were the same, that would be a suggestion that your judgments are …

Axelrod: It suggests that they’re not optimal.

Tetlock: Yes.

Axelrod: I agree with that, but it’s not necessary. I could still be pretty good.

Tetlock: I would suggest that what you would want from forecasters, even though you can’t directly assess the accuracy of people coping with black swan-ish events, you do at least want them to become more coherent.

Dean Kamen: Define coherent for this conversation.

Tetlock: The likelihood of a subset should not be greater than the likelihood of the set from which the subset has been derived.

Kahneman: Consistent with the basic axioms of probability theory.

Axelrod: The question is, if you force people or dramatically nudge them in the direction of coherence, will they get better? The assumption is that they will.

Tetlock: It’s a reasonable thing to try. At any rate, I don’t think there is a magic bullet solution for this, but I would say that we shouldn’t think about black swans as being a categorical phenomenon. We should think of it as a continuum of varying degrees of grayness insofar as we’re able to, through skillful question cluster generation, create early warning indicators of certain categories of black swan-ish events—outcomes. That’s a useful thing to do. The precautionary principle is problematic because it renders us too vulnerable to worst case scenario imaginings.

Brand: One thing that’s important to say about the things that are seemingly impossible—really black swans—is that there are so many of them out there. There’re the things that you deem probable and things that you would deem possible. Scenarios always have to stay within plausibility. Reality has no such restraint. In the reality spectrum, there’s no end of things that are clearly impossible. There are so many of them that, statistically, ones that we can’t even name are going to be part of what happens in this century. That’s one of the reasons that long-term forecasting has problems, because the other story about black swans is that they’re totally unexpected and they’re consequential.

Rodney Brooks: Just for the record, I want to point out that in Australia all swans are black.

Tetlock: Before we move from forecasting and thinking about possible futures to thinking about possible pasts and historical counterfactuals, is that a substantial interest to IARPA—a program they’re going to initiate fairly soon in some form—is going to be a competition involving humans, machines, and hybrids. In chess, they call them “centaurs” in which humans and advanced chess programs play together against other humans.

IARPA does have a deep interest in this, and psychologists, going all the way back to 1954 and a book that Paul Meehl wrote—Clinical versus Statistical Prediction—have had an interest in this. A dominant view in psychology for a number of decades—it may still be the dominant view—is that when you can construct a statistical model that mimics the queue utilization policy of the human judge, the statistical model will perform as well as or better than the human judge virtually always.

The model of the person either ties the person or outperforms the person, which is interesting. In fact, one of the scriptures that emerged in that literature is when you have a human being who has a model, don’t let the human being tweak it. A human being will simply make it worse. Not all meteorologists and macroeconomists agree with that. There have been various efforts in the last decade-plus to demonstrate that there are conditions under which well-informed experts can piggyback on models and do better with models. IARPA is particularly interested in this, so they don’t like the pessimistic Paul Meehl view. They’re looking for ways in which humans and machines can be more than the sum of their parts.

Brooks: I just want to come back to the black swan for a minute. The black swan events here mostly are science and technology, the suggested ones. Maybe that’s because we don’t expect human behavior to fundamentally switch in some ways.

Tetlock: The radical embrace of forecasting tournaments, for example. We do politics with forecasting tournaments. That would be a black swan event.

Brooks: I’m just running open loop here. We look back over the last 150 years, quantum mechanics was a black swan; no one expected quantum mechanics. Fission, as a technology, was a black swan. Dark matter is a black swan—unexpected. How often do black swans happen in physics? Has it been constant over history? Is it a function of how many scientists there are? Can we have a meta theory of black swans that is going to be more physics or less physics black swans? We’re worrying about certain things in society, if there’s a high probability there’s going to be something completely changing...

Margaret Levi: That comes back to the discussion we were having about Moore’s Law, whether it’s a law or not. Something happens that a whole bunch of people get on the bandwagon about, which came out of wherever it came out of, and suddenly there is a huge shift in behavior that you couldn’t have easily predicted before because you don’t know this fact is going to happen. We see that in politics. We see the Reagan-Thatcher Revolution changes mindsets.

Tetlock: That’s not quite like dark matter or quantum mechanics.

Levi: They’re not black swans in the same way, but they still are these huge shifts.

Rory Sutherland: Same-sex marriage would have been, in the 1990s.

Levi: There are a bunch of them once you start thinking that way.

Tetlock: Same-sex marriage is an amazingly fast transformation by sociological standards.

Levi: So is the Thatcher Revolution, just a switch almost on a dime.

Tetlock: Yes. But same-sex is less dramatic because there have always been free-market conservatives. Same-sex marriage was an extension of civil liberties to a domain it hadn’t been extended to before.

Levi: The point is not about the particular ones but about the phenomenon of these things that come in and change the way people think, and there is a continuum of them.

Tetlock: If I had inserted into my black swan list there, and not just parallel universe stuff, but I also had inserted something like the end of religion by 2100. Karl Marx came fairly close to saying something like that in the 19th century.

Levi: He did say something like that.

Tetlock: A number of other social scientists have expressed similar sentiments—the end of ideology, the end of religion. Forecasting tournaments are in some sense an idealistic effort to reduce the influence of ideology and make people more evidence-oriented. It’s a good point that it’s very difficult for us human beings to conceive of black swans that involve mutations of human nature.

D.A. Wallach: Looking at all of those cases, it’s maybe a mistake to think of them as an instantaneous pivot. The civil rights movement, or gay marriage, or any of these things are built on a real foundation. It’s almost as if you have all these constituent precursors that enable one switch to flip, and it looks like it happened in an instant.

Tetlock: That’s right.

Wallach: You could predict black swans by asking what things are already arrayed in such a way that a single factor can have a massive impact?

Levi: Quantum mechanics has that characteristic. People were building up to that.

Tetlock: I wanted to leave you with the impression that I am optimistic about the potential utility of forecasting tournaments to improve how we conduct high stakes policy debates. As we move into this next part of the presentations with counterfactual history, the imaginary control conditions, and policy debates, things are going to take a somewhat dark turn. That doesn’t mean that things are hopeless, but it does mean that there are some deep difficulties here that we need to wrestle with.

There's a picture of two people on slide seventy-two, one of whom is one of the most famous historians in the 20th century, E.H. Carr, and the other of whom is a famous economic historian at the University of Chicago, Robert Fogel. They could not have more different attitudes toward the importance of counterfactuals in history. For E.H. Carr, counterfactuals were a pestilence, they were a frivolous parlor game, a methodological rattle, a sore loser's history. It was a waste of cognitive effort to think about counterfactuals. You should think about history the way it did unfold and figure out why it had to unfold the way it did—almost a prescription for hindsight bias.

Robert Fogel, on the other hand, approached it more like a scientist. He quite correctly recognized that if you want to draw causal inferences from any historical sequence, you have to make assumptions about what would have happened if the hypothesized cause had taken on a different value. That's a counterfactual. You had this interesting tension. Many historians do still agree, in some form, with E.H. Carr. Virtually all economic historians would agree with Robert Fogel, who’s one of the pivotal people in economic history; he won a Nobel Prize. But there’s this very interesting tension between people who are more open or less open to thinking about counterfactuals. Why that is, is something that is worth exploring.

When I did my early work on expert political judgment, one of the things I did was look at experts’ judgments of historical counterfactuals—possible paths as well as possible futures—and it turns out that judgments of possible pasts and possible futures are linked in interesting ways to each other. You could ask experts a question of what they did in the early ’90s about Reagan: If Ronald Reagan hadn’t been President of the United States in the 1980s, would the Soviet Union exist in the 1990s?

If you happen to be a conservative, the answer to that question is pretty obvious: probably so. If you happen to be a liberal, the answer to that question is pretty obvious: probably not. If anything, they think Reagan slowed down the transformation. These kinds of counterfactual disputes come up all the time at economic policy debates, foreign policy debates, virtually any policy debate has some kind of counterfactual dimension to it.

Beliefs about the Reagan counterfactual were so correlated with political ideology in that study that they were statistically interchangeable with ideology. They belonged as much in an ideology scale as any other item in an ideology scale.

The interesting thing about counterfactuals and ideology is they don’t typically feel imaginary. When people say things like, “I think if Reagan hadn’t been President, we’d still have a Soviet Union.” When someone says something like that, they feel it’s a factual assertion, not a counterfactual assertion. It’s very interesting the way imaginary worlds take on a tangible meaning in much political discourse.

Again, I’ve been influenced by Danny’s work on counterfactuals and close-call counterfactuals. There are two different aspects of counterfactual reasoning that are important to distinguish. Liberals and conservatives don’t disagree over whether Ronald Reagan was almost assassinated in 1981. That’s a close-call counterfactual. It’s not something that provokes a lot of disagreement. They don’t disagree over whether Hitler could have easily perished in the trenches of World War I.

There are lots of things they don’t disagree about on the antecedent side of counterfactuals. Those are all things that are readily, imaginably different. The other world feels close at hand. Where they do disagree is where they go from the imaginary antecedent—Reagan not being the President of the United States—to the consequence of whether the Soviet Union exists or not. Ideology infuses the causal linkage between antecedent and consequence. Because counterfactuals are counterfactual and nobody can visit these alternative worlds to check out whether or not there was a Soviet Union in the world in which Reagan wasn’t President, there’s a great deal of potential for ideological mischief. It’s as if you’re running experiments and you always get to invent the data in the control group. How fast would science advance under those conditions? It would slow things down.

Counterfactuals are problematic in many ways. We were talking a lot earlier this morning about mental models and explanations. Exploring counterfactual beliefs is a wonderful way to tease out the mental model that’s underlying your view of reality. For example, if I were to say to you, “If Hitler had died in World War I, World War II would never have occurred. There never would have been a Nazi regime, there never would have been a Holocaust, there never would have been World War II.” Or if I said to you, “If the Archduke hadn’t been killed in Sarajevo in June of 1914, then no World War I, and then no Bolshevik regime, and then no Nazis,” and that leads to a totally different 20th century. If I say things like that to you, some people are inclined to allow me to go off on this flight of counterfactual fancy and let history diverge further from the actual world. There is something I call “deviation amplifying second order counterfactuals” as you go further and further away from reality.

Other people are inclined to bring history back on track. They’re inclined to say, “If Hitler had died, some other right-wing guy would have taken over. He might have been equally nasty and we would have had events very similar to World War II, or World War I. It was a multipolar and unstable European system. There was bound to be a conflagration at some point or another.”

Those are counterfactuals that bring history back on track. Whether you prefer counterfactuals that let history stray far away from the observed world, or only let history temporarily go off track—almost everybody lets history temporarily go off the track because we have this deep intuition that things are mutable at the level of human beings. We know that human beings are quite fallible, and we know that little accidents are certainly possible.

The tendency for the believers in deviation attenuating counterfactuals is to restore history. That does tell us something quite interesting, not only about ideology, but about the causal frameworks that are guiding their reasoning.

Part 2

There is one bit of speculation that I’ll offer you. There is some truth in the earlier work on expert political judgment which is, the more open you are to plausible counterfactuals, the more open you are to possible ways in which the future could unfold.

Kamen: That has to be true because the alternative in the extreme would be the future is predestined and nothing we do can change it; we’re wasting our time sitting here. If you have an infinite amount of information, there’s nothing left to chance. Probability has no meaning if everything is deterministic. And if it is, it has to be equally symmetric going backward in time and forward. You can’t have one of those without the other.

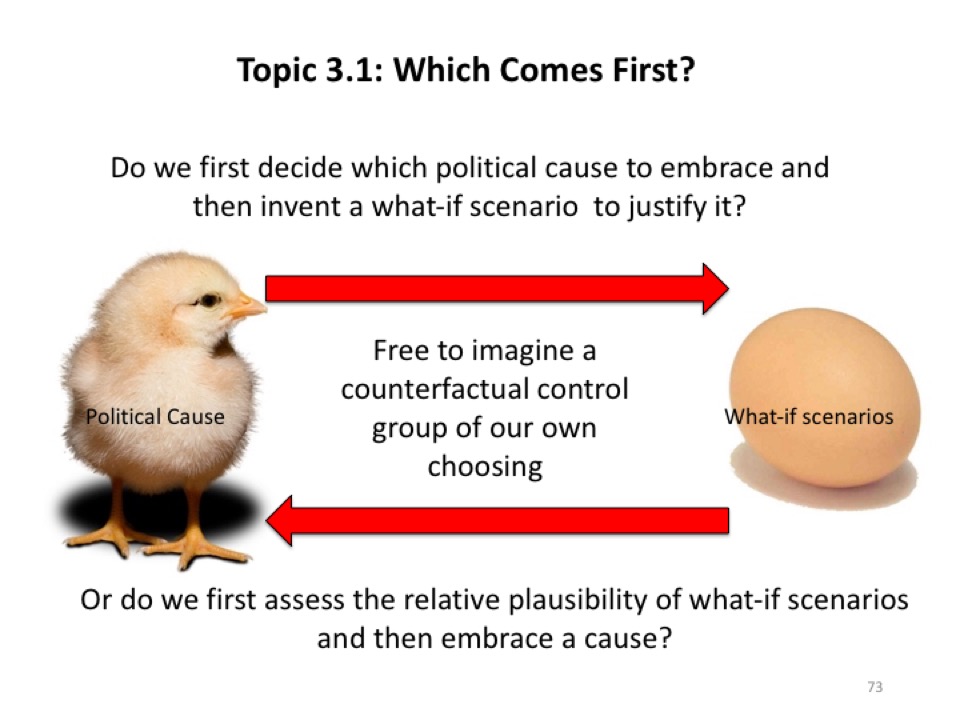

Tetlock: Somebody asked a question this morning about whether something was merely a justification or not. Maybe it was a question directed at Bob. You can argue that the reason that a conservative believes that the Soviet Union would still exist if Reagan had not been President is because that happens to justify an ideological world view they have. Or you can argue it’s part of the mechanism that drives the person to hold those views.

Slide seventy-three here—the chicken or the egg problem, which came first—do people invent counterfactuals to justify policy positions they want, so they’re basically secondary epiphenomenal smoke-to-fire sorts of things? Or are counterfactuals driving people toward particular world views?

Levi: Using counterfactuals to understand history and what happened in the past, there are methodologies to do that. They’re not perfect. That’s part of what Fogel did, that’s part of what we were doing with analytic narratives when we were together as fellows at the Center. You could figure out if another path was plausible or had been taken and what the testable implications of that would be, and you can figure out ways to differentiate between the causes of various kinds of paths through history. Is that kind of methodology at all transportable into thinking about predictive worlds?

Tetlock: Every time you want a multiple regression equation to predict which candidate you’re going to prefer in an election, that multiple regression equation has counterfactual entailments. It implies that if your income had been $50,000 a year lower, you’d be more likely to vote Democrat by .2. It’s woven into the fabric of statistical models of history.

Levi: The interesting issues with counterfactuals are what the consequences of different paths would be, right?

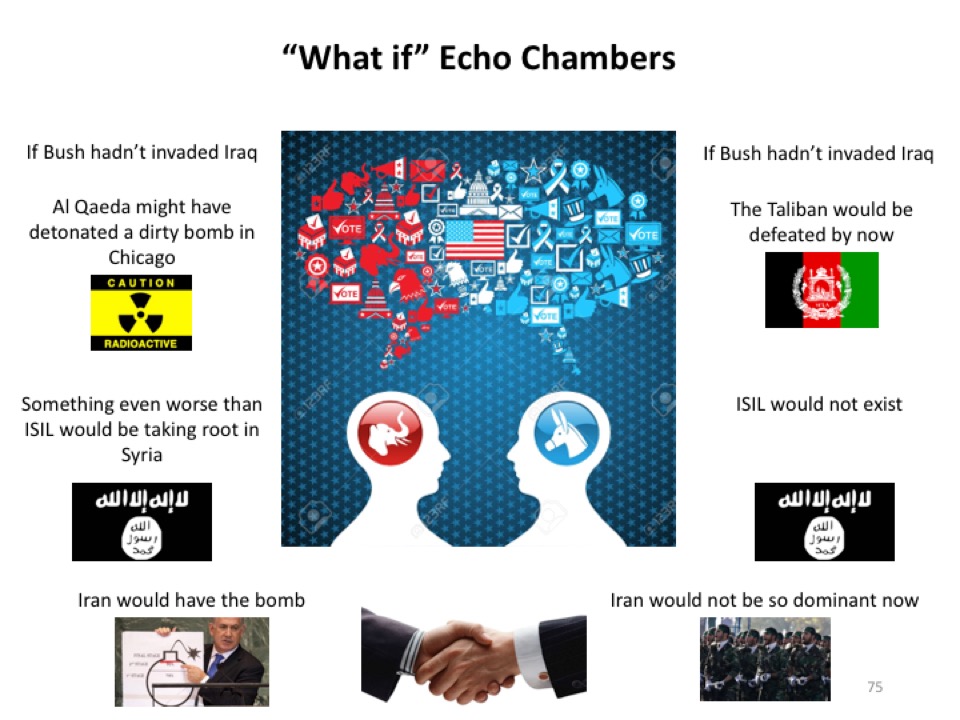

Tetlock: I think so. The reason I warned you about the pessimism of this section is this one here about the “what-if” echo chambers.

It’s the ease with which people invent counterfactuals to support their position vis-à-vis the invasion of Iraq. Look at it for yourself. It’s too depressing for me to look at so I don’t want to spend too much time on it, but it’s interesting. People do say these things, so they’re not being made up.

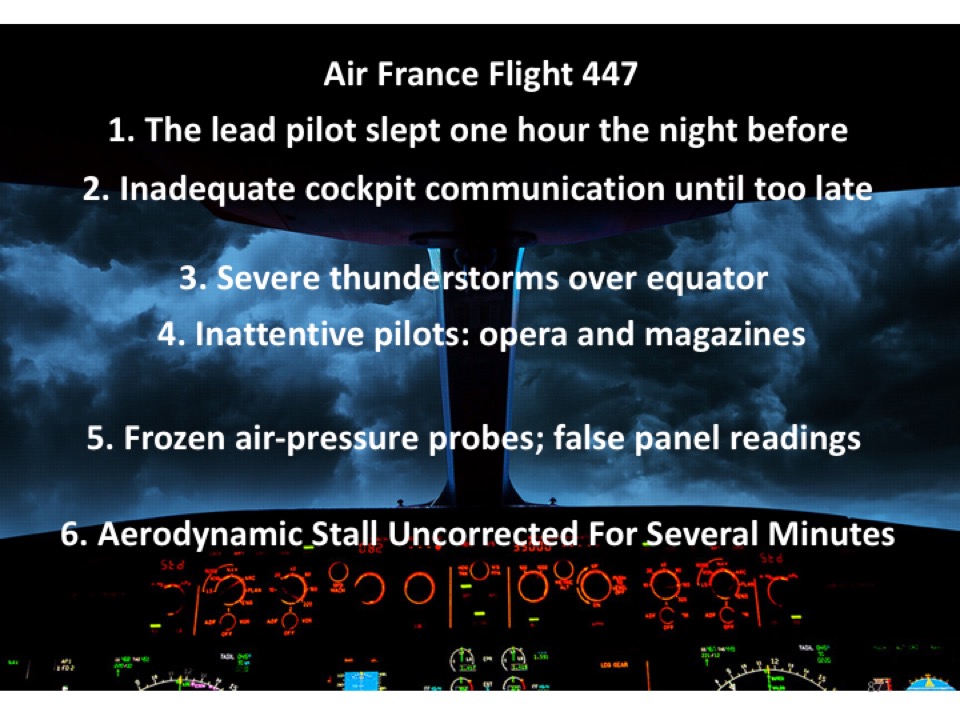

I wanted to turn to a couple of things a little further on, and one of them is the tragedy of Air France flight 447.

I should let Dean lead this conversation. I don’t know anything about flying airplanes, but there’s a whole confluence of factors responsible for the disaster of that flight listed here: the lead pilot slept one hour the night before, inadequate cockpit communication until too late, severe thunderstorms over the Equator, inattentive pilots listening to operas and reading magazines, there was frozen air pressure probes and false panel readings, and there was an aerodynamic stall that was uncorrected for several minutes. You combine all of those things and you get the death of 250-plus people in the mid-Atlantic.

There are various ways of thinking about this. The bottom line is, and this comes back to our discussion about stories and prediction. The NTSB, which does these careful and analytical postmortems on accidents, can do an exquisitely good job of explaining why these crashes occur, but it can’t even come remotely close to predicting them. There’s a radical disjuncture between what’s possible from an explanatory point of view and what’s possible from a predictive point of view. It’s interesting from that perspective.

Axelrod: They can, for example, say that in this model airplane the door isn’t constructed properly, so that should be fixed. Implicit in that is the prediction that if the door is fixed, they’ll have fewer crashes of that model of airplane. Their track record, by my understanding, is quite good. They have reduced all kinds of flaws in airplanes.

Sutherland: In this case, they were replacing the pitot tubes because there were known to freeze over. They just hadn’t gotten around to replacing the pitot tubes on this particular aircraft.

Axelrod: That is an implicit prediction that if you keep replacing the pitot tubes, you’ll do better.

Tetlock: There’s a very well-developed body of applied physics relevant to aviation. It’s full of numerological propositions that lead to if-then predictions that can be tested in laboratory or controlled settings, and they’re going to prove to be very accurate in simulations and so forth. All that is true. That’s a well-defined body of science, but when you mix that into the messy real world with messy human beings running the airplane, the potential for predicting specific accidents just isn’t possible.

Axelrod: This gets back to the question that we discussed earlier about the relationship between prediction and decision and quality of outcome. They can’t predict that the next airplane crash will take place in three years and two days, I grant you that, but they can predict that if you keep an eye on the pitot tubes, you’ll have fewer accidents. That’s what we want to know. By doing an analysis of the causation, they do provide guidance for decisions that are useful.

Tetlock: Yes. That’s very much in the spirit of what I am proposing with question clustering and decomposition of big questions into smaller resolvable indicators. The pitot tube indicator is a good example, just as food riots in a southern Chinese city might be a good example, future Chinese domestic instability, reduced traffic into North Korea as a sign that China is squeezing North Korea, those types of things.

Sutherland: There are other factors in the crash that are more embarrassing to deal with. There are cultural factors involved in that crash and interface design factors. You have two joysticks on the Airbus, when the autopilot quits, the plane enters what’s known as alternate mode where it doesn’t respond to inputs in the same way as it does normally because the flight computer is effectively bypassed.

Secondly, if you push the control sticks in opposite directions, the inputs cancel each other out, which was what led to the problem. It was interface design to a great extent, but that’s a much more difficult thing to fix. There is probably a tendency here where people attribute the causes of the problem to those elements which are easiest to rectify. It’s hard to change culture. It’s relatively hard to redesign the cockpit on an airbus completely whereas it’s easy to fix pitot tubes.

Kamen: You can go to the extreme to eliminate the academic discussion of the human factors by making one statement which is undeniable: As rare as all these accidents are, and they’re incredibly rare in a commercial aircraft, the ones that happen at night in bad weather are so overwhelmingly more common than good weather in daytime that any rational person looking at those two pieces of data would say, “I will never fly in meteorological conditions that aren’t ideal or at night.”

Instead of arguing about all of those servo connections, and pitot tubes, and different systems—they lost 34,000 feet before they knew they had a problem because everything was working just fine. If you clog up your pitot tubes, you don’t get ram air, you don’t get change in altitude. You can decide that there are all sorts of problems, but they’d have none of those problems if they weren’t flying in a thunderstorm at 45,000 feet at night. You could lump all of these things together, whether they’re human factors or everything else, and ask how many times is a multiengine aircraft involved in an accident in good weather. It’s virtually never, which is to say that no matter how small that other number is, it’s virtually all the crashes. The only ones that happen at daylight are ones because you had a microburst because there was a thunderstorm either ten miles ahead of you or ten miles behind you, like the one in Texas.

Sutherland: So the bravado of not avoiding the storm—it’s in the equator, isn’t it? You’ve got a particular zone.

Kamen: Anywhere where you’re near a thunderstorm, your probability, while it’s still low, is infinitely higher than if you’re not.

Tetlock: Looking at the accident in retrospect, you know the particular configuration of antecedents that experts say contributed directly or indirectly to the accident. Of that set of interlocking antecedents, you could say, “A subset would make me sufficiently worried, so I’m going to change my policy flying.” You’re not going to get on a commercial airliner when it’s a night flight and the weather is problematic.

Kamen: Personally, I’ll fly myself when I know those conditions are around because I will steer 100 miles north or south. I will do what I think is reasonable knowing that if you stay out of bad weather, you have almost no risk. The airlines, due to lots of things including the arrogance of most of these pilots, are not particularly good at aerodynamics, physics, meteorology, or anything else; they’re bus drivers with a big salary. I don’t want to be sitting in the back of an aluminum pit with a guy like that upfront when maybe he hasn’t slept, maybe he has another problem. That’s why I fly myself.

Axelrod: Individual flyers are many times more dangerous than a commercial passenger airliner.

Kamen: I was hoping somebody would bring that subject up.

Axelrod: Two-thirds of drivers feel they’re safer than average drivers, and probably 90 percent of general aviation flyers think that they’re better than average.

Kamen: That I think is true. They all do.

Axelrod: Therefore, you’re wrong in feeling that you’re safer flying yourself.

Kamen: I guess I would differ because I was making a point about under what conditions I would fly. That guy, even though he’s got a big 300,000-pound airplane with all these engines and a crew of three, he’ll fly within twenty miles of a thunderstorm, which is what they tell him to stay out of, and I will not.

You have to parse it down to not whether you think you have good skill or not, but do you have a set of metrics that you use? Unlike the somewhat arbitrary ones, you could take this large amount of data, look at every single accident you’ve seen and which ones are related to weather and which ones aren’t, and then say, “Why do we still have a set of rules like this?”

W. Daniel Hillis: I see some of the philosophical conundrums with counterfactuals, what I don’t see is what the consequences are of this discussion of how you use superforecasting, how you do superforecasting. What’s the consequences of this debate for what we do with this?

Tetlock: I see the counterfactual discussion as a sobering reminder of how easy it is to slip into theory-driven thinking about history that can structure your view of what did happen, what could have happened, and also structure your view of what could happen. The same underlying mental model is generating the counterfactuals and the condition of forecast.

Hillis: You’re suggesting that we do what differently?

Tetlock: Counterfactual reasoning, left to its own device, does tend to be pretty heavily ideological.

Hillis: Isn’t the basic idea of cause-and-effect based on counterfactual reasoning? Did anything cause anything in history?

Tetlock: It is, but the disciplines that have been able to make rapid progress in understanding cause-and-effect are the disciplines that understand why pitot tubes are crucial for maintaining an aircraft, but not for understanding why World War I occurred right after the assassination of the Archduke, or why Adolf Hitler happened to be associated with a concatenation of events mid-20th century. It’s a sobering lesson.

Hillis: So you would conclude to do what differently?

Tetlock: Here is one of the things we did back in 2005 in an effort to check hindsight bias: Hindsight bias is the tendency for people to have difficulty recalling what their prior predictions were. We set aside some forecasters whose task was to remember, without any counterfactual prompting, and another group of forecasters who were asked to think about alternative ways in which outcomes could have occurred. That counterfactual manipulation made them more aware of their past predictions. It debiased them.

It turns out that being susceptible to hindsight bias in that study was also associated with being more of a hedgehog and more biased. It all ties in in that fashion. It is a form of, I hate to use this expression from the 1960s, consciousness-raising. It’s a way of sensitizing us to some mental habits we all have. We’re doing it frequently, and we’re not aware of it, and it locks us into particular views of the future.

In aviation, you can take the various cause-and-effect components from applied physics and you can say, “This will lead to this, this will lead to that,” but when you get to the level of the full system with human beings operating in a complicated environment and reconstructing the accident, your ability to explain—which is formidable—confers no meaningful capacity to predict that particular real world event. It does, however, as Bob is pointing out, allow you to predict that if certain causal factors take on certain values, the risk of particular effects rises. Whether those effects will lead to an accident or not is another matter, but that’s just what happens when you can do experiments. It’s obvious you can’t do experiments with history, we don’t have time machines, but it’s salutary to think about.

Wael Ghonim: The Iraq one was much more complicated than the plane one because of the outcome. The Iraq one is still unknown. What would have happened if Iraq was not attacked? What is a framework you would do to assess the people who forecast? Let’s say we are having this now and we’re asking a bunch of superforecasters to come in and say, what would have happened if the U.S. did not invade Iraq? How would you assess the outcome?

Tetlock: Imagine just prior to the invasion of Iraq that you had superforecasters making predictions about what would happen if the U.S. invaded, and others making predictions about what would happen if the U.S. did not invade. Of the forecasters who are predicting on branch A, a subset of them correctly anticipate the deep sectarian conflicts that are going to follow with impressive accuracy, a subset of them do not. Would you take the subset that are more accurate on the observed branch of history and say that they were likely to be more accurate in their predictions on the counterfactual branch even though you can’t observe the counterfactual branch?

Ghonim: I don’t know. What would you do?

Tetlock: Well, I don’t think it’s meaningless. What would you do?

Axelrod: You could run the experiment, if you did ask people whether the United States would invade Iraq. Those that got that right and those that got that wrong could both be asked if there would be major sectarian conflict if they did invade Iraq, then you could see whether the people that made a prediction about the United States were more accurate about the prediction about Iraq’s future.

Kamen: If you’re saying to do that now and get the answer, isn’t that the whole point that he’s saying, because you have that bias?

Levi: You can’t do it now. It has to be preceding another event.

Axelrod: In this case, one prediction is about what the United States is going to do, and the other is about how the Iraqis are going to respond. Those are based on different kinds of information, different kinds of expertise. There wouldn’t be a lot of correlation, and the ones that got America wrong might be just as good about getting Iraq right. But if they were very similar, if they’re both about Iraq politics, then you expect the same people that got one right to get the other right.

Kahneman: I don’t know if this will help, but there is a word that hasn’t come up and that I feel is missing, and the word is “propensity.” We’re talking of systems, and systems have propensities to do things. This is something that we realize. It’s a broader notion in a way than cause-and-effect; it’s less discreet. You can recognize that improving the design of a plane will reduce the propensity. We were talking about propensity this morning with intent. An intent is a propensity. A strength measure, or the explosiveness of a situation, or the fragility of a system, all those are very well-described in terms of propensities, and counterfactuals are about propensities. I have always thought that there is something missing that we ought to be developing while thinking about propensity because the concept is very intuitive on everything that I’ve said here.

Tetlock: Whereas probability is not so intuitive.

Kahneman: Probability is much harder. To discuss propensities is a lot easier. Maybe that’s something that’s very premature, but I have the feeling that if we tried another angle to think about a causal system rather than only probability and causal systems, there is that intermediate notion that could be very useful. Just a thought.

Tetlock: You can run experiments in which you describe to people, say, that if the Cuban missile crisis resolved on October 29th, 1962, and you ask people, at what point did it become inevitable that the crisis would resolve peacefully? You could plot a propensity or a probability function showing how the inevitability of peace gradually rose until it became a done deal, it was going to be resolved peacefully. Or you can ask them the mirror image question, at what point did alternative, more violent endings to the Cuban missile crisis become impossible? Those should be mirror images of each other, and they are roughly. When they do these exercises, you can manipulate whether or not some of them are asked to think about alternative ways the Cuban missile crisis could have resolved violently.

You could describe to them ways in which a Soviet submarine and a U.S. destroyer could have collided. There are various points in the Cuban missile crisis where things could have escalated out of control, and you can ask them to think about those. When you do, the probabilities get out of control. They add up to way more than one. People see too much likelihood in too many scenarios. This can happen thinking backward in time, as in the Cuban missile crisis situation, but it can also happen thinking forward in time when you think about multiple futures and scenarios.

What I’m trying to emphasize here is that there are these intimate connections between how we think about the past and how we think about the future. They are derived from common mental models, essentially of causal propensities, and the cognitive biases that tend to contaminate thinking about possible pasts also infiltrate our thinking about the future.

Lee: I’m still ruminating over what Dean was saying earlier about the Air France flight, there is something about challenging assumptions that seems important in looking at counterfactuals. When I was thinking of what black swan event I would put, I thought, in 2030, we’ll discover that the Chinese have had working quantum computers for the past twenty years. That’s potentially a counterfactual, that there is an implicit prediction that the Chinese have working quantum computers that we will probably find out is not true, but the counterfactual is also somehow related to the black swan prediction. There is something about challenging assumptions in all of this that seems at least to touch the social process of forecasting.

Tetlock: Right. The Cuban missile crisis counterfactual could conceivably have culminated in World War III, so it could have gated us into a radically different world. There are other kinds of counterfactuals that alter other landscape features of our world that are disturbing, like imagining that the United States doesn’t successfully secede from Britain.

A series of counterfactual thought experiments that we ran on historians had to do with the rise of the West: At what point in history did it become inevitable that a relatively small number of Europeans would achieve geopolitical dominance? There again, you have lots of animated debates, including E.H. Carr’s line about sore losers. A very popular counterfactual in China right now is about the Admiral Zheng He and the fleet. Which dynasty was it? Was it the 14th century or the 15th century?

Sutherland: The fleet who went to Africa? Star Loft.

Tetlock: They dismantled the entire fleet, and it was a formidable fleet, and they had very considerable power projection capabilities, certainly much more formidable than the Spanish and Portuguese ships that were shortly thereafter starting to sail through the Pacific.

You can get people to think about ways in which China could have emerged, or ways in which Islam in the 8th century or the 15th century could have made more successful intrusions into Europe. These are counterfactuals that have a lot of cultural resonance in other parts of the world. E.H. Carr was dismissive, right? He called it the sore loser history, but they are popular and tenacious.

Axelrod: Restoring the caliphate is a powerful current example.

Kamen: Each of the things you’re talking about end up asking people to predict correctly whether we could imagine going back in time to some specific event that in itself is so specific you’re never going to get the advantage of doing it multiple times, which is inconvenient when you’re trying to use probability. But back to the airplane one, I was sitting there trying to think if there are certain kinds of events that have the same human-versus-other propensities that happen on a large enough scale, happen in different environments that are different enough to do multiple separate experiments but close enough—for instance, car accidents. There are 42,000 people that get killed every year pretty reliably, plus or minus a thousand in the last fifteen years. In one-third of all fatal car accidents in the United States, at least one of the drivers was drunk.

How can it be that we allow people to drink and drive? That’s way worse than “weather is mostly involved.” It’s insane. People have to drive and people can drink, but on what basis have we decided it’s your privilege to drink and kill somebody? I don’t know. Relatively recently, when they decided that people that text are even more likely to have accidents, there has been such a quick response that in most states now, if they catch you doing this, you’re in a big trouble. They won’t tell you—like they do to pilots—if you have anything to drink, you cannot fly. It’s not up to you to decide. If you had anything to drink, you can’t drive for eight hours; that’s the law. I don’t know how many people violate the law, but that’s the law.

The irony is that we all go to restaurants at night when we’re tired and have a glass of wine or two, and then get out in that rainy night and drive home. If you could get your experiment by saying find a place where that’s not legal, or find a place where they don’t drink at all—it’s a dry state or whatever—you ought to be able to construct models that were as if you could do your multiple models by finding different populations that meet the criteria of different hypotheses, then run your experiments in those different places.

Tetlock: I agree. You’re getting closer to the ideal laboratory experiment there.

Kamen: What I’m trying to do is give you a large enough set off criteria that you can effectively get the laws of large numbers to help you figure out whether your probabilities work or not.

Tetlock: Most policy debates unfold in a world where the law of large numbers does not give us a lot of leverage.

Levi: But there are some. I’m trying to think of cases which aren’t like accidents where there is an individual who may affect other individuals, but where there is a social and political—closer to some of your international effects. One of the things I think of that has large numbers is strikes. There have been lots of strikes or protests, in lots of places, lots of different contexts. They may not be as large numbers as accidents, I’m not sure, but there are those kinds of social phenomenon which should be susceptible to some kind of predictive capacity, and even if you can’t create a real control, you probably have some contextual variables that come pretty close to control.

Tetlock: I’m going to pose a couple of questions to you, and we can revisit them tomorrow morning. The U.S. intelligence community does not believe it’s appropriate to hold analysts accountable for the accuracy of their forecasts. It believes it’s appropriate to hold analysts accountable for the processes by which they reach their conclusions. It’s not appropriate to judge them on the basis of the accuracy of their conclusions when their conclusions are about the future.

Kamen: The operation was a complete success, but the patient died.

Tetlock: Yes, you could say that the analyst engaged in best analytical practices. They got it wrong, but they did everything right because this is a stochastic environment and people doing everything right, sometimes they’re going to get it wrong. We think it’s only fair to hold people responsible for things that are under their control. That’s the process. Let me give you a couple of other examples.

Public school teachers: is it appropriate to hold them accountable for student test performance, which is an outcome, or is it appropriate to hold them accountable for how they teach? Another example: corporate personnel managers—people who do hiring and firing—is it appropriate to hold them accountable for getting the numbers right on women and minorities? Outcome. Or is it appropriate to hold them accountable for the process by which they make hiring and firing decisions?

In each of these cases, you’ve got a distinction between process and outcome that ties into some of this earlier discussion about explanation and prediction and the degree to which they are or are not tightly coupled. If you believe that explanation and prediction were totally tightly coupled, would it make much of a difference to analysts whether or not they were being held accountable for process or outcome?

It’s late afternoon, so you don’t have to answer that question, but it is partly a matter of trust. Conservatives don’t trust public school teachers and their unions very much; they like accountability for test performance. Liberals are more inclined to defend them, and they don’t say there shouldn’t be some accountability, but they are more inclined to go for process. Liberals don’t trust corporate personnel managers to be nonsexist, nonracist, so they often prefer accountability for demographic outcomes, whereas conservatives are more inclined to say you should judge them by process, you shouldn’t try to force an outcome.

With intelligence analysts, it’s somewhat murky whether people break in favor process or outcome. It’s not a dichotomy; you also have hybrids. You have process and outcome hybrids. Many companies do embrace hybrids of various sorts. Are you interested more in superforecasters or are you interested more in superexplainers? If you’re interesting in superexplainers, you should presumably be incentivizing process for it. Would that be right, Danny?

Hillis: The intelligence community is pretty funny because it’s a machine that’s constructed to produce answers. The intelligence analysts are cogs in that machine, so they don’t have much choice of what information they get to analyze. Their job is to gestate information that they’re given to analyze and somehow refine that product and feed it up to the next level where it’s input to the next analyst. It’s fair in the case with the way that it’s constructed that all you can ask is that they did what they were supposed to do with the information that they got.

Tetlock: So you judge them by their analytical procedures.

Hillis: What happens is policymakers say, “We want to know these things,” and somebody analyzes the processing machine which vacuums up information and pushes it through the system and produces reports at the end. It’s a crazy way to do it if what you’re trying to do is inform decision making, but that is the way that it works.

Tetlock: In the fourth year of the IARPA tournament, we ran an experiment in which we manipulated whether the forecasters were accountable for their judgment process (not accuracy), for accuracy the way it normally is in a tournament, or for a combination of the two. I was quite interested in how the intelligence community reacts to that. Their view is that explanation is important to what they do, and they would even be willing to give up a few units of accuracy in order to get better explanations.

There does seem to be a tradeoff between the two when you incentivize people just to focus on process: “I don’t care if your predictions about Germany or Greece or China are right, I’m going to judge you on the basis of the quality of the explanations you offer for your forecasts.” We can come back later as to what exactly that means. It’s a hard thing to define.

The net effect is your explanations do get better on various metrics, but your accuracy goes down. The net effect of being purely outcome accountable is your explanations are not as good but your accuracy is better. And the net effect of hybrid is you’re in-between. You’re not as good as the process people on process, you’re not as good on accuracy as the outcome people; you’re in-between the two. This work would suggest there is some sort of tradeoff here. Should there be a tradeoff? In an ideal situation, surely, good explanations and good forecast, incentivizing them should be mutually reinforcing, not somehow distracting. But for reasons that I do not fully understand, the world doesn’t seem to work that way here.

Hillis: Part of it is because of the fear of the intelligence agency being over-responsive to the desires of the customer. It’s a funny system where you’re deliberately not given the feedback of customer satisfaction.

Tetlock: Right. We’re not talking about real analysts, we’re talking about our research subjects in the forecasting tournaments. I was talking about how they hold analysts accountable, and we wanted to do an experiment that would mimic how they hold analysts accountable, which is for process. If you mimic their accountability system in the forecasting tournament, the net effect is to degrade accuracy but improve explanations.

What does it mean to generate a better explanation? This would take us hours. One definition that’s pretty clear-cut is a better explanation is one that when you hand it to somebody else, it helps them make a better explanation. It’s like an assist in hockey. There’s an information value-added. The process people are generating better explanations in that sense. Even though it’s degrading their own accuracy, they are improving the accuracy of other people when their explanations are shared with others.

We were hoping in year four to create a special category of forecaster, superexplainers as well as superforecasters. Terry has done that. We do have a group of forecasters who are good at explaining, and we have a very small group who are good at both. But it’s unusual.

Ghonim: Is there a public interface where we could see what people are forecasting?

Tetlock: There was and there will be again.

Ghonim: We could have access to that?

Tetlock: I don’t know why not.

Hillis: The question is do you want to optimize the system for accuracy, or do you want to optimize the system for usefulness?

Tetlock: It’s interesting that there may be a tradeoff there.

Kamen: You have a good model for the kind of tradeoff you’re looking for. You can probably get data on it by looking at a system that’s got, unfortunately, millions of pieces of data—the U.S. court system. You started out by giving three or four examples of do we want to judge teachers by what the students produce.

Tetlock: Process or outcome, yes.

Kamen: Do we want to judge by such and such, well, we have things called judges in the courts. A way to restate your first hypothesis of tradeoffs is—the Founding Fathers had the great debates about do we want the rule of man or do we want the rule of law? Their theory was they had seen kings and they said, “As bad as law might be, it never gets it right. We’d rather have the rule of law so everybody knows if you jaywalk, you might get a ticket, but you’re not going to get shot.” They knew it was a pretty bad compromise because then they created a jury system. We have some pretty strong incentives in a tradeoff by which we have knowingly said, “We’re going to let a lot of people go free to avoid putting an innocent person in jail.” We don’t have a symmetric system by which it says “Be accurate as your highest authority.” We say “Beyond a reasonable doubt.” Just for your experiments, you could say if we change the thresholds between the rule of law and then you go to tell a story—the whole reason we have all these passionate lawyers is they go out and explain stories to try to get around the rule of law.

Tetlock: We’re not going to get to the bottom of this this afternoon because my blood sugar level is too low. The constitutional law professor of Barack Obama, Laurence Tribe, wrote a paper in 1971 in Harvard Law Review, called “Trial By Mathematics” in which he looks at exactly the question you are raising—treating the legal system as a forecasting system.

Kamen: Sounds like a good idea.

Tetlock: He says it would be it would be a bad idea to keep explicit score. It would delegitimize the system. You don’t want a specific tradeoff rule that we’re going to let nine guilty people off in order to avoid convicting one innocent one. You don’t want the false positive-false negative tradeoffs to be explicitly spelled out. You want vague verbiage. You want beyond a reasonable doubt. And that is rhetorical shock and awe. You want the masses to bow and to accept the legitimacy of the system; you don’t want them wondering, “Hey, why that number?” The legal system is not one that incentivizes, or is designed to, accuracy.

Kamen: That was my whole point. You would have an existing model to run your thesis. We have a system which has the two. I can optimize for accuracy or I can have some, according to pure logic, perverse incentive to say, “I will put stronger weight on never putting the innocent person away,” and then, within the constraints of your rule-based system—the rule of law—we then break in these people who are the storytellers to explain why they did what they did, which might have been beyond the bounds of law, are still appropriate behavior.

Tetlock: The last thing I’ll say about this is that when you talk to superforecasters about the Laurence Tribe article, “Trial By Mathematics,” they think it’s dumb. They really dislike it. They think it’s retrograde.

Kamen: Let them predict the outcome of trials. Give them the briefs.

Tetlock: They are quite fanatical scorekeepers. They believe in scorekeeping. I don’t know what the reaction of the audience is to the Tribe argument the way I’ve summarized it, and you can Google it and check it out for yourself, whether you think that’s a fair rendition of it. But assuming I have represented it reasonably accurately, how many of you would say that Tribe has a good point, or that Tribe is retarding progress of the legal system? Good point would be how many, Bob? How many would say it’s retrograde? How many have no idea?