SESSION ONE

Master Class 2007: A Short Course in Thinking About Thinking from Edge Foundation on Vimeo.

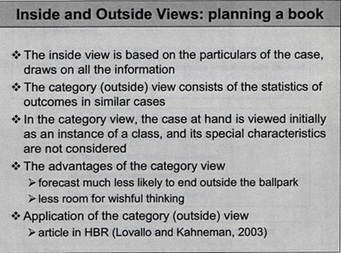

KAHNEMAN: I'll start with a topic that is called an inside-outside view of the planning fallacy. And it starts with a personal story, which is a true story.

Well over 30 years ago I was in Israel, already working on judgment and decision making, and the idea came up to write a curriculum to teach judgment and decision making in high schools without mathematics. I put together a group of people that included some experienced teachers and some assistants, as well as the Dean of the School of Education at the time, who was a curriculum expert. We worked on writing the textbook as a group for about a year, and it was going pretty well—we had written a couple of chapters, we had given a couple of sample lessons. There was a great sense that we were making progress. We used to meet every Friday afternoon, and one day we had been talking about how to elicit information from groups and how to think about the future, and so I said, Let's see how we think about the future.

I asked everybody to write down on a slip of paper his or her estimate of the date on which we would hand the draft of the book over to the Ministry of Education. That by itself, by the way, was something that we had learned: you don't want to start by discussing something; you want to start by eliciting as many different opinions as possible, which you then pool. So everybody did that, and we were really quite narrowly centered around two years; the range of estimates that people had—including myself and the Dean of the School of Education—was between 18 months and two and a half years.

But then something else occurred to me, and I asked the Dean of the School of Education whether he could think of other groups similar to ours that had been involved in developing a curriculum where no curriculum had existed before. At that time—I think it was the early 70s—there was a lot of activity in the biology curriculum, and in mathematics, and so he said, yes, he could think of quite a few. I asked him whether he knew specifically about these groups and he said there were quite a few of them about which he knew a lot. So I asked him to imagine them, thinking back to when they were at about the same state of progress we had reached, after which I asked the obvious question: how long did it take them to finish?

It's a story I've told many times, so I don't know whether I remember the story or the event, but I think he blushed, because what he said then was really kind of embarrassing, which was, You know I've never thought of it, but actually not all of them wrote a book. I asked how many, and he said roughly 40 percent of the groups he knew about never finished. By that time, there was a pall of gloom falling over the room, and I asked, of those who finished, how long did it take them? He thought for a while and said, I cannot think of any group that finished in less than seven years and I can't think of any that went on for more than ten.

I asked one final question before doing something totally irrational, which was: in terms of resources, how good were we at what we were doing, and where would he place us in the spectrum? His response I do remember, which was, below average, but not by much. [much laughter]

I'm deeply ashamed of the rest of the story, but there was something really instructive happening here, because there are two ways of looking at a problem: the inside view and the outside view. The inside view is looking at your problem and trying to estimate what will happen in your problem. The outside view involves treating your problem as an instance of something else, of a class. When you then look at the statistics of the class, it is a very different way of thinking about problems. And what's interesting is that it is a very unnatural way to think about problems, because you have to forget things that you know—and you know everything about what you're trying to do, your plan and so on—and to look at yourself as a point in the distribution is a very unnatural exercise; people actually hate doing this and resist it.

There are also many difficulties in determining the reference class. In this case, the reference class is pretty straightforward; it's other people developing curricula. But what's psychologically interesting about the incident is that all of that information was in the head of the Dean of the School of Education, and still he said two years. There was no contact between something he knew and something he said. What was truly insightful to me, psychologically, was that he had all the information necessary to conclude that the prediction he was writing down was ridiculous.
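The outside view can be made concrete with the Dean's own numbers. This is a minimal sketch, not anything from the talk: it assumes, hypothetically, that the finishing times of groups that did finish are spread evenly between seven and ten years, and asks how likely a project in this reference class is to be done by a given horizon. The function name `prob_finished_within` is invented for the example.

```python
def prob_finished_within(years, p_finish, min_years, max_years):
    """Outside-view probability that a project in the reference class is done within `years`.

    Hypothetical assumption: completion times of the projects that finish are
    uniformly distributed between min_years and max_years.
    """
    if years <= min_years:
        return 0.0
    if years >= max_years:
        return p_finish
    return p_finish * (years - min_years) / (max_years - min_years)


# Reference-class figures from the Dean's answer: about 40% never finished,
# and the groups that finished took between 7 and 10 years.
P_FINISH, MIN_YEARS, MAX_YEARS = 0.6, 7.0, 10.0

# The group's inside-view estimates ranged from 1.5 to 2.5 years.
for horizon in (2.5, 7.0, 8.5, 10.0):
    p = prob_finished_within(horizon, P_FINISH, MIN_YEARS, MAX_YEARS)
    print(f"done within {horizon:4.1f} years: {p:.2f}")
```

Under these assumptions the group's two-year consensus has zero probability in the reference class, and even at ten years the chance of a finished book is only 60%.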

COMMENT: Perhaps he was being tactful.

KAHNEMAN: No, he wasn't being tactful; he really didn't know. This is really something that I think happens a lot: the failure to take the outside view comes from something that I call 'narrow framing', which is that you focus on the problem at hand and don't see the class to which it belongs. That's part of the psychology of it. There is no question as to which is more accurate—clearly the outside view, by and large, is the better way to go.

Let me just add two elements to the story. One, which I'm really ashamed of, is that obviously we should have quit. None of us was willing to spend seven years writing the bloody book. It was out of the question. We didn't stop, and I think that really was the end of rational planning. When I look back, I see the humor in our writing a book on rationality and going on after we knew that what we were doing was not worth doing, and it is not something I'm proud of.

COMMENT: So you were one of the 40 percent in the end.

KAHNEMAN: No, actually I wasn't there. I got divorced, I got married, I left the country. The work went on. There was a book. It took eight years to write. It was completely worthless. There were some copies printed, they were never used. That's the end of that story. ...

SESSION TWO

KAHNEMAN: Let me introduce a plan for this session. I'd like to take a detour, but where I would like to end up is with a realistic theory of risk taking. But I need to take a detour to make that sensible. I'd like to start by telling you what I think is the idea that got me the Nobel Prize—should have gotten Amos Tversky and me the Nobel Prize because it was something that we did together—and it's an embarrassingly simple idea. I'm going to tell you the personal story of this, and I call it "Bernoulli's Error": an error in the major theory of how people take risks.

The quick history of the field is that in 1738 Daniel Bernoulli wrote a magnificent essay, published by the St. Petersburg Academy of Sciences, in which he presented many of the seminal ideas of how people take risks. And he had a theory that explained why people take risks. Up to that time people were evaluating gambles by expected value, but expected value never explained risk aversion: why people prefer to get sure things rather than gambles of equal expected value. And so he introduced the idea of utility (as a psychological variable), which is what people assign to outcomes. So they're not computing the weighted average of outcomes where the weights are the probabilities; they're computing the weighted average of the utilities of outcomes. Big discovery, a big step in the understanding of it. It moves the understanding of risk taking from the outside world, where you're looking at values, to the inside world, where you're looking at the assignment of utilities. That was a great contribution.
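The difference between the two rules fits in a few lines. This is a minimal sketch: the amounts are hypothetical, outcomes are treated as final states of wealth (as Bernoulli did), and `math.log` stands in for his logarithmic utility.

```python
import math


def expected_value(gamble):
    """Probability-weighted average of the outcomes themselves."""
    return sum(p * x for p, x in gamble)


def expected_utility(gamble, utility):
    """Probability-weighted average of the utilities of the outcomes."""
    return sum(p * utility(x) for p, x in gamble)


# A gamble is a list of (probability, outcome) pairs; the amounts are hypothetical.
sure_thing = [(1.0, 50.0)]
coin_flip = [(0.5, 10.0), (0.5, 90.0)]

print(expected_value(sure_thing), expected_value(coin_flip))  # 50.0 50.0: a tie
print(expected_utility(sure_thing, math.log))  # ln 50, about 3.91
print(expected_utility(coin_flip, math.log))   # 0.5 * ln 900, about 3.40
```

Expected value cannot separate the two options, while the concave logarithm ranks the sure thing higher, which is the risk aversion Bernoulli set out to explain.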

He was trying to understand the decisions of merchants, really, and the example that he analyzes in some depth is that of a merchant who has a ship loaded with spices, which he is going to send from Amsterdam to St. Petersburg during the winter, with a 5% probability that the ship will be lost. That's the problem. He wants to figure out how the merchant is going to do this, when the merchant is going to decide that it's worth it, and how much the merchant should be willing to pay for insurance. All of this he solves. And in the process, he goes through a very elaborate derivation of logarithms. He really explains the idea.

Bernoulli starts out from a psychological insight, which is very straightforward: losing one ducat if you have ten ducats is like losing a hundred ducats if you have a thousand. The psychological response to a change is proportional to the size of the change relative to your wealth. That very quickly forces a logarithmic utility function. The merchant assigns a psychological value to different states of wealth and says: if the ship makes it, this is how wealthy I will be; if the ship sinks, this is my wealth; this is my current wealth; these are the odds. You have a logarithmic utility function, and you figure it out. If the result is positive, you do it; if it's not positive, you don't; and the difference tells you how much you'd be willing to pay for insurance.
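The merchant's calculation can be sketched the same way. Only the 5% loss probability comes from the text; the merchant's other wealth (3,000 ducats) and the value of the cargo (10,000 ducats) are hypothetical. With full insurance at premium P the merchant ends up with a sure amount, so the most he should pay is whatever leaves that sure wealth exactly as good, in log utility, as bearing the risk himself.

```python
import math


def certainty_equivalent(outcomes):
    """Sure wealth with the same log utility as the risky (probability, wealth) outcomes."""
    eu = sum(p * math.log(w) for p, w in outcomes)
    return math.exp(eu)


P_LOSS = 0.05          # from the text: chance the ship is lost
OTHER_WEALTH = 3000.0  # hypothetical: ducats the merchant has apart from the cargo
CARGO = 10000.0        # hypothetical: value of the spices if they arrive

voyage = [(1 - P_LOSS, OTHER_WEALTH + CARGO), (P_LOSS, OTHER_WEALTH)]
ce = certainty_equivalent(voyage)

# Fully insured wealth is (OTHER_WEALTH + CARGO - premium); set it equal to ce.
max_premium = (OTHER_WEALTH + CARGO) - ce
expected_loss = P_LOSS * CARGO

print(f"certainty equivalent: {ce:.0f} ducats")
print(f"max premium: {max_premium:.0f} ducats (expected loss {expected_loss:.0f})")
```

With these made-up figures the maximum premium comes out well above the expected loss of 500 ducats; that gap is what logarithmic utility implies about the value of insurance.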

This is still the basic theory you learn when you study economics, and in business you basically learn variants on Bernoulli's utility theory. It's been modified, it's axiomatic and formalized, and it's no longer logarithmic necessarily, but that's the basic idea.

When Amos Tversky and I decided to work on this, I didn't know a thing about decision-making—it was his field of expertise. He had written a book with his teacher and a colleague called "Mathematical Psychology", and he gave me his copy of the book and told me to read the chapter that explained utility theory. It explained utility theory, the basic paradoxes of utility theory that had been formulated, and the problems with the theory. The chapter also described the work of some really extraordinary people (Donald Davidson, one of the great philosophers of the twentieth century, and Patrick Suppes) who had fallen in love with the modern version of expected utility theory and had tried to measure the utility of money by actually running experiments where they asked people to choose between gambles. And that's what the chapter was about.

I read the chapter, but I was puzzled by something I didn't understand, and I assumed there was a simple answer. The gambles were formulated in terms of gains and losses, which is the way that you would normally formulate a gamble; actually there were no losses: there was always a choice between a sure thing and a probability of gaining something. But they plotted it as if you could infer the utility of wealth: the function that they drew was the utility of wealth, but the question they were asking was about gains.

I went back to Amos and I said, this is really weird: I don't see how you can get from gambles of gains and losses to the utility of wealth. You are not asking about wealth. As a psychologist you would know that if it demands a complicated mathematical transformation, something is going wrong. If you want the utility of wealth you had better ask about wealth. If you're asking about gains, you are getting the utility of gains; you are not getting the utility of wealth. And that actually was the beginning of the theory that's called "Prospect Theory", which is considered a main contribution that we made. And the contribution starts from what I call "Bernoulli's Error". Bernoulli thought in terms of states of wealth, which maybe makes intuitive sense when you're thinking of the merchant. But that's not how you think when you're making everyday decisions. When those great philosophers went out to do their experiments measuring utility, they did the natural thing: you could have that much for sure, or a certain probability of gaining more. And wealth is not anywhere in the picture. Most of the time people think in terms of gains and losses.

There is no question that you can make people think in terms of wealth, but you have to frame it that way; you have to force them to think in terms of wealth. Normally they think in terms of gains and losses. Basically that's the essence of Prospect Theory. It's a theory that's defined on gains and losses. It adds a parameter to Bernoulli's theory: what I call Bernoulli's Error is that his theory is short one parameter.

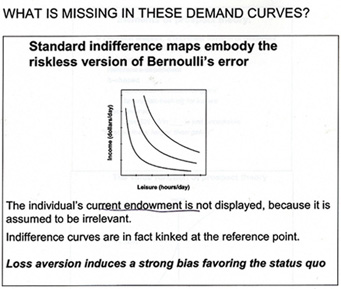

I will tell you for example what this means. You have somebody who's facing a choice between having—I won't use large units, I'll use the units I use for my students—2 million, or an equal probability of having one or four. And those are states of wealth. In Bernoulli's account, that's sufficient. It's a well-defined problem. But notice that there is something that you don't know when you're doing this: you don't know how much the person has now.

So Bernoulli in effect assumes, having utilities for wealth, that your current wealth doesn't matter when you're facing that choice. You have a utility for wealth, and what you have doesn't matter. Basically the idea that you're figuring gains and losses means that what you have does matter. And in fact in this case it does.

When you stop to think about it, people are much more risk-averse when they are looking at it from below than when they're looking at it from above. When you ask who is more likely to take the two million for sure, the one who has one million or the one who has four, it is very clear that it's the one with one, and that the one with four might be much more likely to gamble. And that's what we find.
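The example can be sketched to contrast the two accounts. Bernoulli's logarithmic utility of final wealth gives the same evaluation whoever is choosing (here it is exactly indifferent, since ln 2 equals the average of ln 1 and ln 4). A reference-dependent value function, applied to gains and losses measured from current wealth, does not. The curvature `alpha=0.5` and the weight `loss_aversion=2.0` are illustrative assumptions chosen to make the effect visible; they are not estimates from the research, and the function names are invented for this sketch.

```python
import math


def bernoulli_eu(gamble):
    """Log utility of final wealth; current wealth never enters."""
    return sum(p * math.log(w) for p, w in gamble)


def reference_value(x, alpha=0.5, loss_aversion=2.0):
    """Hypothetical value of a gain or loss x: concave for gains, convex and steeper for losses."""
    if x >= 0:
        return x ** alpha
    return -loss_aversion * (-x) ** alpha


def reference_eu(gamble, current_wealth):
    """Weighted value of the changes from current wealth."""
    return sum(p * reference_value(w - current_wealth) for p, w in gamble)


sure = [(1.0, 2.0)]               # states of wealth, in millions
risky = [(0.5, 1.0), (0.5, 4.0)]

print(bernoulli_eu(sure), bernoulli_eu(risky))  # both ln 2, for anyone

for current in (1.0, 4.0):
    prefers_sure = reference_eu(sure, current) > reference_eu(risky, current)
    print(f"has {current:.0f}M -> prefers {'sure' if prefers_sure else 'gamble'}")
# has 1M -> prefers sure
# has 4M -> prefers gamble
```

The person with one million sees a sure gain against a chance of a larger gain; the person with four sees a sure loss against a chance of losing nothing, and only the reference point distinguishes them.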

So Bernoulli's theory lacks a parameter. Here you have a standard function between leisure and income and I ask what's missing in this function. And what's missing is absolutely fundamental. What's missing is, where is the person now, on that tradeoff? In fact, when you draw real demand curves, they are kinked; they don't look anything like this. They are kinked where the person is. Where you are turns out to be a fundamentally important parameter.

Lots of very very good people went on with the missing parameter for three hundred years—theory has the blinding effect that you don't even see the problem, because you are so used to thinking in its terms. There is a way it's always done, and it takes somebody who is naïve, as I was, to see that there is something very odd, and it's because I didn't know this theory that I was in fact able to see that.

But demand curves are wrong. You always want to know where the person is. ...

SESSION THREE

The word "utility" that was mentioned this morning has a very interesting history, and it has had two very different meanings. As it was used by Jeremy Bentham, it was pain and pleasure, the sovereign masters that govern what we do and what we should do; that was one concept of utility. In economics in the twentieth century, in a development closely related to the idea of the rational agent model, the meaning of utility changed completely to become what people want. Utility is inferred from watching what people choose, and it's used to explain what they choose. Some columnist called it "wantability". It's a very different concept.

One of the things I did some 15 years ago was to draw a distinction between them, which obviously needed drawing, just to give them names. So "decision utility" is the weight that you assign to something when you're choosing it, and "experience utility", which is what Bentham wanted, is the experience. Once you start doing that, a lot of additional things happen, because it turns out that experience utility can be defined in at least two very different ways. One way is when a dentist asks you, does it hurt? That's one question that's got to do with your experience of right now. But what about when the dentist asks you, Did it hurt? and he's asking about a past session. Or it can be Did you have a good vacation? You have experience utility, which is everything that happens moment by moment by moment, and you have remembered utility, which is how you score the experience once it's over.

And some fifteen years ago or so, I started studying whether people remembered correctly what had happened to them. It turned out that they don't. And I also began to study whether people can predict how much they will enjoy what will happen to them in future. I used to call that "predictive utility", but Dan Gilbert has given it a much better name; he calls it "affective forecasting". It is the prediction of what your emotional reactions will be. It turns out people don't do that very well, either.

Just to give you a sense of how little people know, my first experiment with predictive utility asked whether people knew how their taste for ice cream would change. We ran an experiment at Berkeley when we arrived, and advertised that you would get paid to eat ice cream. We were not short of volunteers. People at the first session were asked to list their favorite ice cream and were asked to come back. In the first experimental session they were given a regular helping of their favorite ice cream, while listening to a piece of music—Canadian rock music—that I had actually chosen. That took about ten to fifteen minutes, and then they were asked to rate their experience.

Afterward, they were also told, because they had undertaken to do so, that they would be coming to the lab every day at the same hour for I think eight working days, and every day they would have the same ice cream, the same music, and rate it. And they were asked to predict their rating tomorrow and their rating on the last day.

It turns out that people can't do this. Most people get tired of the ice cream, but some of them get kind of addicted to the ice cream, and people do not know in advance which category they will belong to. The correlation between the change that actually happened in their tastes and the change that they predicted was absolutely zero.

It turns out—this I think is now generally accepted—that people are not good at affective forecasting. We have no problem predicting whether we'll enjoy the soup we're going to have now if it's a familiar soup, but we are not good if it's an unfamiliar experience, or a frequently repeated familiar experience. Another trivial case: we ran an experiment with plain yogurt, which students at Berkeley really didn't like at all. We had them eat yogurt for eight days, and after eight days they kind of liked it. But they really had no idea that that was going to happen. ...

SESSION FOUR

Fifteen years ago, when I was doing those experiments on colonoscopies and the cold-pressor experiments, I was convinced that the experience itself is the only thing that matters, and that people just make a mistake when they choose to expose themselves to more pain. I thought it was kind of obvious that people are making a mistake, particularly because when you show people the choice, they regret it: they would rather have less pain than more pain. That led me to the topic of well-being, which is the topic that I've been focusing on for more than ten years now. And the reason I got interested in that was that in the research on well-being, you can again ask, whose well-being do we care for? The remembering self?—and I'll call that the remembering-evaluating self; the one that keeps score on the narrative of our life—or the experiencing self? It turns out that you can distinguish between these two. Not surprisingly, essentially all the literature on well-being is about the remembering self.

Millions of people have been asked the question, how satisfied are you with your life? That is a question to the remembering self, and there is a fair amount that we know about the happiness or the well-being of the remembering self. But the distinction between the remembering self and the experiencing self suggests immediately that there is another way to ask about well-being, and that's the happiness of the experiencing self.

It turns out that there are techniques for doing that. And the technique—it's not at all my idea—is experience sampling. You may be familiar with that—people have a cell phone or something that vibrates several times a day at unpredictable intervals. Then they get questions on the screen that say, what are you doing? and there is a menu—and Who are you with? and there is a menu—and How do you feel about it?—and there is a menu of feelings.

This comes as close as you can to dispensing with the remembering self. There is an issue of memory, but the span of memory is really seconds, and people take a few seconds to do that; it's quite efficient, so you can collect a fair amount of data. So some of what I'm going to talk about is the two pictures that you get, which are not exactly the same, when you look at what makes people satisfied with their life and what makes them have a good time.
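The sampling protocol can be sketched as a small scheduler. This is an assumption-laden illustration, not the software used in any study: the waking window (9:00 to 21:00), six prompts per day, and the 45-minute minimum gap are hypothetical choices, `sampling_schedule` is an invented name, and the three questions are paraphrased from the text without their menus.

```python
import random

QUESTIONS = ("What are you doing?", "Who are you with?", "How do you feel about it?")


def sampling_schedule(n_prompts, start_hour=9.0, end_hour=21.0, min_gap_hours=0.75, rng=None):
    """Random prompt times (in hours) inside the waking window, at least min_gap_hours apart."""
    rng = rng or random.Random()
    # Shrink the window by the total required gap, draw sorted uniform offsets in it,
    # then add the gaps back so consecutive prompts are never too close together.
    span = (end_hour - start_hour) - min_gap_hours * (n_prompts - 1)
    if span < 0:
        raise ValueError("window too short for that many prompts and that gap")
    offsets = sorted(rng.uniform(0.0, span) for _ in range(n_prompts))
    return [start_hour + off + i * min_gap_hours for i, off in enumerate(offsets)]


rng = random.Random(2007)  # seeded so the example schedule is reproducible
for t in sampling_schedule(6, rng=rng):
    hh, mm = divmod(round(t * 60), 60)
    print(f"{hh:02d}:{mm:02d}  " + " / ".join(QUESTIONS))
```

Drawing the offsets in a shortened window and then adding back the gaps keeps the prompt times unpredictable to the participant while guaranteeing the minimum spacing.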

But first I thought I'd show you the basic puzzles of well-being. In the chart of the "Easterlin Paradox" there is a line that goes almost straight up, which is GDP per capita. The line that isn't going anywhere is the percentage of people who say they are very happy. And that's a remembering-self type of question. It's one big puzzle of the well-being research, and it has gotten worse in the last two weeks because there are now new data on international comparisons that make the puzzle even more surprising.

But this is within a country: the United States. It's the same for Japan; over a period when real income grew by a factor of four or more, you get nothing on life satisfaction. Which is sort of troubling for economists, because things are improving. I once had that conversation with an economist, David Card, at Berkeley—he used to be at Princeton—and asked him, how would an economist measure well-being? He looked at me as if I were asking a silly question and said, "income of course". I said, well what's the next measure? He said, "log income". [laughter] And the general idea is that the more money you have, the more choices you have—the more options you have—and that giving people more options can only make them better off. This is the fundamental idea of economic analysis. It probably turns out to be false, but in any case it doesn't correspond to these data. So Easterlin, as an economist, caused some distress in the profession with these results.

So what is the puzzle here? The puzzle is related to affective forecasting: most people believe that circumstances like becoming richer will make them happier. It turns out that people's beliefs about what will make them happier are mostly wrong; they are wrong in a consistent direction, and they are wrong very predictably. And there is a story here that I think is interesting.

When people studied various categories of people, like the rich and the poor, they found differences in life satisfaction. But everybody who looks at those differences is surprised by how small they are relative to the variability within each of these categories. Compare the healthy and the unhealthy: very small differences.

Age—people don't like the idea of aging, but, at least in the United States, people do not become less happy or less satisfied with their life as they age. So a lot of the standard beliefs that people have about life satisfaction turn out to be false. This is a whole line of research—I was doing predictive utility, and Dan Gilbert invented the term "affective forecasting", which is a wonderful term, and did a lot of very insightful studies on that.

As an example of the kinds of studies he did, he asked people before an election, Democrats and Republicans alike, how happy they thought they would be depending on whether Ann Richards or George Bush was elected. (I think he started that research in '94, when Bush ran against Ann Richards and became governor of Texas.) People thought that it would actually make a big difference. But when you come back two weeks after the election and measure their life satisfaction or their happiness, it's a blip, or nothing at all.

QUESTION: What about four years later?

KAHNEMAN: And interestingly enough, you know, there is an effect of political events on life satisfaction. But that effect, like the effects of other things such as becoming paraplegic or getting married, is smaller than people expect.

COMMENT: Unless something goes really wrong.

KAHNEMAN: Unless something goes terribly wrong. ...

SESSION FIVE

I'll start with a couple of psychological notions.

There seems to be a very general psychological principle at work here, which is that sometimes when you are asked a question that is difficult, the mind doesn't stay silent if it doesn't have the answer. The mind produces something, and what it produces very characteristically is the answer to an easier but related question. That's one of the heuristics of good problem-solving, but it is a system one operation, which is an operation that takes place by itself.

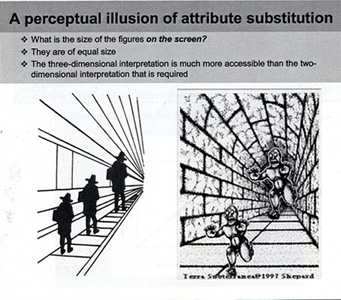

The visual illusions that you have here are that kind of thing, because the question that people are asked is: what is the size of the three men on the page? When you look at it, you get a pretty compelling impression (it turns out to be an illusion) that the three men are not the same size on the page. They seem to be different sizes. It is the same thing with the two monsters. When you take a ruler to them, they are of course absolutely identical. Now what seems to be happening here is that we see the images three-dimensionally. If they were photographs of three-dimensional objects, of scenes, then indeed the person to the right would be taller than the person to the left. What's interesting about this is that people are not confused about the question that they've been asked; if you ask them how large the people are, they answer in fractions of an inch. They answer in centimeters, not meters. They know that they are supposed to give the two-dimensional size; they just cannot. What they do is give you something that is a scaling of a three-dimensional experience, and we call that "attribute substitution". That is, you try to judge an attribute and you end up judging something else. It turns out that this happens everywhere.

So the example I gave yesterday about happiness and dating is the same thing; you ask people how happy they are, and they tell you how happy they are with their romantic life, if that happens to be what's on the top of their mind at that moment. They are just substituting.

Here is another example. Some ten or fifteen years ago, when there were terrorism scares in Europe but not in the States, people who were about to travel to Europe were asked questions like, How much would you pay for insurance that would return a hundred thousand dollars if during your trip you died for any reason? Other people were asked, how much would you pay for insurance that would pay a hundred thousand dollars if you died in a terrorist incident during your trip? People pay a lot more for the second policy than for the first. What is happening here is exactly what was happening with prolonging the colonoscopy. And in fact, psychologically (I won't have the time to go into the psychology unless you press me), the same mechanism produces those violations of dominance, and basically what you're doing there is substituting fear.

You are asked how much insurance you would pay, and you don't know—it's a very hard thing to do. You do know how afraid you are, and you're more afraid of dying in a terrorist accident than you're afraid of dying. So you end up paying more because you map your fear into dollars and that's what you get.

Now if you ask people the two questions next to each other, you may get a different answer, because they see that one contains the other. A post-doc had a very nice idea. You ask people, How many murders are there every year in Michigan, and the median answer is about a hundred. You ask people how many murders are there every year in Detroit, and the median estimate is about two hundred. And again, you can see what is happening. The people who notice that, "oh, Michigan: Detroit is there" will not make that mistake. Or if asked the two questions next to each other, many people will understand and will do it right.

The point is that life serves us problems one at a time; we're not served with problems where the logic of the comparison is immediately evident so that we'll be spared the mistake. We're served with problems one at a time, and then as a result we answer in ways that do not correspond to logic.

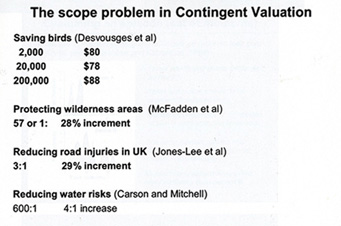

In the case of time, we took the average instead of the integral. And we take the average instead of the integral in many other situations. Contingent valuation is a method where you survey people and ask them how much they would pay for different public goods. It's used in litigation, especially in environmental litigation, and it's used in cost-benefit analysis. I think it's no good whatsoever, but it is an example worth studying.

How much would you pay to save birds from drowning in oil ponds? There is a whole scenario of how the poor birds mistake the oil ponds for real water ponds, and so how much should we pay to basically cover the oil ponds with netting to prevent that from happening. Surprisingly, people are willing to pay quite a bit once you describe the scenario well enough. But one thing is, it doesn't matter what the number of birds is. Two thousand birds, two hundred thousand, two million, they will pay exactly the same amount.

QUESTION: This is not price per bird?

KAHNEMAN: No, this is total. And so the reason is the same reason that you had with time, taking an average instead of an integral. You're not thinking of saving two hundred thousand birds. You are thinking of saving a bird. The emotion is associated with the idea of saving a bird from drowning. The quantity is completely separate. Basically you're representing the whole set by a prototype incident, and you evaluate the prototype incident. All the rest are like that.
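The contrast between an average and an integral can be shown directly. In this minimal sketch the per-minute pain ratings and the per-bird values are hypothetical; `prototype_score` stands for the evaluation the text describes (a typical moment, a typical bird) and `total_score` for the sum over all of them.

```python
def prototype_score(items):
    """Evaluate the set by its typical member: the average."""
    return sum(items) / len(items)


def total_score(items):
    """Evaluate the set by adding up every member: the integral."""
    return sum(items)


# Hypothetical pain ratings, one per minute; the longer episode adds milder minutes at the end.
short_episode = [2, 4, 8]
longer_episode = short_episode + [5, 3]

print(prototype_score(short_episode), prototype_score(longer_episode))  # the longer one averages lower
print(total_score(short_episode), total_score(longer_episode))          # 14 22

# Saving birds: each bird carries the same hypothetical value of 1.0.
few, many = [1.0] * 2_000, [1.0] * 200_000
print(prototype_score(few) == prototype_score(many))  # True: the number of birds drops out
print(total_score(many) / total_score(few))           # 100.0
```

An evaluation by the average prefers the longer episode even though it contains strictly more pain, and it is blind to whether two thousand or two hundred thousand birds are saved.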

When I was living in Canada, we asked people how much money they would be willing to pay to clean lakes from acid rain in the Haliburton region of Ontario, which is a small region of Ontario. We asked other people how much they would be willing to pay to clean lakes in all of Ontario.

People are willing to pay the same amount for the two quantities because they are paying to participate in the activity of cleaning a lake, or of cleaning lakes. How many lakes there are to clean is not their problem. This is a mechanism I think people should be familiar with: the idea that when you're asked a question, you don't answer that question; you answer another question that comes more readily to mind. That question is typically simpler; it's associated, it's not random; and then you map the answer to that other question onto whatever scale there is. It could be a scale of centimeters, or a scale of pain, or a scale of dollars, but you can recognize what is going on by looking at the variation in these variables. I could give you a lot of examples, because one of the major tricks of the trade is understanding this attribute substitution business: how people answer questions.

COMMENT: So, for example, Save the Children-type programs focus you on the individual.

KAHNEMAN: Absolutely. There is even research showing that when you show pictures of ten children, it is less effective than when you show the picture of a single child. When you describe their stories, the single instance is more emotional than the several instances and it translates into the size of contributions.

People are almost completely insensitive to amount in system one. Once you involve system two and systematic thinking, then they'll act differently. But emotionally we are geared to respond to images and to instances, and when we do that we get what I call "extension neglect." Duration neglect is an example: you have a set of moments and you ignore how many moments there are. You have a set of birds and you ignore how many birds there are. ...

SESSION SIX

The question I'd like to raise is something that I'm deeply curious about, which is what should organizations do to improve the quality of their decision-making? And I'll tell you what it looks like, from my point of view.

I have never tried very hard, but I am in a way surprised by the ambivalence about it that you encounter in organizations. My sense is that by and large there isn't a huge wish to improve decision-making—there is a lot of talk about doing so, but it is a topic that is considered dangerous by the people in the organization and by the leadership of the organization. I'll give you a couple of examples. I taught a seminar to the top executives of a very large corporation that I cannot name and asked them, would you invest one percent of your annual profits in improving your decision-making? They looked at me as if I was crazy; it was too much.

I'll give you another example. Take the intelligence community and the CIA: there is a lot of activity, there are academics involved, and there is a CIA university. I was approached by someone there who said, will you come and help us out, we need help to improve our analysis. I said, I will come, but on one condition, and I know it will not be met. The condition is: if you can get a workshop where one of the ten top people in the organization spends an entire day, I will come. If you can't, I won't. I never heard from them again.

What you can get them to do is organize a conference where some really important people will come for three-quarters of an hour and give a talk about how important it is to improve the analysis. But when it comes to, are you willing to invest time in doing this, the seriousness just vanishes. That's been my experience, and I'm puzzled by it.

Since I'm in the right place to raise that question, with the right people to raise the question, I will. What do you think? Where did this come from; can it be fixed; can it be changed; should it be changed? What is your view, after we have talked about these things?

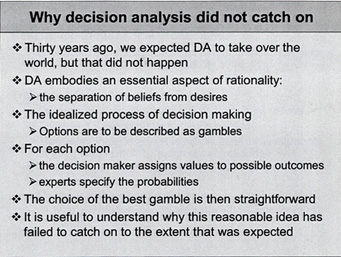

One of my slides concerned why decision analysis didn't catch on. It's actually from a talk I prepared, because 30 years ago we all thought that decision analysis was going to conquer the world. It was clearly the best way of doing things: you took Bayesian logic and utility theory, a consistent framework, and you separated beliefs from values; you would elicit the values of the organization and the beliefs of the organization, and pull them together. It obviously looked like the way to go, and basically it's been a flop. Some organizations are still doing it, but it really isn't what it was intended to be 30 years ago.
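The logic that was expected to conquer the world is simple to state in code. This is a minimal sketch with hypothetical options, outcomes, probabilities, and utilities: values are elicited once per outcome, beliefs are elicited separately for each option, and the recommendation is the option with the highest expected utility. `expected_utility` and `recommend` are names invented for the example.

```python
import math

# Values: elicited once, as the utility of each outcome on a 0-100 scale (hypothetical).
VALUES = {"big success": 100.0, "modest success": 60.0, "failure": 0.0}

# Beliefs: elicited separately, per option, as probabilities over the same outcomes (hypothetical).
BELIEFS = {
    "launch now": {"big success": 0.3, "modest success": 0.3, "failure": 0.4},
    "pilot first": {"big success": 0.2, "modest success": 0.6, "failure": 0.2},
}


def expected_utility(beliefs, values):
    """Combine one option's probabilities with the shared utilities."""
    total = sum(beliefs.values())
    if not math.isclose(total, 1.0):
        raise ValueError(f"probabilities sum to {total}, not 1")
    return sum(p * values[outcome] for outcome, p in beliefs.items())


def recommend(beliefs_by_option, values):
    """Return the option with the highest expected utility, plus all the scores."""
    scores = {option: expected_utility(b, values) for option, b in beliefs_by_option.items()}
    return max(scores, key=scores.get), scores


best, scores = recommend(BELIEFS, VALUES)
print(scores)  # launch now: about 48, pilot first: about 56
print(best)    # pilot first
```

Keeping beliefs and values in separate structures is the point of the method: a disagreement about probabilities and a disagreement about what matters can be argued out, and revised, independently.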