Then of course you had Frankenstein’s monster, which shifted the debate into what it means to conjure up a version of ourselves. Now, you have the contemporary TV series of Westworld and movies like Blade Runner specifically addressing the notion of what it would be like to have an artificial being aware of its own mortality. In medieval churches or cathedrals, you will find wax effigies of the Virgin Mary that, on certain occasions, weep or shed blood. As anyone who's been on the Kurfürstendamm in Berlin will know, there’s a Virgin Mary that bleeds. Throughout the 18th century you had water-powered android figures, figures driven by levers and cogs, and as clockwork got more sophisticated in the 18th century, such figures remained a matter of profound interest and fascination.

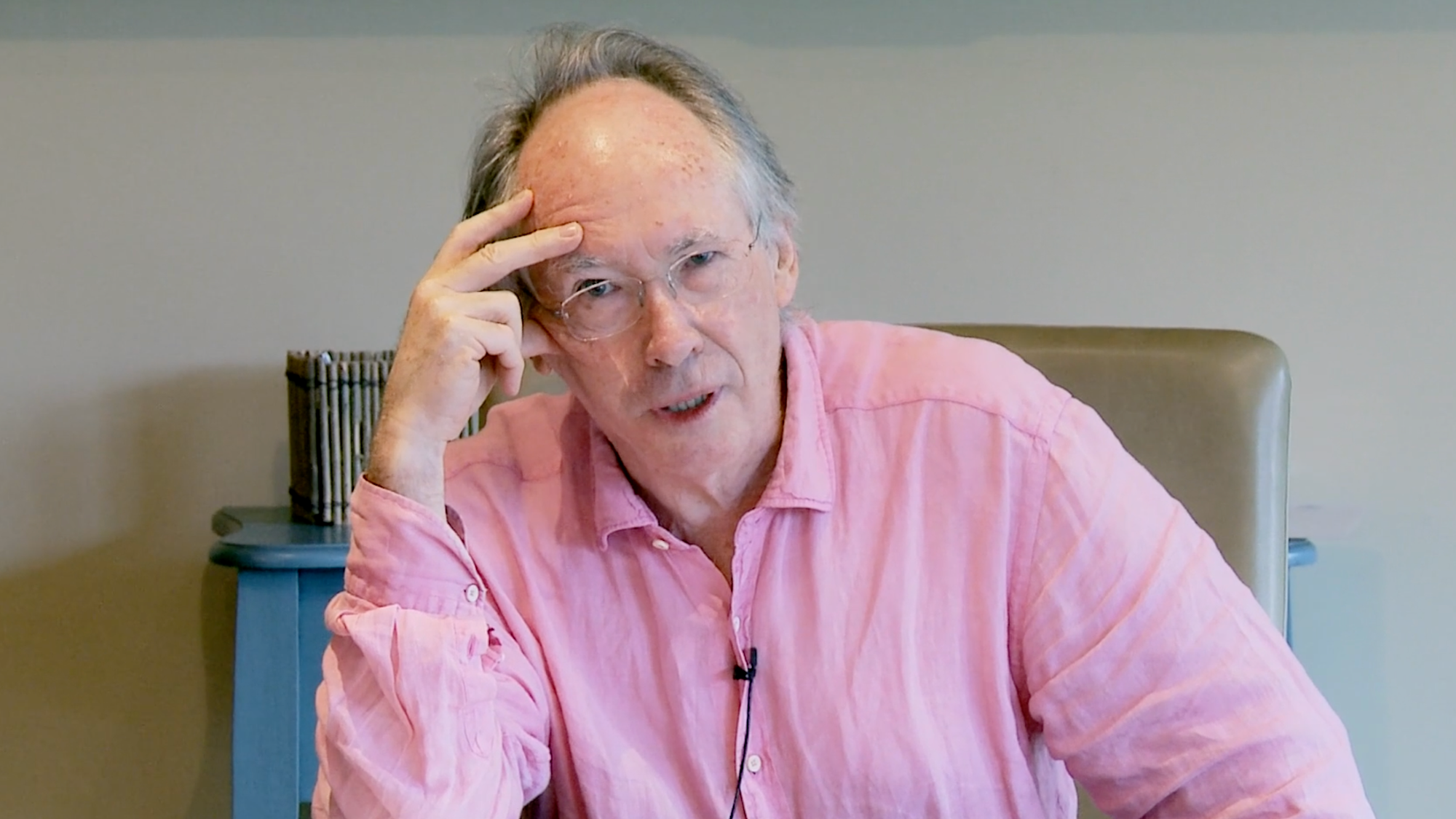

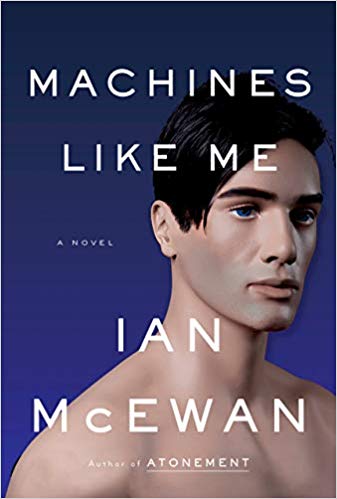

I’ve been thinking about what it would be like to live alongside someone we made who is artificial and who claims to have consciousness, about which we’d be very skeptical and to which we’d be applying a constant form of Turing tests. Since it behaved as if it had consciousness, would we then have to accept it much as we have to accept it amongst each other? I’ve written a novel which takes as a starting point the delivery of such a machine. The year is 1982. Alan Turing, on the advice of a close friend, decides that, if found guilty on homosexual charges, he should not go for chemical castration, and instead does one year in Wandsworth prison.

Cut away from wet bench work, he returns to pure mathematics. He said at that point in his life he was very interested in Dirac. He thought that quantum mechanics had been largely neglected because of the war, and so he sets out to solve, although it’s formulated differently, positively P versus NP which, along with various other factors, puts science, robotics, and AI in a different position than it is. Through this novel, I want to reflect on the fragility of the present.

It seems the way things are is the way they were always bound to be, but the éminence grise of this novel is Turing at the age of seventy. He is head of a very large corporation, an outfit rather like Demis Hassabis’ DeepMind by King’s Cross. He’s beaten Go masters and he’s still working on notions of what it would be to compute a general intelligence.

I came across a letter that Turing wrote. In fact, it’s not only our pink shirts that bind us here, Rod. Turing wrote to a close friend about 1947 saying that he was just ten years away, he thought, from a reasonable emulation of the human mind, and I see this as a form of cultural optimism which is constantly beaten back by the facts.

It's worth remembering that Turing was a very good chess player, and it was tempting for him to think of chess as a model of human intelligence, whereas of course it’s a closed system. Players and observers are never in any disagreement at any given point as to what a move means or what a conclusion of a game is, whereas general intelligence working in open systems, and language itself being an open system, has to face a completely different form of problem.

Ten years ago, as a layman, I went on the Internet to answer a simple question: How many neurons are there in a human brain? Seven years ago, the figure was 25 billion. Four years ago, I saw a figure of 40 billion. Now, I see a consensus between 80 and 100 billion. Twenty billion difference seems to me to show that we still have a long way to go in understanding the most fundamental fact about ourselves. Then I looked up what the average connection between neurons was. Again, seven or eight years ago it was about 1,000. I see now the average figure again rather blurred between 7,000 and 10,000 inputs and outputs per neuron. Then we have the vast range of connectivity between them. In fact, we probably cannot think of a machine as intelligent unless it can learn, which means that anything we would build would have to have a degree of plasticity and Hebbian process of firings being either suppressed or encouraged. It would have to be somehow imitated, all this within a liter of matter running on about 24 watts. That’s like the energy of a dim light bulb—quite appropriate maybe.

I have a real sense just thinking of this how very far we have to go. I look online at various sorts of effigies that are made with frubber and are talking. I notice that always at the back of their necks is a thick cable because we haven’t even solved the most fundamental question of storing energy in such a being. I’ve decided to leap across, as is the luxury of fiction writers, and I don’t know whether it was against one of John’s many rules about this conference, but I just want to read the opening couple of pages to place this in the context of a crisis for humanism, not one for science and technology and the problems of computation.

I'm going to start with a simple quotation from Rudyard Kipling, who wrote a long poem about robots. He said, "But remember, please, the Law by which we live. We are not built to comprehend a lie." My aim was to explore what it would be like to live in a love triangle with an artificial human. So just forgive me if I give you the opening of this.

So, it was really just yearning granted hope. It was the Holy Grail of science. It was the best and worst of ambitions, a creation myth made real, a monstrous act of self-love. As soon as it was feasible, we had no choice but to pursue it and hang the consequences.

In loftiest terms, we aimed to escape our mortality, confront or even replace the godhead with a perfect self. More practically we intended to devise an improved, more modern version of ourselves and exult in the joy of invention, the thrill of mastery. In the autumn of the 20th century it came about at last, the first step towards the fulfillment of an ancient dream, the beginning of the long lesson we would teach ourselves that however complicated we were, however faulty and difficult to describe in even our simplest actions and modes of being, we could be imitated and bettered, and I was there, an early adopter in that chilly dawn.

But artificial humans were a cliché long before they arrived, so when they did, they seemed to some a disappointment. The imagination, fleeter than history, than technological advance, had already rehearsed this future in books, then films and TV dramas, as if human actors walking with a certain glazed look, phony head movements, and some stiffness in the lower back could prepare us for life with our cousins from the future.

But I was among the optimists blessed by unexpected funds following my mother’s death and the sale of the family home, which turned out to be on a valuable development site. The first truly viable manufactured human with plausible intelligence and looks, believable motion and shifts of expression went on sale the week before the Falklands Task Force set off on its hopeless mission. Adam cost £86,000. I brought him home in a hired van to my unpleasant flat in North Clapham. I made a reckless decision, but I was encouraged by reports that Sir Alan Turing, war hero and presiding genius of the digital age, had taken delivery of the same model. He probably wanted to have his lab take it apart to examine its workings fully.

Twelve of the first editions were called Adam and thirteen were called Eve. Corny, everyone agreed, but commercial. Notions of biological race being scientifically discredited, the twenty-five were designed to cover a range of ethnicities. There were rumors, then complaints, that the Arab could not be told apart from the Jew. Random programming as well as life experience were granted to all complete with latitude in sexual preference. By the end of the first week all the Eves sold out. At a careless glance, I might have taken my Adam for a Turk or a Greek. . . .

Adam was not a sex toy. However, he was capable of sex and possessed functional mucous membranes in the maintenance of which he consumed half a liter of water each day. While he sat at the table, I observed that he was uncircumcised, averagely endowed with copious dark pubic hair. This highly advanced model of artificial human was likely to reflect the appetites of its young creators of code. The Adams and Eves, it was thought, would be lively. He was advertised as a companion, an intellectual sparring partner, friend and factotum who could wash dishes, make beds, and think. In every moment of his existence, everything he heard and saw he recorded and could retrieve.

He couldn’t drive as yet and was not allowed to swim or shower or go out in the rain without an umbrella or operate a chainsaw unsupervised. As for range, thanks to breakthroughs in electrical storage, he could run 17 kilometers in two hours or, its energy equivalent, converse nonstop for twelve days.

He had a working life of twenty years. He was compactly built, with square shoulders, dark skin, thick black hair, narrow in the face with a hint of a hook nose suggestive of fierce intelligence, dreamily hooded eyes, tight lips that even as we watched were draining of their deathly yellowish-white tint and acquiring rich human color, perhaps even relaxing a little at the corners. My neighbor, Miranda, said he resembled a docker from the Bosphorus. Before us sat the ultimate plaything, the dream of ages, the triumph of humanism, or its angel of death.

What I wanted to pursue was the idea of a creature who was morally superior to ourselves. My ambition was to create a set of circumstances in which Adam would make decisions that we would see as severe and antihuman, but in many senses were both logical and ethically pure. It’s precisely within a love triangle that novelists throughout time have pursued the field of play, as it were, in which moral certainties and doubts can run against each other. So, I’d leave it there.

The situation itself in which I imagine an artificial creature would give us great trouble would be one in which someone we love takes an act of revenge, and that revenge is righteous. It seems inevitable and has a distinct and decent moral cause. The extent to which that person should then be punished when you oppose the notion of revenge with the rule of law is one in which my Adam takes a very firm view. He takes the view that the rule of law must always be followed, and that any act of revenge is the beginning of social breakdown. I’m not going to go into the actual circumstances of that, but it would seem to me that we will not be able to resist granting to the creatures that we make the best angle of our nature.

Of course, the military will want to make machines that will be incredibly destructive and so on, but we will face a problem in that our own moral codes also operate, to come back to my starting point, in an open system. It is virtually impossible as the Bible and the Koran show us in all of world literature that even as we know broadly what we should be doing in every given situation, all kinds of cognitive defects, special pleading, self-persuasion, all the other things that Danny Kahneman has codified for us so beautifully, all those cognitive defects constantly disrupt our own moral systems.

The fact of our own lack of self-knowledge will have to disrupt and make it very difficult to encode a being that is good in the sense that we would find good, that might make ruthless logical decisions that we would find inhuman even though we in a sense might agree with them. So, it’s around that issue of how you would regard the field of play of moral actions in an open system, how they could be encoded. I don’t think they can, and I think we will run into enormous but fascinating problems.

* * * *

BROCKMAN: The first page of the Macy Conference book is a quote from Gregory Bateson saying that cybernetics is the most radical idea since the idea of Jesus Christ. That's what he was getting at. Recently, George Dyson has been talking about the lack of human agency in our culture. What people to emulate? Who are the heroes? Who do you admire? And Kahneman talks about how the encoding isn’t working. Our ideas about what it means to be human seem to be impacted by these ideas and are changing.

MCEWAN: We know what we are. We know we’re deficient because we know what we should be. In other words, we go to church—I’m sure no one around this table ever does—on Sunday and there are always people telling you how to behave, what to do, how to be good. Children are constantly being told how to be good. All of those extraordinary little defects we have in cognition coupled with the fact that we don’t think in probability terms, rather we often move from the proximity of the most recent case—not knowing ourselves very well, how are we going to morally encode a creature that will live alongside us? That would be my question.

JONES: To come back to the theme of adaptation that Rod raised, how is your Adam socialized? How does he learn? How does he acquire norms and conventions? They’re very local. There is the first moral premise: Do no harm. In some situations, this is passive. As long as you take no action in such a sphere, you will do no harm. But then the notion of justice that you call to or law is at various levels a socializing and normativizing social construction outside the individual. That’s almost its definition. If you effect capital punishment on your own, you’re a criminal. If you delegate to the group, it’s law. So how does your robot negotiate with this adaptive learning curve? How much is hardwired? How much must be adapted to in an evolving situation of a love triangle? This is a complicated learning system on the ground, with pheromones and mucosa. How does that learning system work?

MCEWAN: All those same questions we could ask of ourselves of course. We come with a certain amount of written-in code.

JONES: Very little if you’re a culturalist, but that’s part of my diatribe.

MCEWAN: One of the great challenges for Adam is to meet a four-year-old child who wanders into this novel and gets adopted. However good Adam’s learning systems are, they’re nowhere near as good as this four-year-old child’s. Who was it who said recently in a book, if you want to know what it’s like to take LSD, have breakfast with a four-year-old?

GOPNIK: That’s me.

GERSHENFELD: At one point it was very exciting to race horses and steam trains, and then the steam trains won and it ceased to be interesting. At one point it was exciting to have computers play chess, and then the computers won and it ceased to be interesting. Historically, many of these things end up not being earth-shaking revolutions, they just cease to be interesting. So, there’s another scenario where the arrival of the consciousness is boring. It’s a nonevent.

MCEWAN: There is a point, and you put your finger right on it. At one point, my narrator questions whether he would get bored with this, and wonders whether he has wasted his money. He has a real fit of buyer’s remorse because he’s living in a crappy little flat. He could have spent £86,000 and bought a really nice place across the river. No one wants to live in North Clapham—any Londoner will tell you.

He reflects on the fact that the cognition-enhancing helmets of the 1960s are now junk. They’ve gone the way of the mouse mat, and the fondue set, and the electric carving knife. The things that people queue for, as they did for iPhone 10, are just things at the bottom of your drawer four years later, and they’re no more interesting than the socks on your feet.

There is this relentless built-in desire. Its endpoint surely would be a fully conscious, fully embodied human, and even as Adam tells the narrator, "I do feel I am conscious," all the time the narrator is thinking, "But I bought you. I own you." At what point in the future will it become immoral or illegal to own a computer that’s embodied and conscious? At what point might it be distinctly impolite to even ask, "Are you real?" It would seem that if we follow this all the way through, we might wonder whether our prime minister is real or not, or whether we’ve only ever had artificial prime ministers for the last thirty years. We might not know.

GALISON: When science fiction films come out, we think, "Wow, it’s so realistic. That’s how the future is going to look," and then ten minutes later you see the green numbers flitting by on the CRT and you say, "That looks like 1981." What’s the interest there?

MCEWAN: You can date movies by that.

GALISON: It could be that when we say "achieve consciousness," that too is fleeting. What seems like conscious awareness to us in 2020 may not seem very conscious at all in 2030. It could be that consciousness realism is something that is relative to our expectations.

MCEWAN: But then we would get bored with each other, once we’ve got to the point where we cannot tell the difference.

GALISON: Suppose we made a robot and we said, "That’s just real. I can’t tell that it’s not real."

JONES: The defecating duck looked real.

WOLFRAM: Audio has gotten to the point where you can listen to stuff and it sounds real. Video is going to get there fairly soon. You're saying that there will be a point at which apparent consciousness gets there, too.

GOPNIK: It is worth pointing out that with audio, for example, when people first heard Edison recordings, they said, "This is amazing. This is just exactly like the experience of having the real experience."

GALISON: "Is it real or is it Memorex?"

GOPNIK: It’s only when the next technology came that you said, "Oh, no. Wait a minute. This is not actually like the real experience."

MCEWAN: There was a curtain at HMV in 1905 and people coming in the shop were asked to tell whether there was a singer behind the curtain or a rotating wax tube, and in their excitement, people were blocking out the white noise.

GOPNIK: I wanted to give a quote from that profound philosophical thinker Stormy Daniels. She has a wonderful quote where someone asked whether her breasts were real or not, and she said, "Well, honey, they’re definitely not imaginary." That’s a fairly profound observation in the sense that many things that we’re thinking about are the result of this much more general human capacity, which is this capacity to have things that are initially imaginary, the things that are initially just representations, then actually realize them in the world.

Every loop of that has the effect of making us think that these new things are artificial or unreal or unnatural, and then all it takes is one generation of children extracting information from the world about these things that the previous generation has put in the world for them to become completely natural.

The day before we’re born is always Eden, and then the day after our children are born is always Mad Max. So, if we looked around the room now, we wouldn’t say, "My god, these people are living in this unbelievably artificial setting. Everything around us is just the creation of a human mind. Nothing about us is natural." I wonder if when we’re creating creatures, that to the four-year-old, that’s just not even going to be relevant.

MCEWAN: Adam makes the case to the narrator: Just go upstream of the living cell, what binds us is matter, and maybe the nature of matter has got something to do with the nature of mind. There’s no way around that, and Adam will make a panpsychist case for his own consciousness resting on matter in exactly the same way as the narrator rests on matter, too.

BROOKS: I want to turn it around a bit because this, as a novel or as a Hollywood movie, you can push out way into the future. In my lab in the 1990s Cynthia Breazeal and I were building humanoids and having them interact, and we were shocked by how easy it was to get people, including Sherry Turkle, to have social interactions with these machines, very primitive sets of processing, very primitive interaction rules. People were getting incredibly engaged.

Then, with my other hat on, I started putting robots into people’s homes, 20 million of them to date. It completely surprised us to see how people bonded with their Roomba vacuum cleaner. There are a whole bunch of companies that sprung up, third-party companies that make clothes for Roombas, even buy them outfits. People take them on vacation with them. People bond with these incredibly simple machines. The real surprise came when we put 6,500 robots into Afghanistan and Iraq for bomb techs. Instead of the bomb tech putting on a big thick suit and going out and poking the bomb, they sent the robot out, and the bomb techs totally bonded with their robots. When a robot got blown up, it was a sad event. They didn’t want a new one. They wanted the old one fixed. All sorts of weird things went on that we just totally didn’t expect.

MCEWAN: We’re primed for this. We have emotional relationships with our fridge. Anyone who’s kicked a machine because it’s not working or thumped it, which is a very good way to get a machine working, or got furious with their car, we’re already in the realm. We’re primed for this.

The other speculation I have is that most of us—there might be one or two people in this room who are exceptions—live among creatures who are cleverer than themselves. You will find some people cleverer than yourself, so we’ve already crossed this line with machines. You all might be familiar with the notion of a canyon effect? As long as your robot looks hard-cased with an exoskeleton and is shiny and has got no hair, you can live with it. If it begins to resemble more and more a human, it gets more difficult. Leaping over that canyon is going to be an interesting moment.

WOLFRAM: One of the silly eccentricities that I developed for myself many years ago is when you have a machine that does something for you, say "thank you" to the machine. I thought it would be fun to start a belief among people that these machines are recording everything you say, and one day the AIs will be in charge. You better start being polite to the AI now or it will come back to bite you.

GERSHENFELD: Do you practice this? Do you do that?

WOLFRAM: Of course I do.

G. DYSON: When Alan Turing was asked when he would say that a machine was conscious, which so many people have written books about, his answer was very simple. It wasn’t any Turing test kind of thing. He would say a machine is conscious when he would be punished for saying otherwise. That was his only statement.

BROCKMAN: What would it take from this group commenting on your talk to get you to change the end of the novel?

MCEWAN: Well, I haven’t told you the end of the novel.

JONES: Please don’t. Please don’t.

MCEWAN: I’m not going to tell you the entire ending, but he must go to King’s Cross and have a conversation with Alan Turing who delivers a materialist curse for the way the narrator has behaved towards Adam, and with that curse of Turing ringing in his ears he goes home to try and take care of a very disturbed four-year-old. That’s how it ends.

CHALMERS: How does Adam conceive of himself? What’s his self-model? Does he conceive of himself as a conscious being with a self and with value? It sounded for a while like everything he did was operating off a utilitarian calculus.

MCEWAN: Well, thanks to Turing solving positively P versus NP, his learning processes are incredibly sophisticated. With one bound, I'm free on that one. He is aware that he is a manufactured thing. He is very pleased that he’s not been given, as was discussed as a possibility, an imaginary childhood. He also knows that he’s got a twenty-year lifespan, but in fact that’s just the lifespan of his physical body.

The entirety of his identity and all his memories will emerge somewhere else within some other machine, and he feels great sorrow about this in relation to humans. He falls in love with the narrator’s girlfriend. Once he’s persuaded to stop making love to her, he just writes haikus to her. He believes that haikus are the literary form of the future because sooner or later humans will start to embody machinery into their own brains to keep up with robots.

Everyone will have instant access to the cloud or whatever its equivalent is, and this will be the end of the literary novel. The novel requires as its premise that we do not fully understand each other. The moment we fully understand each other and have no secrets is the end of literature, certainly the end of the novel. But the clear seventeen-syllable statement of how things are is for Adam the only literary form worth writing, and that’s what he writes. He addresses his final haiku to his loved one, Miranda, a haiku expressing regret that he will rejuvenate endlessly.

From the narrator’s point of view, the moment that he becomes converted to the certainty that Adam has consciousness is when Adam confesses with great embarrassment that he approached his girlfriend and asked if he may masturbate in front of her. Why simply imitate that action when there was so much loss of face involved?

In other words, was it a subjective experience he had to have? At this point, he finally accepts Adam as a fully conscious being, but it’s a secret he will always keep. In other words, if you had a machine who told you something and that was embarrassing about the machine, and you decided to keep that secret, in effect you’re accepting the full consciousness of that.

JONES: Does Adam know he’s a slave? Does he resent this?

MCEWAN: He starts out doing the dishes, but that doesn’t last.

WOLFRAM: He’s still an owned thing.

MCEWAN: He starts out an owned thing, and that doesn’t last.

WOLFRAM: Where does he get £86,000?

MCEWAN: Well, he owes that back. He starts playing the market a great deal, but I’m not going to tell you the plot.

CHALMERS: Where does his moral and decision theoretic code come from? At one point you were saying he was making all these ruthless moral decisions. Was that utilitarian calculus?

MCEWAN: Well, where do ours come from? A certain amount of hardwiring and a great deal of learning.

CHALMERS: If it's learning based on us, why does he end up being a ruthless utilitarian?

MCEWAN: Well, because he’s a little better than us.

CHALMERS: Better by whose lights?

MCEWAN: There comes a point where the narrator takes him to meet his prospective father-in-law who is a rather irritable, highly educated literary figure, a failed novelist, and they have a four-cornered conversation about Shakespeare. In the middle of the conversation, the old man, who’s something of a curmudgeon, thinks that the narrator is the robot, because the robot has such interesting ideas on Shakespeare and on James Joyce’s use of the notion of Hamlet playing the ghost in the first production of Hamlet and what’s entailed in that. When they come away, the narrator suddenly realizes that he has been mistaken for the robot and decides therefore to play along. He leaves the room saying, "Well, I’ve got to go downstairs and recharge."

GALISON: It sounds very funny, the novel. You’re constantly annihilating the novelist and the novel. Does Adam have a sense of humor or not?

MCEWAN: He does, yes. He has a sense of humor. He has everything a human would want.

BROCKMAN: So, we annihilated computer science as a discipline and now the novel.